Public Member Functions | |

| __init__ (self, ApdbCassandraConfig config) | |

| None | __del__ (self) |

| VersionTuple | apdbImplementationVersion (cls) |

| VersionTuple | apdbSchemaVersion (self) |

| Table|None | tableDef (self, ApdbTables table) |

| ApdbCassandraConfig | init_database (cls, list[str] hosts, str keyspace, *str|None schema_file=None, str|None schema_name=None, int|None read_sources_months=None, int|None read_forced_sources_months=None, bool use_insert_id=False, bool use_insert_id_skips_diaobjects=False, int|None port=None, str|None username=None, str|None prefix=None, str|None part_pixelization=None, int|None part_pix_level=None, bool time_partition_tables=True, str|None time_partition_start=None, str|None time_partition_end=None, str|None read_consistency=None, str|None write_consistency=None, int|None read_timeout=None, int|None write_timeout=None, list[str]|None ra_dec_columns=None, int|None replication_factor=None, bool drop=False) |

| pandas.DataFrame | getDiaObjects (self, sphgeom.Region region) |

| pandas.DataFrame|None | getDiaSources (self, sphgeom.Region region, Iterable[int]|None object_ids, astropy.time.Time visit_time) |

| pandas.DataFrame|None | getDiaForcedSources (self, sphgeom.Region region, Iterable[int]|None object_ids, astropy.time.Time visit_time) |

| bool | containsVisitDetector (self, int visit, int detector) |

| list[ApdbInsertId]|None | getInsertIds (self) |

| None | deleteInsertIds (self, Iterable[ApdbInsertId] ids) |

| ApdbTableData | getDiaObjectsHistory (self, Iterable[ApdbInsertId] ids) |

| ApdbTableData | getDiaSourcesHistory (self, Iterable[ApdbInsertId] ids) |

| ApdbTableData | getDiaForcedSourcesHistory (self, Iterable[ApdbInsertId] ids) |

| pandas.DataFrame | getSSObjects (self) |

| None | store (self, astropy.time.Time visit_time, pandas.DataFrame objects, pandas.DataFrame|None sources=None, pandas.DataFrame|None forced_sources=None) |

| None | storeSSObjects (self, pandas.DataFrame objects) |

| None | reassignDiaSources (self, Mapping[int, int] idMap) |

| None | dailyJob (self) |

| int | countUnassociatedObjects (self) |

| ApdbMetadata | metadata (self) |

Public Attributes | |

| config | |

| metadataSchemaVersionKey | |

| metadataCodeVersionKey | |

| metadataConfigKey | |

Static Public Attributes | |

| str | metadataSchemaVersionKey = "version:schema" |

| str | metadataCodeVersionKey = "version:ApdbCassandra" |

| str | metadataConfigKey = "config:apdb-cassandra.json" |

| partition_zero_epoch = astropy.time.Time(0, format="unix_tai") | |

Protected Member Functions | |

| tuple[Cluster, Session] | _make_session (cls, ApdbCassandraConfig config) |

| AuthProvider|None | _make_auth_provider (cls, ApdbCassandraConfig config) |

| None | _versionCheck (self, ApdbMetadataCassandra metadata) |

| None | _makeSchema (cls, ApdbConfig config, *bool drop=False, int|None replication_factor=None) |

| Mapping[Any, ExecutionProfile] | _makeProfiles (cls, ApdbCassandraConfig config) |

| pandas.DataFrame | _getSources (self, sphgeom.Region region, Iterable[int]|None object_ids, float mjd_start, float mjd_end, ApdbTables table_name) |

| ApdbTableData | _get_history (self, ExtraTables table, Iterable[ApdbInsertId] ids) |

| None | _storeInsertId (self, ApdbInsertId insert_id, astropy.time.Time visit_time) |

| None | _storeDiaObjects (self, pandas.DataFrame objs, astropy.time.Time visit_time, ApdbInsertId|None insert_id) |

| None | _storeDiaSources (self, ApdbTables table_name, pandas.DataFrame sources, astropy.time.Time visit_time, ApdbInsertId|None insert_id) |

| None | _storeDiaSourcesPartitions (self, pandas.DataFrame sources, astropy.time.Time visit_time, ApdbInsertId|None insert_id) |

| None | _storeObjectsPandas (self, pandas.DataFrame records, ApdbTables|ExtraTables table_name, Mapping|None extra_columns=None, int|None time_part=None) |

| pandas.DataFrame | _add_obj_part (self, pandas.DataFrame df) |

| pandas.DataFrame | _add_src_part (self, pandas.DataFrame sources, pandas.DataFrame objs) |

| pandas.DataFrame | _add_fsrc_part (self, pandas.DataFrame sources, pandas.DataFrame objs) |

| int | _time_partition_cls (cls, float|astropy.time.Time time, float epoch_mjd, int part_days) |

| int | _time_partition (self, float|astropy.time.Time time) |

| pandas.DataFrame | _make_empty_catalog (self, ApdbTables table_name) |

| Iterator[tuple[cassandra.query.Statement, tuple]] | _combine_where (self, str prefix, list[tuple[str, tuple]] where1, list[tuple[str, tuple]] where2, str|None suffix=None) |

| list[tuple[str, tuple]] | _spatial_where (self, sphgeom.Region|None region, bool use_ranges=False) |

| tuple[list[str], list[tuple[str, tuple]]] | _temporal_where (self, ApdbTables table, float|astropy.time.Time start_time, float|astropy.time.Time end_time, bool|None query_per_time_part=None) |

Protected Attributes | |

| _keyspace | |

| _cluster | |

| _session | |

| _metadata | |

| _pixelization | |

| _schema | |

| _partition_zero_epoch_mjd | |

| _preparer | |

Static Protected Attributes | |

| tuple | _frozen_parameters |

Detailed Description

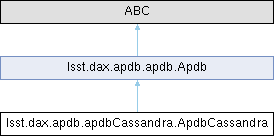

Implementation of APDB database on top of Apache Cassandra.

The implementation is configured via the standard ``pex_config`` mechanism

using the `ApdbCassandraConfig` configuration class. For examples of

different configurations, see the config/ folder.

Parameters

----------

config : `ApdbCassandraConfig`

Configuration object.

Definition at line 249 of file apdbCassandra.py.

Constructor & Destructor Documentation

◆ __init__()

| lsst.dax.apdb.apdbCassandra.ApdbCassandra.__init__ | ( | self, | |

| ApdbCassandraConfig | config ) |

Definition at line 285 of file apdbCassandra.py.

◆ __del__()

| None lsst.dax.apdb.apdbCassandra.ApdbCassandra.__del__ | ( | self | ) |

Definition at line 335 of file apdbCassandra.py.

Member Function Documentation

◆ _add_fsrc_part()

|

protected |

Add apdb_part column to DiaForcedSource catalog.

Notes

-----

This method copies apdb_part value from a matching DiaObject record.

DiaObject catalog needs to have an apdb_part column filled by

``_add_obj_part`` method and DiaSource records need to be

associated to DiaObjects via ``diaObjectId`` column.

This overrides any existing column in a DataFrame with the same name

(apdb_part). Original DataFrame is not changed, copy of a DataFrame is

returned.

Definition at line 1311 of file apdbCassandra.py.

◆ _add_obj_part()

|

protected |

Calculate spatial partition for each record and add it to a DataFrame.

Notes

-----

This overrides any existing column in a DataFrame with the same name

(apdb_part). Original DataFrame is not changed, copy of a DataFrame is

returned.

Definition at line 1255 of file apdbCassandra.py.

◆ _add_src_part()

|

protected |

Add apdb_part column to DiaSource catalog.

Notes

-----

This method copies apdb_part value from a matching DiaObject record.

DiaObject catalog needs to have an apdb_part column filled by

``_add_obj_part`` method and DiaSource records need to be

associated to DiaObjects via ``diaObjectId`` column.

This overrides any existing column in a DataFrame with the same name

(apdb_part). Original DataFrame is not changed, copy of a DataFrame is

returned.

Definition at line 1276 of file apdbCassandra.py.

◆ _combine_where()

|

protected |

Make cartesian product of two parts of WHERE clause into a series

of statements to execute.

Parameters

----------

prefix : `str`

Initial statement prefix that comes before WHERE clause, e.g.

"SELECT * from Table"

Definition at line 1405 of file apdbCassandra.py.
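As a rough illustration of the Cartesian-product step, the sketch below combines two lists of (expression, parameters) tuples into complete queries. It is a simplified stand-in rather than the method's actual code: the name `combine_where` is hypothetical, it yields plain query strings instead of `cassandra.query.Statement` objects, and treating an empty list as "no condition" is an assumption.

```python
from itertools import product
from typing import Iterator, Optional


def combine_where(
    prefix: str,
    where1: list[tuple[str, tuple]],
    where2: list[tuple[str, tuple]],
    suffix: Optional[str] = None,
) -> Iterator[tuple[str, tuple]]:
    """Yield one (query, parameters) pair per combination of the two lists.

    Hypothetical helper: illustrates the idea only.
    """
    # Assumption: an empty list contributes a single "no condition" entry,
    # so the product still yields the conditions from the other list.
    parts1 = where1 or [("", ())]
    parts2 = where2 or [("", ())]
    for (expr1, params1), (expr2, params2) in product(parts1, parts2):
        conditions = [expr for expr in (expr1, expr2) if expr]
        query = prefix
        if conditions:
            query += " WHERE " + " AND ".join(conditions)
        if suffix:
            query += " " + suffix
        # Parameters are concatenated in the same order as the expressions.
        yield query, params1 + params2


# Two spatial conditions times one temporal condition gives two queries.
spatial = [('"apdb_part" = ?', (10,)), ('"apdb_part" = ?', (11,))]
temporal = [('"apdb_time_part" = ?', (650,))]
queries = list(combine_where('SELECT * from "DiaSource"', spatial, temporal))
```

Splitting one large query into many small ones in this way lets each statement target a single partition, at the cost of issuing more statements.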

◆ _get_history()

|

protected |

Return records from a particular table given set of insert IDs.

Definition at line 1037 of file apdbCassandra.py.

◆ _getSources()

|

protected |

Return catalog of DiaSource instances given set of DiaObject IDs.

Parameters

----------

region : `lsst.sphgeom.Region`

Spherical region.

object_ids :

Collection of DiaObject IDs

mjd_start : `float`

Lower bound of time interval.

mjd_end : `float`

Upper bound of time interval.

table_name : `ApdbTables`

Name of the table.

Returns

-------

catalog : `pandas.DataFrame`, or `None`

Catalog containing DiaSource records. Empty catalog is returned if

``object_ids`` is empty.

Definition at line 970 of file apdbCassandra.py.

◆ _make_auth_provider()

|

protected |

Make Cassandra authentication provider instance.

Definition at line 361 of file apdbCassandra.py.

◆ _make_empty_catalog()

|

protected |

Make an empty catalog for a table with a given name.

Parameters

----------

table_name : `ApdbTables`

Name of the table.

Returns

-------

catalog : `pandas.DataFrame`

An empty catalog.

Definition at line 1384 of file apdbCassandra.py.

◆ _make_session()

|

protected |

Make Cassandra session.

Definition at line 340 of file apdbCassandra.py.

◆ _makeProfiles()

|

protected |

Make all execution profiles used in the code.

Definition at line 911 of file apdbCassandra.py.

◆ _makeSchema()

|

protected |

Definition at line 573 of file apdbCassandra.py.

◆ _spatial_where()

|

protected |

Generate expressions for spatial part of WHERE clause.

Parameters

----------

region : `sphgeom.Region`

Spatial region for query results.

use_ranges : `bool`

If True then use pixel ranges ("apdb_part >= p1 AND apdb_part <=

p2") instead of exact list of pixels. Should be set to True for

large regions covering very many pixels.

Returns

-------

expressions : `list` [ `tuple` ]

Empty list is returned if ``region`` is `None`, otherwise a list

of one or more (expression, parameters) tuples

Definition at line 1449 of file apdbCassandra.py.
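The difference between the two modes of ``use_ranges`` can be sketched as follows. This is an illustration under stated assumptions, not the actual implementation: the function name `spatial_where` is hypothetical, and it takes precomputed half-open pixel index ranges `(begin, end)` instead of deriving them from a `sphgeom.Region` and a pixelization.

```python
from typing import Optional


def spatial_where(
    pixel_ranges: Optional[list[tuple[int, int]]], use_ranges: bool = False
) -> list[tuple[str, tuple]]:
    """Build (expression, parameters) tuples for the apdb_part column.

    ``pixel_ranges`` holds half-open ``(begin, end)`` ranges and is assumed
    to be non-empty when it is not `None` (hypothetical input format).
    """
    if pixel_ranges is None:
        # No region given: no spatial constraint at all.
        return []
    expressions: list[tuple[str, tuple]] = []
    if use_ranges:
        # One expression per range; cheap to build for very large regions.
        for begin, end in pixel_ranges:
            if end - begin == 1:
                expressions.append(('"apdb_part" = ?', (begin,)))
            else:
                expressions.append(
                    ('"apdb_part" >= ? AND "apdb_part" <= ?', (begin, end - 1))
                )
    else:
        # Exact list of pixels, expanded from the ranges.
        pixels = [p for begin, end in pixel_ranges for p in range(begin, end)]
        if len(pixels) == 1:
            expressions.append(('"apdb_part" = ?', (pixels[0],)))
        else:
            placeholders = ",".join("?" * len(pixels))
            expressions.append((f'"apdb_part" IN ({placeholders})', tuple(pixels)))
    return expressions
```

With ranges, the number of query parameters grows with the number of ranges rather than the number of pixels, which is why that mode suits regions covering very many pixels.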

◆ _storeDiaObjects()

|

protected |

Store catalog of DiaObjects from current visit.

Parameters

----------

objs : `pandas.DataFrame`

Catalog with DiaObject records

visit_time : `astropy.time.Time`

Time of the current visit.

Definition at line 1077 of file apdbCassandra.py.

◆ _storeDiaSources()

|

protected |

Store catalog of DIASources or DIAForcedSources from current visit.

Parameters

----------

sources : `pandas.DataFrame`

Catalog containing DiaSource records

visit_time : `astropy.time.Time`

Time of the current visit.

Definition at line 1114 of file apdbCassandra.py.

◆ _storeDiaSourcesPartitions()

|

protected |

Store mapping of diaSourceId to its partitioning values.

Parameters

----------

sources : `pandas.DataFrame`

Catalog containing DiaSource records

visit_time : `astropy.time.Time`

Time of the current visit.

Definition at line 1146 of file apdbCassandra.py.

◆ _storeInsertId()

|

protected |

Definition at line 1057 of file apdbCassandra.py.

◆ _storeObjectsPandas()

|

protected |

Store generic objects.

Takes Pandas catalog and stores a bunch of records in a table.

Parameters

----------

records : `pandas.DataFrame`

Catalog containing object records

table_name : `ApdbTables`

Name of the table as defined in APDB schema.

extra_columns : `dict`, optional

Mapping (column_name, column_value) which gives fixed values for

columns in each row, overrides values in ``records`` if matching

columns exist there.

time_part : `int`, optional

If not `None` then insert into a per-partition table.

Notes

-----

If Pandas catalog contains additional columns not defined in table

schema they are ignored. Catalog does not have to contain all columns

defined in a table, but partition and clustering keys must be present

in a catalog or ``extra_columns``.

Definition at line 1168 of file apdbCassandra.py.
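The column-selection rules in the notes above can be sketched in plain Python. This is a hedged illustration, not the method's code: `build_insert_rows` is a hypothetical helper, a list of dictionaries stands in for the `pandas.DataFrame`, and raising `ValueError` for missing key columns is an assumption.

```python
from typing import Any, Mapping, Optional


def build_insert_rows(
    records: list[Mapping[str, Any]],
    table_columns: list[str],
    key_columns: list[str],
    extra_columns: Optional[Mapping[str, Any]] = None,
) -> tuple[list[str], list[tuple]]:
    """Return the columns to insert and one value tuple per record."""
    extra = dict(extra_columns or {})
    record_columns = set(records[0]) if records else set()
    # Keep only columns defined in the table schema; anything else in the
    # catalog is silently ignored.
    insert_columns = [
        col for col in table_columns if col in extra or col in record_columns
    ]
    # Partition and clustering keys must come from the catalog or the extras.
    missing = [key for key in key_columns if key not in insert_columns]
    if missing:
        raise ValueError(f"Missing key columns: {missing}")
    # Fixed values from extra_columns override per-record values.
    rows = [
        tuple(extra[col] if col in extra else record[col] for col in insert_columns)
        for record in records
    ]
    return insert_columns, rows


records = [{"diaObjectId": 1, "ra": 10.0, "scratch": "x", "apdb_part": 0}]
columns, rows = build_insert_rows(
    records,
    table_columns=["apdb_part", "diaObjectId", "ra", "dec"],
    key_columns=["apdb_part", "diaObjectId"],
    extra_columns={"apdb_part": 42},
)
```

In this example the ``scratch`` column is dropped because the table does not define it, ``dec`` is omitted because the catalog does not provide it, and ``apdb_part`` takes the fixed value from ``extra_columns``.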

◆ _temporal_where()

|

protected |

Generate table names and expressions for temporal part of WHERE

clauses.

Parameters

----------

table : `ApdbTables`

Table to select from.

start_time : `astropy.time.Time` or `float`

Start of the time range, as an `astropy.time.Time` or an MJD value.

end_time : `astropy.time.Time` or `float`

End of the time range, as an `astropy.time.Time` or an MJD value.

query_per_time_part : `bool`, optional

If None then use ``query_per_time_part`` from configuration.

Returns

-------

tables : `list` [ `str` ]

List of the table names to query.

expressions : `list` [ `tuple` ]

A list of zero or more (expression, parameters) tuples.

Definition at line 1489 of file apdbCassandra.py.

◆ _time_partition()

|

protected |

Calculate time partition number for a given time.

Parameters

----------

time : `float` or `astropy.time.Time`

Time for which to calculate partition number. Can be float to mean

MJD or `astropy.time.Time`

Returns

-------

partition : `int`

Partition number for a given time.

Definition at line 1362 of file apdbCassandra.py.

◆ _time_partition_cls()

|

protected |

Calculate time partition number for a given time.

Parameters

----------

time : `float` or `astropy.time.Time`

Time for which to calculate partition number. Can be float to mean

MJD or `astropy.time.Time`

epoch_mjd : `float`

Epoch time for partition 0.

part_days : `int`

Number of days per partition.

Returns

-------

partition : `int`

Partition number for a given time.

Definition at line 1336 of file apdbCassandra.py.
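The partition number amounts to counting whole partitions elapsed since the epoch. The sketch below shows that arithmetic under stated assumptions: the function name `time_partition` is hypothetical, it accepts only MJD floats (an `astropy.time.Time` would first be converted through its ``mjd`` attribute), the epoch value 40587.0 assumes that `partition_zero_epoch` corresponds to 1970-01-01, the 30-day partition length is an example value, and the rounding used by the real method may differ for times before the epoch.

```python
import math


def time_partition(mjd: float, epoch_mjd: float, part_days: int) -> int:
    """Return the number of whole partitions between epoch_mjd and mjd."""
    days_since_epoch = mjd - epoch_mjd
    # Floor division: every time inside the same part_days-long window
    # maps to the same partition number.
    return math.floor(days_since_epoch / part_days)


EPOCH_MJD = 40587.0  # assumed MJD of partition zero (1970-01-01)
PART_DAYS = 30  # example partition length in days

partition = time_partition(60000.0, EPOCH_MJD, PART_DAYS)
```

For MJD 60000.0 this gives (60000 - 40587) / 30 = 647.1, so the time falls into partition 647.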

◆ _versionCheck()

|

protected |

Check schema version compatibility.

Definition at line 393 of file apdbCassandra.py.

◆ apdbImplementationVersion()

| VersionTuple lsst.dax.apdb.apdbCassandra.ApdbCassandra.apdbImplementationVersion | ( | cls | ) |

Return version number for current APDB implementation.

Returns

-------

version : `VersionTuple`

Version of the code defined in implementation class.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 426 of file apdbCassandra.py.

◆ apdbSchemaVersion()

| VersionTuple lsst.dax.apdb.apdbCassandra.ApdbCassandra.apdbSchemaVersion | ( | self | ) |

Return schema version number as defined in config file.

Returns

-------

version : `VersionTuple`

Version of the schema defined in schema config file.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 430 of file apdbCassandra.py.

◆ containsVisitDetector()

| bool lsst.dax.apdb.apdbCassandra.ApdbCassandra.containsVisitDetector | ( | self, | |

| int | visit, | ||

| int | detector ) |

Test whether data for a given visit-detector is present in the APDB.

Parameters

----------

visit, detector : `int`

The ID of the visit-detector to search for.

Returns

-------

present : `bool`

`True` if some DiaObject, DiaSource, or DiaForcedSource records

exist for the specified observation, `False` otherwise.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 681 of file apdbCassandra.py.

◆ countUnassociatedObjects()

| int lsst.dax.apdb.apdbCassandra.ApdbCassandra.countUnassociatedObjects | ( | self | ) |

Return the number of DiaObjects that have only one DiaSource

associated with them.

Used as part of ap_verify metrics.

Returns

-------

count : `int`

Number of DiaObjects with exactly one associated DiaSource.

Notes

-----

This method can be very inefficient or slow in some implementations.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 897 of file apdbCassandra.py.
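To make the definition of the metric concrete, the following sketch counts objects with exactly one associated source from an in-memory list of ``diaObjectId`` values, one entry per DiaSource. It only illustrates what is being counted; the helper `count_unassociated` is hypothetical and says nothing about how this or any other backend computes the number.

```python
from collections import Counter
from typing import Iterable


def count_unassociated(source_object_ids: Iterable[int]) -> int:
    """Count DiaObject IDs that appear exactly once among the sources."""
    sources_per_object = Counter(source_object_ids)
    return sum(1 for count in sources_per_object.values() if count == 1)


# Objects 2 and 4 each have a single DiaSource.
n_unassociated = count_unassociated([1, 1, 2, 3, 3, 3, 4])
```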

◆ dailyJob()

| None lsst.dax.apdb.apdbCassandra.ApdbCassandra.dailyJob | ( | self | ) |

Implement daily activities like cleanup/vacuum. What should be done during daily activities is determined by specific implementation.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 893 of file apdbCassandra.py.

◆ deleteInsertIds()

| None lsst.dax.apdb.apdbCassandra.ApdbCassandra.deleteInsertIds | ( | self, | |

| Iterable[ApdbInsertId] | ids ) |

Remove insert identifiers from the database.

Parameters

----------

ids : `iterable` [`ApdbInsertId`]

Insert identifiers, can include items returned from `getInsertIds`.

Notes

-----

This method causes Apdb to forget about specified identifiers. If there

are any auxiliary data associated with the identifiers, it is also

removed from database (but data in regular tables is not removed).

This method should be called after successful transfer of data from

APDB to PPDB to free space used by history.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 709 of file apdbCassandra.py.

◆ getDiaForcedSources()

| pandas.DataFrame | None lsst.dax.apdb.apdbCassandra.ApdbCassandra.getDiaForcedSources | ( | self, | |

| sphgeom.Region | region, | ||

| Iterable[int] | None | object_ids, | ||

| astropy.time.Time | visit_time ) |

Return catalog of DiaForcedSource instances from a given region.

Parameters

----------

region : `lsst.sphgeom.Region`

Region to search for DIASources.

object_ids : iterable [ `int` ], optional

List of DiaObject IDs to further constrain the set of returned

sources. If list is empty then empty catalog is returned with a

correct schema. If `None` then returned sources are not

constrained. Some implementations may not support latter case.

visit_time : `astropy.time.Time`

Time of the current visit.

Returns

-------

catalog : `pandas.DataFrame`, or `None`

Catalog containing DiaForcedSource records. `None` is returned if

``read_forced_sources_months`` configuration parameter is set to 0.

Raises

------

NotImplementedError

May be raised by some implementations if ``object_ids`` is `None`.

Notes

-----

This method returns DiaForcedSource catalog for a region with

additional filtering based on DiaObject IDs. Only a subset of DiaForcedSource

history is returned limited by ``read_forced_sources_months`` config

parameter, w.r.t. ``visit_time``. If ``object_ids`` is empty then an

empty catalog is always returned with the correct schema

(columns/types). If ``object_ids`` is `None` then no filtering is

performed and some of the returned records may be outside the specified

region.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 669 of file apdbCassandra.py.

◆ getDiaForcedSourcesHistory()

| ApdbTableData lsst.dax.apdb.apdbCassandra.ApdbCassandra.getDiaForcedSourcesHistory | ( | self, | |

| Iterable[ApdbInsertId] | ids ) |

Return catalog of DiaForcedSource instances from a given time

period.

Parameters

----------

ids : `iterable` [`ApdbInsertId`]

Insert identifiers, can include items returned from `getInsertIds`.

Returns

-------

data : `ApdbTableData`

Catalog containing DiaForcedSource records. In addition to all

regular columns it will contain ``insert_id`` column.

Notes

-----

This part of API may not be very stable and can change before the

implementation finalizes.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 756 of file apdbCassandra.py.

◆ getDiaObjects()

| pandas.DataFrame lsst.dax.apdb.apdbCassandra.ApdbCassandra.getDiaObjects | ( | self, | |

| sphgeom.Region | region ) |

Return catalog of DiaObject instances from a given region.

This method returns only the last version of each DiaObject. Some

records in a returned catalog may be outside the specified region; it

is up to a client to ignore those records or clean up the catalog before

further use.

Parameters

----------

region : `lsst.sphgeom.Region`

Region to search for DIAObjects.

Returns

-------

catalog : `pandas.DataFrame`

Catalog containing DiaObject records for a region that may be a

superset of the specified region.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 621 of file apdbCassandra.py.

◆ getDiaObjectsHistory()

| ApdbTableData lsst.dax.apdb.apdbCassandra.ApdbCassandra.getDiaObjectsHistory | ( | self, | |

| Iterable[ApdbInsertId] | ids ) |

Return catalog of DiaObject instances from a given time period

including the history of each DiaObject.

Parameters

----------

ids : `iterable` [`ApdbInsertId`]

Insert identifiers, can include items returned from `getInsertIds`.

Returns

-------

data : `ApdbTableData`

Catalog containing DiaObject records. In addition to all regular

columns it will contain ``insert_id`` column.

Notes

-----

This part of API may not be very stable and can change before the

implementation finalizes.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 748 of file apdbCassandra.py.

◆ getDiaSources()

| pandas.DataFrame | None lsst.dax.apdb.apdbCassandra.ApdbCassandra.getDiaSources | ( | self, | |

| sphgeom.Region | region, | ||

| Iterable[int] | None | object_ids, | ||

| astropy.time.Time | visit_time ) |

Return catalog of DiaSource instances from a given region.

Parameters

----------

region : `lsst.sphgeom.Region`

Region to search for DIASources.

object_ids : iterable [ `int` ], optional

List of DiaObject IDs to further constrain the set of returned

sources. If `None` then returned sources are not constrained. If

list is empty then empty catalog is returned with a correct

schema.

visit_time : `astropy.time.Time`

Time of the current visit.

Returns

-------

catalog : `pandas.DataFrame`, or `None`

Catalog containing DiaSource records. `None` is returned if

``read_sources_months`` configuration parameter is set to 0.

Notes

-----

This method returns DiaSource catalog for a region with additional

filtering based on DiaObject IDs. Only a subset of DiaSource history

is returned limited by ``read_sources_months`` config parameter, w.r.t.

``visit_time``. If ``object_ids`` is empty then an empty catalog is

always returned with the correct schema (columns/types). If

``object_ids`` is `None` then no filtering is performed and some of the

returned records may be outside the specified region.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 657 of file apdbCassandra.py.
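The behaviour described in the notes has two parts: the time window derived from ``read_sources_months`` and the three cases for ``object_ids``. The sketch below illustrates both in plain Python. Both helper names are hypothetical, dictionaries stand in for catalog rows, and the conversion of one month to 30 days is an assumption rather than a documented fact.

```python
from typing import Any, Iterable, Mapping, Optional


def source_time_window(
    visit_mjd: float, months: int, days_per_month: int = 30
) -> Optional[tuple[float, float]]:
    """Return (mjd_start, mjd_end), or None if reading is disabled."""
    if months == 0:
        # Mirrors the documented case where the method returns `None`.
        return None
    return visit_mjd - months * days_per_month, visit_mjd


def filter_by_object_ids(
    records: Iterable[Mapping[str, Any]], object_ids: Optional[Iterable[int]]
) -> list[Mapping[str, Any]]:
    """Apply the object_ids constraint to already selected records."""
    if object_ids is None:
        # No constraint: everything found for the region is returned.
        return list(records)
    wanted = set(object_ids)
    # An empty collection of IDs naturally produces an empty result.
    return [rec for rec in records if rec["diaObjectId"] in wanted]


window = source_time_window(60000.0, months=12)
rows = [{"diaSourceId": 1, "diaObjectId": 7}, {"diaSourceId": 2, "diaObjectId": 8}]
selected = filter_by_object_ids(rows, [8])
```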

◆ getDiaSourcesHistory()

| ApdbTableData lsst.dax.apdb.apdbCassandra.ApdbCassandra.getDiaSourcesHistory | ( | self, | |

| Iterable[ApdbInsertId] | ids ) |

Return catalog of DiaSource instances from a given time period.

Parameters

----------

ids : `iterable` [`ApdbInsertId`]

Insert identifiers, can include items returned from `getInsertIds`.

Returns

-------

data : `ApdbTableData`

Catalog containing DiaSource records. In addition to all regular

columns it will contain ``insert_id`` column.

Notes

-----

This part of API may not be very stable and can change before the

implementation finalizes.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 752 of file apdbCassandra.py.

◆ getInsertIds()

| list[ApdbInsertId] | None lsst.dax.apdb.apdbCassandra.ApdbCassandra.getInsertIds | ( | self | ) |

Return collection of insert identifiers known to the database.

Returns

-------

ids : `list` [`ApdbInsertId`] or `None`

List of identifiers; they may be time-ordered if database supports

ordering. `None` is returned if database is not configured to store

insert identifiers.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 685 of file apdbCassandra.py.

◆ getSSObjects()

| pandas.DataFrame lsst.dax.apdb.apdbCassandra.ApdbCassandra.getSSObjects | ( | self | ) |

Return catalog of SSObject instances.

Returns

-------

catalog : `pandas.DataFrame`

Catalog containing SSObject records, all existing records are

returned.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 760 of file apdbCassandra.py.

◆ init_database()

| ApdbCassandraConfig lsst.dax.apdb.apdbCassandra.ApdbCassandra.init_database | ( | cls, | |

| list[str] | hosts, | ||

| str | keyspace, | ||

| *str | None | schema_file = None, | ||

| str | None | schema_name = None, | ||

| int | None | read_sources_months = None, | ||

| int | None | read_forced_sources_months = None, | ||

| bool | use_insert_id = False, | ||

| bool | use_insert_id_skips_diaobjects = False, | ||

| int | None | port = None, | ||

| str | None | username = None, | ||

| str | None | prefix = None, | ||

| str | None | part_pixelization = None, | ||

| int | None | part_pix_level = None, | ||

| bool | time_partition_tables = True, | ||

| str | None | time_partition_start = None, | ||

| str | None | time_partition_end = None, | ||

| str | None | read_consistency = None, | ||

| str | None | write_consistency = None, | ||

| int | None | read_timeout = None, | ||

| int | None | write_timeout = None, | ||

| list[str] | None | ra_dec_columns = None, | ||

| int | None | replication_factor = None, | ||

| bool | drop = False ) |

Initialize new APDB instance and make configuration object for it.

Parameters

----------

hosts : `list` [`str`]

List of host names or IP addresses for Cassandra cluster.

keyspace : `str`

Name of the keyspace for APDB tables.

schema_file : `str`, optional

Location of (YAML) configuration file with APDB schema. If not

specified then default location will be used.

schema_name : `str`, optional

Name of the schema in YAML configuration file. If not specified

then default name will be used.

read_sources_months : `int`, optional

Number of months of history to read from DiaSource.

read_forced_sources_months : `int`, optional

Number of months of history to read from DiaForcedSource.

use_insert_id : `bool`, optional

If True, make additional tables used for replication to PPDB.

use_insert_id_skips_diaobjects : `bool`, optional

If `True` then do not fill regular ``DiaObject`` table when

``use_insert_id`` is `True`.

port : `int`, optional

Port number to use for Cassandra connections.

username : `str`, optional

User name for Cassandra connections.

prefix : `str`, optional

Optional prefix for all table names.

part_pixelization : `str`, optional

Name of the MOC pixelization used for partitioning.

part_pix_level : `int`, optional

Pixelization level.

time_partition_tables : `bool`, optional

Create per-partition tables.

time_partition_start : `str`, optional

Starting time for per-partition tables, in yyyy-mm-ddThh:mm:ss

format, in TAI.

time_partition_end : `str`, optional

Ending time for per-partition tables, in yyyy-mm-ddThh:mm:ss

format, in TAI.

read_consistency : `str`, optional

Name of the consistency level for read operations.

write_consistency : `str`, optional

Name of the consistency level for write operations.

read_timeout : `int`, optional

Read timeout in seconds.

write_timeout : `int`, optional

Write timeout in seconds.

ra_dec_columns : `list` [`str`], optional

Names of ra/dec columns in DiaObject table.

replication_factor : `int`, optional

Replication factor used when creating new keyspace, if keyspace

already exists its replication factor is not changed.

drop : `bool`, optional

If `True` then drop existing tables before re-creating the schema.

Returns

-------

config : `ApdbCassandraConfig`

Resulting configuration object for a created APDB instance.

Definition at line 439 of file apdbCassandra.py.
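A call to this method might be prepared along the following lines. This is a sketch under assumptions: the host names, keyspace name, and all values are invented for illustration, the helper `check_partition_range` is hypothetical client-side validation and not part of the API, and the actual call is left as a comment because it needs the `lsst.dax.apdb` package and a reachable Cassandra cluster.

```python
from datetime import datetime

# Format documented for time_partition_start / time_partition_end.
TIME_FORMAT = "%Y-%m-%dT%H:%M:%S"


def check_partition_range(start: str, end: str) -> None:
    """Check both strings use the documented format and are ordered.

    Only the textual format is checked here; the values are meant to be
    TAI times, which a naive datetime does not model.
    """
    start_time = datetime.strptime(start, TIME_FORMAT)  # ValueError if malformed
    end_time = datetime.strptime(end, TIME_FORMAT)
    if end_time <= start_time:
        raise ValueError("time_partition_end must be later than time_partition_start")


# All values below are illustrative assumptions, not recommended settings.
init_kwargs = {
    "hosts": ["cassandra-1.example.org", "cassandra-2.example.org"],
    "keyspace": "apdb_example",
    "read_sources_months": 12,
    "read_forced_sources_months": 12,
    "time_partition_tables": True,
    "time_partition_start": "2024-01-01T00:00:00",
    "time_partition_end": "2025-01-01T00:00:00",
    "replication_factor": 3,
    "drop": False,
}

check_partition_range(
    init_kwargs["time_partition_start"], init_kwargs["time_partition_end"]
)

# With the LSST stack and a cluster available, the call would look like:
# from lsst.dax.apdb.apdbCassandra import ApdbCassandra
# config = ApdbCassandra.init_database(**init_kwargs)
```

Passing every argument by keyword matches the signature, where everything after ``keyspace`` is keyword-only.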

◆ metadata()

| ApdbMetadata lsst.dax.apdb.apdbCassandra.ApdbCassandra.metadata | ( | self | ) |

Object controlling access to APDB metadata (`ApdbMetadata`).

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 904 of file apdbCassandra.py.

◆ reassignDiaSources()

| None lsst.dax.apdb.apdbCassandra.ApdbCassandra.reassignDiaSources | ( | self, | |

| Mapping[int, int] | idMap ) |

Associate DiaSources with SSObjects, dis-associating them

from DiaObjects.

Parameters

----------

idMap : `Mapping`

Maps DiaSource IDs to their new SSObject IDs.

Raises

------

ValueError

Raised if DiaSource ID does not exist in the database.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 805 of file apdbCassandra.py.

◆ store()

| None lsst.dax.apdb.apdbCassandra.ApdbCassandra.store | ( | self, | |

| astropy.time.Time | visit_time, | ||

| pandas.DataFrame | objects, | ||

| pandas.DataFrame | None | sources = None, | ||

| pandas.DataFrame | None | forced_sources = None ) |

Store all three types of catalogs in the database.

Parameters

----------

visit_time : `astropy.time.Time`

Time of the visit.

objects : `pandas.DataFrame`

Catalog with DiaObject records.

sources : `pandas.DataFrame`, optional

Catalog with DiaSource records.

forced_sources : `pandas.DataFrame`, optional

Catalog with DiaForcedSource records.

Notes

-----

This method takes DataFrame catalogs whose schema must be

compatible with the schema of the APDB tables:

- column names must correspond to database table columns

- types and units of the columns must match database definitions,

no unit conversion is performed presently

- columns that have default values in database schema can be

omitted from catalog

- this method knows how to fill interval-related columns of DiaObject

(validityStart, validityEnd), so they do not need to appear in a

catalog

- source catalogs have ``diaObjectId`` column associating sources

with objects

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 773 of file apdbCassandra.py.

◆ storeSSObjects()

| None lsst.dax.apdb.apdbCassandra.ApdbCassandra.storeSSObjects | ( | self, | |

| pandas.DataFrame | objects ) |

Store or update SSObject catalog.

Parameters

----------

objects : `pandas.DataFrame`

Catalog with SSObject records.

Notes

-----

If SSObjects with matching IDs already exist in the database, their

records will be updated with the information from provided records.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 801 of file apdbCassandra.py.

◆ tableDef()

| Table | None lsst.dax.apdb.apdbCassandra.ApdbCassandra.tableDef | ( | self, | |

| ApdbTables | table ) |

Return table schema definition for a given table.

Parameters

----------

table : `ApdbTables`

One of the known APDB tables.

Returns

-------

tableSchema : `felis.simple.Table` or `None`

Table schema description, `None` is returned if table is not

defined by this implementation.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 434 of file apdbCassandra.py.

Member Data Documentation

◆ _cluster

|

protected |

Definition at line 291 of file apdbCassandra.py.

◆ _frozen_parameters

|

staticprotected |

Definition at line 271 of file apdbCassandra.py.

◆ _keyspace

|

protected |

Definition at line 289 of file apdbCassandra.py.

◆ _metadata

|

protected |

Definition at line 294 of file apdbCassandra.py.

◆ _partition_zero_epoch_mjd

|

protected |

Definition at line 323 of file apdbCassandra.py.

◆ _pixelization

|

protected |

Definition at line 308 of file apdbCassandra.py.

◆ _preparer

|

protected |

Definition at line 329 of file apdbCassandra.py.

◆ _schema

|

protected |

Definition at line 314 of file apdbCassandra.py.

◆ _session

|

protected |

Definition at line 291 of file apdbCassandra.py.

◆ config

| lsst.dax.apdb.apdbCassandra.ApdbCassandra.config |

Definition at line 303 of file apdbCassandra.py.

◆ metadataCodeVersionKey [1/2]

|

static |

Definition at line 265 of file apdbCassandra.py.

◆ metadataCodeVersionKey [2/2]

| lsst.dax.apdb.apdbCassandra.ApdbCassandra.metadataCodeVersionKey |

Definition at line 613 of file apdbCassandra.py.

◆ metadataConfigKey [1/2]

|

static |

Definition at line 268 of file apdbCassandra.py.

◆ metadataConfigKey [2/2]

| lsst.dax.apdb.apdbCassandra.ApdbCassandra.metadataConfigKey |

Definition at line 617 of file apdbCassandra.py.

◆ metadataSchemaVersionKey [1/2]

|

static |

Definition at line 262 of file apdbCassandra.py.

◆ metadataSchemaVersionKey [2/2]

| lsst.dax.apdb.apdbCassandra.ApdbCassandra.metadataSchemaVersionKey |

Definition at line 612 of file apdbCassandra.py.

◆ partition_zero_epoch

|

static |

Definition at line 282 of file apdbCassandra.py.

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/lsst-scipipe-8.0.0/Linux64/dax_apdb/gd2a12a3803+f8351bc914/python/lsst/dax/apdb/apdbCassandra.py