Public Member Functions | |

| __init__ (self, ApdbSqlConfig config) | |

| VersionTuple | apdbImplementationVersion (cls) |

| ApdbSqlConfig | init_database (cls, str db_url, *str|None schema_file=None, str|None schema_name=None, int|None read_sources_months=None, int|None read_forced_sources_months=None, bool use_insert_id=False, int|None connection_timeout=None, str|None dia_object_index=None, int|None htm_level=None, str|None htm_index_column=None, list[str]|None ra_dec_columns=None, str|None prefix=None, str|None namespace=None, bool drop=False) |

| VersionTuple | apdbSchemaVersion (self) |

| dict[str, int] | tableRowCount (self) |

| Table|None | tableDef (self, ApdbTables table) |

| pandas.DataFrame | getDiaObjects (self, Region region) |

| pandas.DataFrame|None | getDiaSources (self, Region region, Iterable[int]|None object_ids, astropy.time.Time visit_time) |

| pandas.DataFrame|None | getDiaForcedSources (self, Region region, Iterable[int]|None object_ids, astropy.time.Time visit_time) |

| bool | containsVisitDetector (self, int visit, int detector) |

| bool | containsCcdVisit (self, int ccdVisitId) |

| list[ApdbInsertId]|None | getInsertIds (self) |

| None | deleteInsertIds (self, Iterable[ApdbInsertId] ids) |

| ApdbTableData | getDiaObjectsHistory (self, Iterable[ApdbInsertId] ids) |

| ApdbTableData | getDiaSourcesHistory (self, Iterable[ApdbInsertId] ids) |

| ApdbTableData | getDiaForcedSourcesHistory (self, Iterable[ApdbInsertId] ids) |

| pandas.DataFrame | getSSObjects (self) |

| None | store (self, astropy.time.Time visit_time, pandas.DataFrame objects, pandas.DataFrame|None sources=None, pandas.DataFrame|None forced_sources=None) |

| None | storeSSObjects (self, pandas.DataFrame objects) |

| None | reassignDiaSources (self, Mapping[int, int] idMap) |

| None | dailyJob (self) |

| int | countUnassociatedObjects (self) |

| ApdbMetadata | metadata (self) |

Public Attributes | |

| config | |

| pixelator | |

| use_insert_id | |

| metadataSchemaVersionKey | |

| metadataCodeVersionKey | |

| metadataConfigKey | |

Static Public Attributes | |

| ConfigClass = ApdbSqlConfig | |

| str | metadataSchemaVersionKey = "version:schema" |

| str | metadataCodeVersionKey = "version:ApdbSql" |

| str | metadataConfigKey = "config:apdb-sql.json" |

Protected Member Functions | |

| sqlalchemy.engine.Engine | _makeEngine (cls, ApdbSqlConfig config) |

| None | _versionCheck (self, ApdbMetadataSql metadata) |

| None | _makeSchema (cls, ApdbConfig config, bool drop=False) |

| ApdbTableData | _get_history (self, Iterable[ApdbInsertId] ids, ApdbTables table_enum, ExtraTables history_table_enum) |

| pandas.DataFrame | _getDiaSourcesInRegion (self, Region region, astropy.time.Time visit_time) |

| pandas.DataFrame | _getDiaSourcesByIDs (self, list[int] object_ids, astropy.time.Time visit_time) |

| pandas.DataFrame | _getSourcesByIDs (self, ApdbTables table_enum, list[int] object_ids, float midpointMjdTai_start) |

| None | _storeInsertId (self, ApdbInsertId insert_id, astropy.time.Time visit_time, sqlalchemy.engine.Connection connection) |

| None | _storeDiaObjects (self, pandas.DataFrame objs, astropy.time.Time visit_time, ApdbInsertId|None insert_id, sqlalchemy.engine.Connection connection) |

| None | _storeDiaSources (self, pandas.DataFrame sources, ApdbInsertId|None insert_id, sqlalchemy.engine.Connection connection) |

| None | _storeDiaForcedSources (self, pandas.DataFrame sources, ApdbInsertId|None insert_id, sqlalchemy.engine.Connection connection) |

| list[tuple[int, int]] | _htm_indices (self, Region region) |

| sql.ColumnElement | _filterRegion (self, sqlalchemy.schema.Table table, Region region) |

| pandas.DataFrame | _add_obj_htm_index (self, pandas.DataFrame df) |

| pandas.DataFrame | _add_src_htm_index (self, pandas.DataFrame sources, pandas.DataFrame objs) |

Protected Attributes | |

| _engine | |

| _metadata | |

| _schema | |

Static Protected Attributes | |

| tuple | _frozen_parameters |

Detailed Description

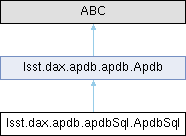

Implementation of APDB interface based on SQL database.

The implementation is configured via the standard ``pex_config`` mechanism

using the `ApdbSqlConfig` configuration class. For examples of different

configurations, check the ``config/`` folder.

Parameters

----------

config : `ApdbSqlConfig`

Configuration object.

Definition at line 185 of file apdbSql.py.

Constructor & Destructor Documentation

◆ __init__()

| lsst.dax.apdb.apdbSql.ApdbSql.__init__ | ( | self, | |

| ApdbSqlConfig | config ) |

Definition at line 218 of file apdbSql.py.

Member Function Documentation

◆ _add_obj_htm_index()

|

protected |

Calculate HTM index for each record and add it to a DataFrame.

Notes

-----

This overrides any existing column in a DataFrame with the same name

(pixelId). The original DataFrame is not changed; a copy of the DataFrame

is returned.

Definition at line 1147 of file apdbSql.py.

◆ _add_src_htm_index()

|

protected |

Add pixelId column to DiaSource catalog.

Notes

-----

This method copies the pixelId value from a matching DiaObject record.

The DiaObject catalog needs to have a pixelId column filled by the

``_add_obj_htm_index`` method, and DiaSource records need to be associated

with DiaObjects via the ``diaObjectId`` column.

This overrides any existing column in a DataFrame with the same name

(pixelId). The original DataFrame is not changed; a copy of the DataFrame

is returned.

Definition at line 1167 of file apdbSql.py.
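
The copy-from-matching-object behaviour described in the notes can be illustrated with a minimal sketch. It uses plain dictionaries in place of DataFrames so that it runs without pandas; the function name `add_src_pixel_id` and the `diaSourceId` key are hypothetical and are not part of `ApdbSql`.

```python
def add_src_pixel_id(sources, objects):
    """Return new source records with pixelId copied from the matching object.

    Hypothetical helper: records are dicts standing in for DataFrame rows.
    """
    pixel_by_object = {obj["diaObjectId"]: obj["pixelId"] for obj in objects}
    # Build new dicts so that the caller's records are left untouched.
    return [
        {**src, "pixelId": pixel_by_object[src["diaObjectId"]]}
        for src in sources
    ]


objects = [
    {"diaObjectId": 1, "pixelId": 8001},
    {"diaObjectId": 2, "pixelId": 8002},
]
sources = [
    {"diaSourceId": 10, "diaObjectId": 1},
    {"diaSourceId": 11, "diaObjectId": 2, "pixelId": -1},  # stale value is overridden
]
indexed = add_src_pixel_id(sources, objects)
```

The input list `sources` keeps its original contents, mirroring the "copy is returned" guarantee stated above.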

◆ _filterRegion()

|

protected |

Make SQLAlchemy expression for selecting records in a region.

Definition at line 1133 of file apdbSql.py.

◆ _get_history()

|

protected |

Return catalog of records for given insert identifiers, common implementation for all DIA tables.

Definition at line 645 of file apdbSql.py.

◆ _getDiaSourcesByIDs()

|

protected |

Return catalog of DiaSource instances given set of DiaObject IDs.

Parameters

----------

object_ids :

Collection of DiaObject IDs

visit_time : `astropy.time.Time`

Time of the current visit.

Returns

-------

catalog : `pandas.DataFrame`

Catalog containing DiaSource records.

Definition at line 829 of file apdbSql.py.

◆ _getDiaSourcesInRegion()

|

protected |

Return catalog of DiaSource instances from given region.

Parameters

----------

region : `lsst.sphgeom.Region`

Region to search for DIASources.

visit_time : `astropy.time.Time`

Time of the current visit.

Returns

-------

catalog : `pandas.DataFrame`

Catalog containing DiaSource records.

Definition at line 793 of file apdbSql.py.

◆ _getSourcesByIDs()

|

protected |

Return catalog of DiaSource or DiaForcedSource instances given set

of DiaObject IDs.

Parameters

----------

table_enum : `ApdbTables`

Database table.

object_ids :

Collection of DiaObject IDs

midpointMjdTai_start : `float`

Earliest midpointMjdTai to retrieve.

Returns

-------

catalog : `pandas.DataFrame`

Catalog containing DiaSource records. `None` is returned if

``read_sources_months`` configuration parameter is set to 0 or

when ``object_ids`` is empty.

Definition at line 855 of file apdbSql.py.

◆ _htm_indices()

Generate a set of HTM indices covering specified region.

Parameters

----------

region : `lsst.sphgeom.Region`

Region that needs to be indexed.

Returns

-------

Sequence of ranges; each range is a tuple (minHtmID, maxHtmID).

Definition at line 1116 of file apdbSql.py.
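
A list of index ranges like the one returned here is typically turned into a filter on the HTM index column, which is the role of `_filterRegion`. The following is a minimal sketch using the standard-library `sqlite3` module rather than SQLAlchemy. It assumes that both ends of each range are inclusive and that the index column is named `pixelId`; `region_filter` is a hypothetical helper, not the library's code.

```python
import sqlite3


def region_filter(ranges, column="pixelId"):
    """Build a WHERE fragment and parameter list from (min, max) ranges.

    Assumption: both ends of every range are inclusive.
    """
    clauses, params = [], []
    for low, high in ranges:
        if low == high:
            clauses.append(f"({column} = ?)")
            params.append(low)
        else:
            clauses.append(f"({column} BETWEEN ? AND ?)")
            params.extend([low, high])
    # An empty range list must match nothing rather than everything.
    where = " OR ".join(clauses) if clauses else "0"
    return where, params


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE DiaObject (diaObjectId INTEGER, pixelId INTEGER)")
conn.executemany(
    "INSERT INTO DiaObject VALUES (?, ?)",
    [(1, 100), (2, 105), (3, 200), (4, 300)],
)
where, params = region_filter([(100, 110), (300, 300)])
rows = conn.execute(
    f"SELECT diaObjectId FROM DiaObject WHERE {where} ORDER BY diaObjectId",
    params,
).fetchall()
matched_ids = [row[0] for row in rows]
```

Because HTM ranges cover whole trixels, a filter of this kind can select records that lie slightly outside the requested region.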

◆ _makeEngine()

|

protected |

Make SQLAlchemy engine based on configured parameters.

Parameters

----------

config : `ApdbSqlConfig`

Configuration object.

Definition at line 266 of file apdbSql.py.

◆ _makeSchema()

|

protected |

Definition at line 457 of file apdbSql.py.

◆ _storeDiaForcedSources()

|

protected |

Store a set of DiaForcedSources from current visit.

Parameters

----------

sources : `pandas.DataFrame`

Catalog containing DiaForcedSource records

Definition at line 1083 of file apdbSql.py.

◆ _storeDiaObjects()

|

protected |

Store catalog of DiaObjects from current visit.

Parameters

----------

objs : `pandas.DataFrame`

Catalog with DiaObject records.

visit_time : `astropy.time.Time`

Time of the visit.

insert_id : `ApdbInsertId`

Insert identifier.

Definition at line 924 of file apdbSql.py.

◆ _storeDiaSources()

|

protected |

Store catalog of DiaSources from current visit.

Parameters

----------

sources : `pandas.DataFrame`

Catalog containing DiaSource records

Definition at line 1050 of file apdbSql.py.

◆ _storeInsertId()

|

protected |

Definition at line 914 of file apdbSql.py.

◆ _versionCheck()

|

protected |

Check schema version compatibility.

Definition at line 299 of file apdbSql.py.

◆ apdbImplementationVersion()

| VersionTuple lsst.dax.apdb.apdbSql.ApdbSql.apdbImplementationVersion | ( | cls | ) |

Return version number for current APDB implementation.

Returns

-------

version : `VersionTuple`

Version of the code defined in implementation class.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 332 of file apdbSql.py.

◆ apdbSchemaVersion()

| VersionTuple lsst.dax.apdb.apdbSql.ApdbSql.apdbSchemaVersion | ( | self | ) |

Return schema version number as defined in config file.

Returns

-------

version : `VersionTuple`

Version of the schema defined in schema config file.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 424 of file apdbSql.py.

◆ containsCcdVisit()

| bool lsst.dax.apdb.apdbSql.ApdbSql.containsCcdVisit | ( | self, | |

| int | ccdVisitId ) |

Test whether data for a given visit-detector is present in the APDB.

This method is a placeholder until `Apdb.containsVisitDetector` can

be implemented.

Parameters

----------

ccdVisitId : `int`

The packed ID of the visit-detector to search for.

Returns

-------

present : `bool`

`True` if some DiaSource records exist for the specified

observation, `False` otherwise.

Definition at line 567 of file apdbSql.py.

◆ containsVisitDetector()

| bool lsst.dax.apdb.apdbSql.ApdbSql.containsVisitDetector | ( | self, | |

| int | visit, | ||

| int | detector ) |

Test whether data for a given visit-detector is present in the APDB.

Parameters

----------

visit, detector : `int`

The ID of the visit-detector to search for.

Returns

-------

present : `bool`

`True` if some DiaObject, DiaSource, or DiaForcedSource records

exist for the specified observation, `False` otherwise.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 563 of file apdbSql.py.

◆ countUnassociatedObjects()

| int lsst.dax.apdb.apdbSql.ApdbSql.countUnassociatedObjects | ( | self | ) |

Return the number of DiaObjects that have only one DiaSource

associated with them.

Used as part of ap_verify metrics.

Returns

-------

count : `int`

Number of DiaObjects with exactly one associated DiaSource.

Notes

-----

This method can be very inefficient or slow in some implementations.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 770 of file apdbSql.py.
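
One way to express "DiaObjects with exactly one associated DiaSource" in SQL is sketched below with the standard-library `sqlite3` module. This is an illustration only: the table layout is reduced to two columns, `diaSourceId` is an assumed column name, and the real implementation may compute the count differently (for example from a precomputed counter column).

```python
import sqlite3


def count_single_source_objects(conn):
    """Count object IDs that appear on exactly one DiaSource row."""
    query = (
        "SELECT COUNT(*) FROM ("
        " SELECT diaObjectId FROM DiaSource"
        " WHERE diaObjectId IS NOT NULL"  # skip sources with no object link
        " GROUP BY diaObjectId HAVING COUNT(*) = 1"
        ")"
    )
    return conn.execute(query).fetchone()[0]


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE DiaSource (diaSourceId INTEGER PRIMARY KEY, diaObjectId INTEGER)"
)
conn.executemany(
    "INSERT INTO DiaSource VALUES (?, ?)",
    [(1, 100), (2, 100), (3, 200), (4, 300), (5, None)],
)
single_count = count_single_source_objects(conn)
```

Grouping over the whole source table is the kind of operation that makes this method slow on large databases, as the note above warns.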

◆ dailyJob()

| None lsst.dax.apdb.apdbSql.ApdbSql.dailyJob | ( | self | ) |

Implement daily activities like cleanup/vacuum.

What should be done during daily activities is determined by the specific

implementation.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 766 of file apdbSql.py.

◆ deleteInsertIds()

| None lsst.dax.apdb.apdbSql.ApdbSql.deleteInsertIds | ( | self, | |

| Iterable[ApdbInsertId] | ids ) |

Remove insert identifiers from the database.

Parameters

----------

ids : `iterable` [`ApdbInsertId`]

Insert identifiers, can include items returned from `getInsertIds`.

Notes

-----

This method causes Apdb to forget about specified identifiers. If there

are any auxiliary data associated with the identifiers, it is also

removed from database (but data in regular tables is not removed).

This method should be called after successful transfer of data from

APDB to PPDB to free space used by history.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 620 of file apdbSql.py.
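
The notes above imply a transfer loop: read the known insert identifiers, fetch the history for each, hand it to the PPDB, and only then delete the identifiers. A minimal sketch of that loop follows. It calls only the method names documented on this page; `replicate_chunks`, the `export` callback, and `FakeApdb` are hypothetical, and `FakeApdb` exists solely so the sketch can run without a database.

```python
def replicate_chunks(apdb, export):
    """Export history for every known insert ID, then release it.

    Returns the number of insert IDs processed.
    """
    ids = apdb.getInsertIds()
    if ids is None:
        return 0  # the database is not configured to store insert identifiers
    for insert_id in ids:
        chunk = [insert_id]
        export(
            apdb.getDiaObjectsHistory(chunk),
            apdb.getDiaSourcesHistory(chunk),
            apdb.getDiaForcedSourcesHistory(chunk),
        )
        # Delete only after the export succeeded, so a failure can be retried.
        apdb.deleteInsertIds(chunk)
    return len(ids)


class FakeApdb:
    """In-memory stand-in with the same method names, for illustration only."""

    def __init__(self, ids):
        self._ids = list(ids)

    def getInsertIds(self):
        return list(self._ids)

    def getDiaObjectsHistory(self, ids):
        return [("DiaObject", i) for i in ids]

    def getDiaSourcesHistory(self, ids):
        return [("DiaSource", i) for i in ids]

    def getDiaForcedSourcesHistory(self, ids):
        return [("DiaForcedSource", i) for i in ids]

    def deleteInsertIds(self, ids):
        drop = set(ids)
        self._ids = [i for i in self._ids if i not in drop]


exported = []
fake = FakeApdb(["a", "b"])
processed = replicate_chunks(fake, lambda *tables: exported.append(tables))
```

Deleting after a successful export keeps the history available for a retry if the transfer fails partway through.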

◆ getDiaForcedSources()

| pandas.DataFrame | None lsst.dax.apdb.apdbSql.ApdbSql.getDiaForcedSources | ( | self, | |

| Region | region, | ||

| Iterable[int] | None | object_ids, | ||

| astropy.time.Time | visit_time ) |

Return catalog of DiaForcedSource instances from a given region.

Parameters

----------

region : `lsst.sphgeom.Region`

Region to search for DiaForcedSources.

object_ids : iterable [ `int` ], optional

List of DiaObject IDs to further constrain the set of returned

sources. If list is empty then empty catalog is returned with a

correct schema. If `None` then returned sources are not

constrained. Some implementations may not support latter case.

visit_time : `astropy.time.Time`

Time of the current visit.

Returns

-------

catalog : `pandas.DataFrame`, or `None`

Catalog containing DiaForcedSource records. `None` is returned if

``read_forced_sources_months`` configuration parameter is set to 0.

Raises

------

NotImplementedError

May be raised by some implementations if ``object_ids`` is `None`.

Notes

-----

This method returns DiaForcedSource catalog for a region with

additional filtering based on DiaObject IDs. Only a subset of DiaForcedSource

history is returned limited by ``read_forced_sources_months`` config

parameter, w.r.t. ``visit_time``. If ``object_ids`` is empty then an

empty catalog is always returned with the correct schema

(columns/types). If ``object_ids`` is `None` then no filtering is

performed and some of the returned records may be outside the specified

region.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 538 of file apdbSql.py.

◆ getDiaForcedSourcesHistory()

| ApdbTableData lsst.dax.apdb.apdbSql.ApdbSql.getDiaForcedSourcesHistory | ( | self, | |

| Iterable[ApdbInsertId] | ids ) |

Return catalog of DiaForcedSource instances from a given time

period.

Parameters

----------

ids : `iterable` [`ApdbInsertId`]

Insert identifiers, can include items returned from `getInsertIds`.

Returns

-------

data : `ApdbTableData`

Catalog containing DiaForcedSource records. In addition to all

regular columns it will contain ``insert_id`` column.

Notes

-----

This part of API may not be very stable and can change before the

implementation finalizes.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 641 of file apdbSql.py.

◆ getDiaObjects()

| pandas.DataFrame lsst.dax.apdb.apdbSql.ApdbSql.getDiaObjects | ( | self, | |

| Region | region ) |

Return catalog of DiaObject instances from a given region.

This method returns only the last version of each DiaObject. Some

records in a returned catalog may be outside the specified region; it

is up to the client to ignore those records or clean up the catalog before

further use.

Parameters

----------

region : `lsst.sphgeom.Region`

Region to search for DIAObjects.

Returns

-------

catalog : `pandas.DataFrame`

Catalog containing DiaObject records for a region that may be a

superset of the specified region.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 493 of file apdbSql.py.
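
Since the returned catalog may cover a superset of the requested region, a client usually applies its own exact cut afterwards. The sketch below shows one such cut for a circular region, using only the standard library. The column names `ra` and `dec` (in degrees), the dict-based records, and both helper functions are assumptions made for illustration.

```python
import math


def angular_separation_deg(ra1, dec1, ra2, dec2):
    """Great-circle separation in degrees (haversine formula), inputs in degrees."""
    ra1, dec1, ra2, dec2 = map(math.radians, (ra1, dec1, ra2, dec2))
    a = (
        math.sin((dec2 - dec1) / 2) ** 2
        + math.cos(dec1) * math.cos(dec2) * math.sin((ra2 - ra1) / 2) ** 2
    )
    # Clamp to 1.0 to guard against rounding slightly above the asin domain.
    return math.degrees(2 * math.asin(min(1.0, math.sqrt(a))))


def keep_inside_circle(records, center_ra, center_dec, radius_deg):
    """Return only the records within radius_deg of the circle centre."""
    return [
        rec
        for rec in records
        if angular_separation_deg(rec["ra"], rec["dec"], center_ra, center_dec)
        <= radius_deg
    ]


catalog = [
    {"diaObjectId": 1, "ra": 10.0, "dec": 0.0},
    {"diaObjectId": 2, "ra": 10.5, "dec": 0.0},
    {"diaObjectId": 3, "ra": 12.0, "dec": 0.0},  # outside a 1-degree circle
]
inside = keep_inside_circle(catalog, center_ra=10.0, center_dec=0.0, radius_deg=1.0)
```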

◆ getDiaObjectsHistory()

| ApdbTableData lsst.dax.apdb.apdbSql.ApdbSql.getDiaObjectsHistory | ( | self, | |

| Iterable[ApdbInsertId] | ids ) |

Return catalog of DiaObject instances from a given time period

including the history of each DiaObject.

Parameters

----------

ids : `iterable` [`ApdbInsertId`]

Insert identifiers, can include items returned from `getInsertIds`.

Returns

-------

data : `ApdbTableData`

Catalog containing DiaObject records. In addition to all regular

columns it will contain ``insert_id`` column.

Notes

-----

This part of API may not be very stable and can change before the

implementation finalizes.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 633 of file apdbSql.py.

◆ getDiaSources()

| pandas.DataFrame | None lsst.dax.apdb.apdbSql.ApdbSql.getDiaSources | ( | self, | |

| Region | region, | ||

| Iterable[int] | None | object_ids, | ||

| astropy.time.Time | visit_time ) |

Return catalog of DiaSource instances from a given region.

Parameters

----------

region : `lsst.sphgeom.Region`

Region to search for DIASources.

object_ids : iterable [ `int` ], optional

List of DiaObject IDs to further constrain the set of returned

sources. If `None` then returned sources are not constrained. If

list is empty then empty catalog is returned with a correct

schema.

visit_time : `astropy.time.Time`

Time of the current visit.

Returns

-------

catalog : `pandas.DataFrame`, or `None`

Catalog containing DiaSource records. `None` is returned if

``read_sources_months`` configuration parameter is set to 0.

Notes

-----

This method returns DiaSource catalog for a region with additional

filtering based on DiaObject IDs. Only a subset of DiaSource history

is returned limited by ``read_sources_months`` config parameter, w.r.t.

``visit_time``. If ``object_ids`` is empty then an empty catalog is

always returned with the correct schema (columns/types). If

``object_ids`` is `None` then no filtering is performed and some of the

returned records may be outside the specified region.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 524 of file apdbSql.py.
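
The three cases for ``object_ids`` and the ``read_sources_months`` cutoff can be summarised as a small decision function. This is a sketch of the documented behaviour, not the library's code: `plan_source_query` is hypothetical, the visit time is passed as an MJD float, and the conversion of one month to 30 days is an assumption.

```python
def plan_source_query(read_months, object_ids, visit_mjd, days_per_month=30):
    """Describe what a getDiaSources-style call would do, without a database.

    Assumption: a month is approximated as ``days_per_month`` days.
    """
    if read_months == 0:
        return None  # reading source history is disabled by configuration
    start_mjd = visit_mjd - read_months * days_per_month
    if object_ids is None:
        # No ID constraint: select by region only.
        return {"mode": "region", "start_mjd": start_mjd}
    ids = list(object_ids)
    if not ids:
        # Empty ID list: an empty catalog with the correct schema.
        return {"mode": "empty", "start_mjd": start_mjd}
    return {"mode": "by_ids", "ids": ids, "start_mjd": start_mjd}


disabled = plan_source_query(0, [1], 60000.0)
by_region = plan_source_query(12, None, 60000.0)
empty = plan_source_query(12, [], 60000.0)
by_ids = plan_source_query(1, iter([5, 6]), 60000.0)
```

The "region" and "by_ids" branches correspond to the protected `_getDiaSourcesInRegion` and `_getDiaSourcesByIDs` methods listed on this page.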

◆ getDiaSourcesHistory()

| ApdbTableData lsst.dax.apdb.apdbSql.ApdbSql.getDiaSourcesHistory | ( | self, | |

| Iterable[ApdbInsertId] | ids ) |

Return catalog of DiaSource instances from a given time period.

Parameters

----------

ids : `iterable` [`ApdbInsertId`]

Insert identifiers, can include items returned from `getInsertIds`.

Returns

-------

data : `ApdbTableData`

Catalog containing DiaSource records. In addition to all regular

columns it will contain ``insert_id`` column.

Notes

-----

This part of API may not be very stable and can change before the

implementation finalizes.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 637 of file apdbSql.py.

◆ getInsertIds()

| list[ApdbInsertId] | None lsst.dax.apdb.apdbSql.ApdbSql.getInsertIds | ( | self | ) |

Return collection of insert identifiers known to the database.

Returns

-------

ids : `list` [`ApdbInsertId`] or `None`

List of identifiers; they may be time-ordered if the database supports

ordering. `None` is returned if the database is not configured to store

insert identifiers.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 601 of file apdbSql.py.

◆ getSSObjects()

| pandas.DataFrame lsst.dax.apdb.apdbSql.ApdbSql.getSSObjects | ( | self | ) |

Return catalog of SSObject instances.

Returns

-------

catalog : `pandas.DataFrame`

Catalog containing SSObject records, all existing records are

returned.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 673 of file apdbSql.py.

◆ init_database()

| ApdbSqlConfig lsst.dax.apdb.apdbSql.ApdbSql.init_database | ( | cls, | |

| str | db_url, | ||

| *str | None | schema_file = None, | ||

| str | None | schema_name = None, | ||

| int | None | read_sources_months = None, | ||

| int | None | read_forced_sources_months = None, | ||

| bool | use_insert_id = False, | ||

| int | None | connection_timeout = None, | ||

| str | None | dia_object_index = None, | ||

| int | None | htm_level = None, | ||

| str | None | htm_index_column = None, | ||

| list[str] | None | ra_dec_columns = None, | ||

| str | None | prefix = None, | ||

| str | None | namespace = None, | ||

| bool | drop = False ) |

Initialize new APDB instance and make configuration object for it.

Parameters

----------

db_url : `str`

SQLAlchemy database URL.

schema_file : `str`, optional

Location of (YAML) configuration file with APDB schema. If not

specified then default location will be used.

schema_name : `str`, optional

Name of the schema in YAML configuration file. If not specified

then default name will be used.

read_sources_months : `int`, optional

Number of months of history to read from DiaSource.

read_forced_sources_months : `int`, optional

Number of months of history to read from DiaForcedSource.

use_insert_id : `bool`

If True, make additional tables used for replication to PPDB.

connection_timeout : `int`, optional

Database connection timeout in seconds.

dia_object_index : `str`, optional

Indexing mode for DiaObject table.

htm_level : `int`, optional

HTM indexing level.

htm_index_column : `str`, optional

Name of a HTM index column for DiaObject and DiaSource tables.

ra_dec_columns : `list` [`str`], optional

Names of ra/dec columns in DiaObject table.

prefix : `str`, optional

Optional prefix for all table names.

namespace : `str`, optional

Name of the database schema for all APDB tables. If not specified

then default schema is used.

drop : `bool`, optional

If `True` then drop existing tables before re-creating the schema.

Returns

-------

config : `ApdbSqlConfig`

Resulting configuration object for a created APDB instance.

Definition at line 337 of file apdbSql.py.

◆ metadata()

| ApdbMetadata lsst.dax.apdb.apdbSql.ApdbSql.metadata | ( | self | ) |

Object controlling access to APDB metadata (`ApdbMetadata`).

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 787 of file apdbSql.py.

◆ reassignDiaSources()

| None lsst.dax.apdb.apdbSql.ApdbSql.reassignDiaSources | ( | self, | |

| Mapping[int, int] | idMap ) |

Associate DiaSources with SSObjects, dis-associating them

from DiaObjects.

Parameters

----------

idMap : `Mapping`

Maps DiaSource IDs to their new SSObject IDs.

Raises

------

ValueError

Raised if DiaSource ID does not exist in the database.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 746 of file apdbSql.py.
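
The contract described above (set the SSObject link, clear the DiaObject link, fail on unknown IDs) can be sketched with the standard-library `sqlite3` module. The column names `diaSourceId` and `ssObjectId` and the function `reassign_dia_sources` are assumptions made for this illustration; the actual implementation uses SQLAlchemy and may differ in detail.

```python
import sqlite3


def reassign_dia_sources(conn, id_map):
    """Point each DiaSource at an SSObject and clear its DiaObject link."""
    with conn:  # commit on success, roll back if any ID is missing
        for dia_source_id, ss_object_id in id_map.items():
            cur = conn.execute(
                "UPDATE DiaSource SET ssObjectId = ?, diaObjectId = NULL"
                " WHERE diaSourceId = ?",
                (ss_object_id, dia_source_id),
            )
            if cur.rowcount != 1:
                raise ValueError(f"DiaSource {dia_source_id} does not exist")


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE DiaSource ("
    "diaSourceId INTEGER PRIMARY KEY, diaObjectId INTEGER, ssObjectId INTEGER)"
)
conn.executemany("INSERT INTO DiaSource VALUES (?, ?, NULL)", [(10, 1), (11, 1)])
conn.commit()

reassign_dia_sources(conn, {10: 900})
rows = conn.execute(
    "SELECT diaSourceId, diaObjectId, ssObjectId FROM DiaSource ORDER BY diaSourceId"
).fetchall()
```

Running all updates in one transaction means a missing ID leaves the table unchanged, which is a design choice of this sketch rather than a documented guarantee.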

◆ store()

| None lsst.dax.apdb.apdbSql.ApdbSql.store | ( | self, | |

| astropy.time.Time | visit_time, | ||

| pandas.DataFrame | objects, | ||

| pandas.DataFrame | None | sources = None, | ||

| pandas.DataFrame | None | forced_sources = None ) |

Store all three types of catalogs in the database.

Parameters

----------

visit_time : `astropy.time.Time`

Time of the visit.

objects : `pandas.DataFrame`

Catalog with DiaObject records.

sources : `pandas.DataFrame`, optional

Catalog with DiaSource records.

forced_sources : `pandas.DataFrame`, optional

Catalog with DiaForcedSource records.

Notes

-----

This method takes DataFrame catalogs whose schema must be

compatible with the schema of the APDB tables:

- column names must correspond to database table columns

- types and units of the columns must match database definitions,

no unit conversion is performed presently

- columns that have default values in database schema can be

omitted from catalog

- this method knows how to fill interval-related columns of DiaObject

(validityStart, validityEnd), so they do not need to appear in a

catalog

- source catalogs have ``diaObjectId`` column associating sources

with objects

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 686 of file apdbSql.py.

◆ storeSSObjects()

| None lsst.dax.apdb.apdbSql.ApdbSql.storeSSObjects | ( | self, | |

| pandas.DataFrame | objects ) |

Store or update SSObject catalog.

Parameters

----------

objects : `pandas.DataFrame`

Catalog with SSObject records.

Notes

-----

If SSObjects with matching IDs already exist in the database, their

records will be updated with the information from provided records.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 714 of file apdbSql.py.
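
The store-or-update behaviour in the notes amounts to an upsert keyed on the SSObject ID. A minimal sketch with the standard-library `sqlite3` module is shown below. The `numObs` column, the dict-based records, and the function `store_ss_objects` are hypothetical and stand in for the full SSObject schema and the DataFrame input.

```python
import sqlite3


def store_ss_objects(conn, records):
    """Insert new SSObject rows and update rows whose ID already exists."""
    if not records:
        return
    ids = [rec["ssObjectId"] for rec in records]
    marks = ",".join("?" * len(ids))
    existing = {
        row[0]
        for row in conn.execute(
            f"SELECT ssObjectId FROM SSObject WHERE ssObjectId IN ({marks})", ids
        )
    }
    with conn:  # one transaction for the whole catalog
        for rec in records:
            if rec["ssObjectId"] in existing:
                conn.execute(
                    "UPDATE SSObject SET numObs = ? WHERE ssObjectId = ?",
                    (rec["numObs"], rec["ssObjectId"]),
                )
            else:
                conn.execute(
                    "INSERT INTO SSObject (ssObjectId, numObs) VALUES (?, ?)",
                    (rec["ssObjectId"], rec["numObs"]),
                )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE SSObject (ssObjectId INTEGER PRIMARY KEY, numObs INTEGER)")
conn.execute("INSERT INTO SSObject VALUES (1, 5)")
conn.commit()

store_ss_objects(
    conn,
    [{"ssObjectId": 1, "numObs": 7}, {"ssObjectId": 2, "numObs": 3}],
)
stored = conn.execute(
    "SELECT ssObjectId, numObs FROM SSObject ORDER BY ssObjectId"
).fetchall()
```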

◆ tableDef()

| Table | None lsst.dax.apdb.apdbSql.ApdbSql.tableDef | ( | self, | |

| ApdbTables | table ) |

Return table schema definition for a given table.

Parameters

----------

table : `ApdbTables`

One of the known APDB tables.

Returns

-------

tableSchema : `felis.simple.Table` or `None`

Table schema description, `None` is returned if table is not

defined by this implementation.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 452 of file apdbSql.py.

◆ tableRowCount()

| dict[str, int] lsst.dax.apdb.apdbSql.ApdbSql.tableRowCount | ( | self | ) |

Return dictionary with the table names and row counts.

Used by ``ap_proto`` to keep track of the size of the database tables.

Depending on the database technology this could be an expensive operation.

Returns

-------

row_counts : `dict`

Dict where key is a table name and value is a row count.

Definition at line 428 of file apdbSql.py.

Member Data Documentation

◆ _engine

|

protected |

Definition at line 219 of file apdbSql.py.

◆ _frozen_parameters

|

staticprotected |

Definition at line 209 of file apdbSql.py.

◆ _metadata

|

protected |

Definition at line 227 of file apdbSql.py.

◆ _schema

|

protected |

Definition at line 239 of file apdbSql.py.

◆ config

| lsst.dax.apdb.apdbSql.ApdbSql.config |

Definition at line 234 of file apdbSql.py.

◆ ConfigClass

|

static |

Definition at line 198 of file apdbSql.py.

◆ metadataCodeVersionKey [1/2]

|

static |

Definition at line 203 of file apdbSql.py.

◆ metadataCodeVersionKey [2/2]

| lsst.dax.apdb.apdbSql.ApdbSql.metadataCodeVersionKey |

Definition at line 487 of file apdbSql.py.

◆ metadataConfigKey [1/2]

|

static |

Definition at line 206 of file apdbSql.py.

◆ metadataConfigKey [2/2]

| lsst.dax.apdb.apdbSql.ApdbSql.metadataConfigKey |

Definition at line 491 of file apdbSql.py.

◆ metadataSchemaVersionKey [1/2]

|

static |

Definition at line 200 of file apdbSql.py.

◆ metadataSchemaVersionKey [2/2]

| lsst.dax.apdb.apdbSql.ApdbSql.metadataSchemaVersionKey |

Definition at line 486 of file apdbSql.py.

◆ pixelator

| lsst.dax.apdb.apdbSql.ApdbSql.pixelator |

Definition at line 253 of file apdbSql.py.

◆ use_insert_id

| lsst.dax.apdb.apdbSql.ApdbSql.use_insert_id |

Definition at line 254 of file apdbSql.py.

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/lsst-scipipe-8.0.0/Linux64/dax_apdb/gd2a12a3803+f8351bc914/python/lsst/dax/apdb/apdbSql.py