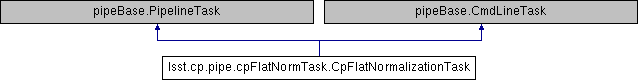

Inheritance diagram for lsst.cp.pipe.cpFlatNormTask.CpFlatNormalizationTask:

Public Member Functions

| def | runQuantum (self, butlerQC, inputRefs, outputRefs) |

| def | run (self, inputMDs, inputDims, camera) |

| def | measureScales (self, bgMatrix, bgCounts=None, iterations=10) |

Static Public Attributes

| ConfigClass = CpFlatNormalizationTaskConfig |

Detailed Description

Rescale merged flat frames to remove unequal screen illumination.

Definition at line 182 of file cpFlatNormTask.py.

Member Function Documentation

◆ measureScales()

def lsst.cp.pipe.cpFlatNormTask.CpFlatNormalizationTask.measureScales (self, bgMatrix, bgCounts=None, iterations=10)

Convert backgrounds to exposure and detector components.

Parameters

----------

bgMatrix : `np.ndarray`, (nDetectors, nExposures)

Input backgrounds indexed by exposure (axis=0) and

detector (axis=1).

bgCounts : `np.ndarray`, (nDetectors, nExposures), optional

Input pixel counts used in measuring bgMatrix, indexed

identically.

iterations : `int`, optional

Number of iterations to use in decomposition.

Returns

-------

scaleResult : `lsst.pipe.base.Struct`

Result struct containing fields:

``vectorE``

Output E vector of exposure level scalings

(`np.array`, (nExposures)).

``vectorG``

Output G vector of detector level scalings

(`np.array`, (nDetectors)).

``bgModel``

Expected model bgMatrix values, calculated from E and G

(`np.ndarray`, (nDetectors, nExposures)).

Notes

-----

The set of background measurements B[exposure, detector] of

flat frame data should be described by the outer product of

two vectors, E[exposure] and G[detector]. The E vector

represents the total flux incident on the focal plane. In a

perfect camera, this is simply the sum along the columns of B

(np.sum(B, axis=0)).

However, this simple model ignores differences in detector

gains, the vignetting of the detectors, and the illumination

pattern of the source lamp. The G vector describes these

detector dependent differences, which should be identical over

different exposures. For a perfect lamp of unit total

intensity, this is simply the sum along the rows of B

(np.sum(B, axis=1)). This algorithm divides G by the total

flux level, to provide the relative (not absolute) scales

between detectors.
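Written out, the model described above can be sketched as follows. The index names e (exposure) and d (detector) and the symbol F for the overall flux level are notation assumed here for illustration; they do not appear in the source.

```latex
B_{e,d} = E_e \, G_d ,
\qquad
\sum_{d} B_{e,d} = E_e \sum_{d} G_d ,
\qquad
\sum_{e} B_{e,d} = G_d \sum_{e} E_e .

% The product is unchanged by moving a common factor F between the vectors,
% so only the relative detector scales G_d / F are meaningful:
E_e \, G_d = (F \, E_e) \left( \frac{G_d}{F} \right).
```

The second line is why a normalization of G is needed at all: without fixing F, the decomposition has no unique solution.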

The algorithm here is adapted from pipe_drivers/constructCalibs.py,

which in turn follows Eugene Magnier/PanSTARRS [1]_. It attempts to

solve this decomposition iteratively, starting from the initial

"perfect" E and G vectors. The operation is performed in log space,

which turns the multiplications and divisions into additions and

subtractions.
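The iteration described above can be sketched in NumPy as follows. This is a minimal illustration, not the code in cpFlatNormTask.py: the function name `decompose_backgrounds` is hypothetical, the matrix is assumed to be laid out as bg[exposure, detector] as in the Notes, the flux level is taken as the unweighted mean of G, and no masking of non-positive backgrounds is attempted.

```python
import numpy as np


def decompose_backgrounds(bg, counts=None, iterations=10):
    """Split bg[exposure, detector] into E[exposure] * G[detector].

    Hypothetical helper: alternating weighted means in log space, with
    G renormalized on every pass so only relative detector scales remain.
    """
    bg = np.asarray(bg, dtype=float)
    if counts is None:
        counts = np.ones_like(bg)  # equal weights when no pixel counts are given
    log_b = np.log(bg)

    # Initial "perfect" guess: G = 1, E = weighted mean level of each exposure.
    log_g = np.zeros(bg.shape[1])
    log_e = np.average(log_b, axis=1, weights=counts)

    for _ in range(iterations):
        # Update G from what is left after subtracting the exposure term.
        log_g = np.average(log_b - log_e[:, np.newaxis], axis=0, weights=counts)
        # Update E from what is left after subtracting the detector term.
        log_e = np.average(log_b - log_g[np.newaxis, :], axis=1, weights=counts)
        # Move the overall flux level out of G and into E (assumed normalization).
        log_flux = np.log(np.mean(np.exp(log_g)))
        log_g -= log_flux
        log_e += log_flux

    e_vec, g_vec = np.exp(log_e), np.exp(log_g)
    return e_vec, g_vec, np.outer(e_vec, g_vec)


# Synthetic, noise-free input built from known vectors (made-up values).
true_e = np.array([100.0, 200.0, 400.0])
true_g = np.array([0.8, 1.0, 1.2])  # mean is 1, matching the normalization
bg = np.outer(true_e, true_g)
e_vec, g_vec, model = decompose_backgrounds(bg)
```

In log space each update is a plain weighted average of differences, which is the simplification the Notes refer to. On noise-free input that is exactly an outer product, the vectors used to build it are recovered.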

References

----------

.. [1] https://svn.pan-starrs.ifa.hawaii.edu/trac/ipp/browser/trunk/psModules/src/detrend/pmFlatNormalize.c

Definition at line 316 of file cpFlatNormTask.py.

◆ run()

def lsst.cp.pipe.cpFlatNormTask.CpFlatNormalizationTask.run (self, inputMDs, inputDims, camera)

Normalize FLAT exposures to a consistent level.

Parameters

----------

inputMDs : `list` [`lsst.daf.base.PropertyList`]

Amplifier-level metadata used to construct scales.

inputDims : `list` [`dict`]

List of dictionaries of input data dimensions/values.

Each list entry should contain:

``"exposure"``

exposure id value (`int`)

``"detector"``

detector id value (`int`)

Returns

-------

outputScales : `dict` [`dict` [`dict` [`float`]]]

Dictionary of scales, indexed by detector (`int`),

amplifier (`int`), and exposure (`int`).

Raises

------

KeyError

Raised if the input dimensions do not contain detector and

exposure, or if the metadata does not contain the expected

statistic entry.
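As a rough illustration of the input and output shapes documented above, the sketch below builds a list shaped like inputDims and a nested dictionary indexed by detector, amplifier, and exposure. The helper names (`collect_ids`, `build_scales`, `scale_for`), the id values, and the amplifier list are all hypothetical; the real task derives its scales from the input metadata.

```python
from collections import defaultdict

# Hypothetical input dimensions, one dictionary per input metadata object.
input_dims = [
    {"exposure": 1001, "detector": 0},
    {"exposure": 1001, "detector": 1},
    {"exposure": 1002, "detector": 0},
    {"exposure": 1002, "detector": 1},
]


def collect_ids(dims):
    """Return sorted exposure and detector ids.

    A missing "exposure" or "detector" key raises KeyError.
    """
    exposures = sorted({d["exposure"] for d in dims})
    detectors = sorted({d["detector"] for d in dims})
    return exposures, detectors


def build_scales(dims, amplifiers, scale_for):
    """Fill scales[detector][amplifier][exposure] with float values."""
    scales = defaultdict(lambda: defaultdict(dict))
    for d in dims:
        det, exp = d["detector"], d["exposure"]
        for amp in amplifiers:
            scales[det][amp][exp] = float(scale_for(det, amp, exp))
    return scales


exposures, detectors = collect_ids(input_dims)
# Placeholder scale of 1.0 everywhere; two made-up amplifier ids per detector.
output_scales = build_scales(input_dims, amplifiers=[0, 1],
                             scale_for=lambda det, amp, exp: 1.0)
```

A lookup then reads `output_scales[detector][amplifier][exposure]`, following the index order given under Returns.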

Definition at line 200 of file cpFlatNormTask.py.

outputScales = defaultdict(lambda: defaultdict(lambda: defaultdict(lambda: defaultdict(float))))


◆ runQuantum()

| def lsst.cp.pipe.cpFlatNormTask.CpFlatNormalizationTask.runQuantum | ( | self, | |

| butlerQC, | |||

| inputRefs, | |||

| outputRefs | |||

| ) |

Definition at line 189 of file cpFlatNormTask.py.

Member Data Documentation

◆ ConfigClass

| ConfigClass = CpFlatNormalizationTaskConfig | static |

Definition at line 186 of file cpFlatNormTask.py.

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/lsst-scipipe-0.6.0/Linux64/cp_pipe/22.0.1-18-gb17765a+2264247a6b/python/lsst/cp/pipe/cpFlatNormTask.py