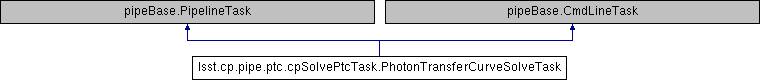

Inheritance diagram for lsst.cp.pipe.ptc.cpSolvePtcTask.PhotonTransferCurveSolveTask:

Public Member Functions

| Kind | Member function |
|---|---|
| def | runQuantum (self, butlerQC, inputRefs, outputRefs) |
| def | run (self, inputCovariances, camera=None, inputExpList=None) |
| def | fitCovariancesAstier (self, dataset) |
| def | getOutputPtcDataCovAstier (self, dataset, covFits, covFitsNoB) |
| def | fitPtc (self, dataset) |
| def | fillBadAmp (self, dataset, ptcFitType, ampName) |

Static Public Attributes

| Static attribute |
|---|
| ConfigClass = PhotonTransferCurveSolveConfig |

Detailed Description

Task to fit the PTC from flat covariances. This task assembles the list of individual PTC datasets produced by `PhotonTransferCurveExtractTask` into a single final PTC dataset. The task fits the measured (co)variances to a polynomial model or to the models described in equations 16 and 20 of Astier+19 (referred to as `POLYNOMIAL`, `EXPAPPROXIMATION`, and `FULLCOVARIANCE`, respectively, in the task's configuration options). Parameters of interest such as the gain and noise are derived from the fits.

Astier+19: "The Shape of the Photon Transfer Curve of CCD sensors", arXiv:1905.08677
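For orientation, the `EXPAPPROXIMATION` model (Eq. 16 of Astier+19) can be written as a short NumPy function. This is an illustrative sketch, not the task's implementation: the function and argument names are hypothetical, and it assumes the mean signal is in ADU, the gain in e-/ADU, and the noise term in e-^2. It also checks the expected limit: as `a00` goes to zero the model reduces to the straight line `mu / gain + noise_sq / gain**2`, while a negative `a00` (the sign expected from the brighter-fatter effect) bends the curve below that line.

```python
import numpy as np


def ptc_exp_approximation(mu, a00, gain, noise_sq):
    """Variance model of Astier+19 Eq. 16 (the EXPAPPROXIMATION case).

    mu       : mean signal (ADU)
    a00      : pixel-on-itself brighter-fatter coefficient (1/e-)
    gain     : gain (e-/ADU)
    noise_sq : noise term (e-^2)
    Returns the predicted variance C00 (ADU^2).
    """
    mu = np.asarray(mu, dtype=float)
    # expm1 keeps precision when 2 * a00 * mu * gain is close to zero.
    return 0.5 / (a00 * gain**2) * np.expm1(2.0 * a00 * mu * gain) + noise_sq / gain**2


def ptc_linear(mu, gain, noise_sq):
    """Pure shot-noise PTC plus a constant read-noise floor."""
    return np.asarray(mu, dtype=float) / gain + noise_sq / gain**2


mu = np.linspace(1e3, 1e5, 5)
# A tiny |a00| recovers the linear PTC; a negative a00 bends the curve below it.
print(ptc_exp_approximation(mu, -1e-10, 1.5, 25.0) / ptc_linear(mu, 1.5, 25.0))
print(np.all(ptc_exp_approximation(mu, -2e-6, 1.5, 25.0) < ptc_linear(mu, 1.5, 25.0)))
```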

Definition at line 158 of file cpSolvePtcTask.py.

Member Function Documentation

◆ fillBadAmp()

`def lsst.cp.pipe.ptc.cpSolvePtcTask.PhotonTransferCurveSolveTask.fillBadAmp(self, dataset, ptcFitType, ampName)`

Fill the dataset with NaNs if there are not enough good points.

Parameters

----------

dataset : `lsst.ip.isr.ptcDataset.PhotonTransferCurveDataset`

The dataset containing the means, variances and exposure times.

ptcFitType : `str`

Fit a 'POLYNOMIAL' (degree: 'polynomialFitDegree') or 'EXPAPPROXIMATION' (Eq. 16 of Astier+19) to the PTC.

ampName : `str`

Amplifier name.

Definition at line 678 of file cpSolvePtcTask.py.
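The NaN-filling step can be pictured with a plain dictionary standing in for the per-amplifier entries of `PhotonTransferCurveDataset`. Everything here is hypothetical: the dictionary keys, `n_fit_parameters`, and `fill_bad_amp` are illustrative names, not the dataset's attributes. The sketch only assumes that a `POLYNOMIAL` fit of degree d has d + 1 coefficients and that the Eq. 16 fit has three parameters.

```python
import numpy as np


def n_fit_parameters(ptc_fit_type, polynomial_fit_degree):
    """Number of fit parameters implied by the PTC fit type."""
    if ptc_fit_type == "POLYNOMIAL":
        return polynomial_fit_degree + 1
    if ptc_fit_type == "EXPAPPROXIMATION":
        return 3  # a00, gain, noise term (Eq. 16 of Astier+19)
    raise RuntimeError(f"Unknown ptcFitType: {ptc_fit_type!r}")


def fill_bad_amp(results, amp_name, ptc_fit_type, n_points, polynomial_fit_degree=2):
    """Record NaN fit outputs for an amplifier with too few good points.

    `results` is a hypothetical dict keyed by amplifier name; the default
    polynomial_fit_degree is illustrative, not the task's config default.
    """
    n_pars = n_fit_parameters(ptc_fit_type, polynomial_fit_degree)
    results[amp_name] = {
        "gain": np.nan,
        "gainErr": np.nan,
        "noise": np.nan,
        "noiseErr": np.nan,
        "fitPars": np.full(n_pars, np.nan),
        "fitParsErr": np.full(n_pars, np.nan),
        "modelVariance": np.full(n_points, np.nan),
        # No point is considered usable for this amplifier.
        "goodPointMask": np.zeros(n_points, dtype=bool),
    }
    return results


results = fill_bad_amp({}, "C10", "EXPAPPROXIMATION", n_points=8)
print(results["C10"]["fitPars"])
```

Filling with NaN rather than dropping the amplifier keeps every per-amplifier array the same shape, so downstream code can treat good and bad amplifiers uniformly.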

◆ fitCovariancesAstier()

`def lsst.cp.pipe.ptc.cpSolvePtcTask.PhotonTransferCurveSolveTask.fitCovariancesAstier(self, dataset)`

Fit measured flat covariances to full model in Astier+19.

Parameters

----------

dataset : `lsst.ip.isr.ptcDataset.PhotonTransferCurveDataset`

The dataset containing information such as the means, (co)variances, and exposure times.

Returns

-------

dataset : `lsst.ip.isr.ptcDataset.PhotonTransferCurveDataset`

This is the same dataset as the input parameter; however, it has been modified to include information such as the fit vectors and the fit parameters. See the class `PhotonTransferCurveDataset`.

Definition at line 280 of file cpSolvePtcTask.py.
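To see what the full model constrains, consider its leading order. For a neighboring-pixel lag (i, j) other than (0, 0), Eq. 20 of Astier+19 reduces to C_ij(mu) ≈ a_ij mu^2 + n_ij / g^2 once the higher-order terms in mu (where products of `a` and the `b` coefficients enter) are dropped. The sketch below is a simplified illustration under that truncation, not what `fitCovariancesAstier` does (the task fits the full model); the function names and synthetic values are hypothetical.

```python
import numpy as np


def leading_order_cov(mu, a_ij, n_ij, gain):
    """Leading-order Astier+19 covariance for a lag (i, j) != (0, 0)."""
    mu = np.asarray(mu, dtype=float)
    return a_ij * mu**2 + n_ij / gain**2


def estimate_a_ij(mu, cov):
    """Estimate a_ij as the mu**2 coefficient of a quadratic fit."""
    # np.polyfit returns coefficients from the highest power down.
    c2, _c1, _c0 = np.polyfit(mu, cov, 2)
    return c2


mu = np.linspace(1e3, 8e4, 15)
cov01 = leading_order_cov(mu, a_ij=3e-7, n_ij=0.5, gain=1.5)
print(estimate_a_ij(mu, cov01))
```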


◆ fitPtc()

`def lsst.cp.pipe.ptc.cpSolvePtcTask.PhotonTransferCurveSolveTask.fitPtc(self, dataset)`

Fit the photon transfer curve to a polynomial or to Astier+19 approximation.

Fit the photon transfer curve with either a polynomial of the order specified in the task config, or using the exponential approximation in Astier+19 (Eq. 16).

Sigma clipping is performed iteratively for the fit, preceded by an initial clipping of data points that are more than config.initialNonLinearityExclusionThreshold away from lying on a straight line. This initial step is necessary because the photon transfer curve turns over catastrophically at very high flux (saturation drops the variance to ~0); these far outliers make the initial fit fail, so the sigma needed for sigma clipping cannot be calculated.

Parameters

----------

dataset : `lsst.ip.isr.ptcDataset.PhotonTransferCurveDataset`

The dataset containing the means, variances and exposure times.

Returns

-------

dataset : `lsst.ip.isr.ptcDataset.PhotonTransferCurveDataset`

This is the same dataset as the input parameter; however, it has been modified to include information such as the fit vectors and the fit parameters. See the class `PhotonTransferCurveDataset`.

Raises

------

RuntimeError

Raised if dataset.ptcFitType is None or empty.

Definition at line 493 of file cpSolvePtcTask.py.
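The two clipping stages described for `fitPtc` can be sketched with NumPy. This is an approximation under stated assumptions, not the task's code: the initial cut compares each point's variance-to-mean ratio with the median ratio (one simple way to measure distance from a straight line through the origin), `numpy.polyfit` stands in for the task's fitter, the gain is read off as the inverse of the linear coefficient, and the function names and thresholds (`max_deviation`, `n_sigma`, `max_iter`) are hypothetical rather than the task's config fields.

```python
import numpy as np


def initial_good_points(mu, var, max_deviation):
    """Flag points whose var/mu ratio is within max_deviation of the median.

    For a linear PTC var/mu is roughly constant, so points past saturation,
    where the variance collapses toward zero, deviate strongly and are cut.
    """
    ratio = var / mu
    deviation = ratio / np.median(ratio) - 1.0
    return np.abs(deviation) < max_deviation


def sigma_clipped_polyfit(mu, var, degree, max_deviation=0.25, n_sigma=3.0, max_iter=10):
    """Fit var(mu) with a polynomial, iteratively rejecting outliers."""
    good = initial_good_points(mu, var, max_deviation)
    for _ in range(max_iter):
        coeffs = np.polyfit(mu[good], var[good], degree)
        resid = var - np.polyval(coeffs, mu)
        sigma = np.std(resid[good])
        # Points are only ever removed, never re-admitted.
        new_good = good & (np.abs(resid) <= n_sigma * sigma)
        if np.array_equal(new_good, good):
            break  # converged: coeffs were fitted to exactly these points
        good = new_good
    else:
        # Hit max_iter without converging: refit to the final mask.
        coeffs = np.polyfit(mu[good], var[good], degree)
    return coeffs, good


rng = np.random.default_rng(42)
mu = np.linspace(1e3, 1e5, 20)
var = mu / 1.5 + 10.0 + rng.normal(0.0, 2.0, mu.size)
var[-2:] = 50.0  # mimic the turnover at saturation
coeffs, good = sigma_clipped_polyfit(mu, var, degree=2)
print("gain ~", 1.0 / coeffs[-2], "kept", good.sum(), "of", good.size)
```

Without the ratio cut, the two saturated points would dominate the first polynomial fit and inflate sigma, which is the failure mode the docstring describes.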


◆ getOutputPtcDataCovAstier()

`def lsst.cp.pipe.ptc.cpSolvePtcTask.PhotonTransferCurveSolveTask.getOutputPtcDataCovAstier(self, dataset, covFits, covFitsNoB)`

Get output data for PhotonTransferCurveCovAstierDataset from CovFit objects.

Parameters

----------

dataset : `lsst.ip.isr.ptcDataset.PhotonTransferCurveDataset`

The dataset containing information such as the means, variances and exposure times.

covFits : `dict`

Dictionary of CovFit objects, with amp names as keys.

covFitsNoB : `dict`

Dictionary of CovFit objects, with amp names as keys, fitted with 'b=0' in Eq. 20 of Astier+19.

Returns

-------

dataset : `lsst.ip.isr.ptcDataset.PhotonTransferCurveDataset`

This is the same dataset as the input parameter; however, it has been modified to include extra information per amplifier, such as the 1D mask array, gains, readout noise, measured signal, measured variance, modeled variance, and the a and b coefficient matrices (see Astier+19). See the class `PhotonTransferCurveDataset`.

Definition at line 302 of file cpSolvePtcTask.py.
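The bookkeeping here is essentially a per-amplifier merge of two fits that share the same keys. In the sketch below, `CovFitSummary` and `collect_cov_outputs` are hypothetical stand-ins (not the real CovFit interface), and the readout-noise conversion assumes the fit stores the Eq. 20 noise term n00 in e-^2, so that the readout noise in electrons is its square root.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class CovFitSummary:
    """Hypothetical container for one amplifier's covariance fit."""
    gain: float       # e-/ADU
    noise_sq: float   # n00 noise term, assumed to be in e-^2
    a: np.ndarray     # a_ij coefficient matrix
    b: np.ndarray     # b_ij coefficient matrix (all zero when b is fixed to 0)


def collect_cov_outputs(cov_fits, cov_fits_no_b):
    """Build per-amplifier outputs from the full fit and the b=0 fit."""
    if cov_fits.keys() != cov_fits_no_b.keys():
        raise ValueError("Both fit dictionaries must cover the same amplifiers")
    outputs = {}
    for amp_name, fit in cov_fits.items():
        fit_no_b = cov_fits_no_b[amp_name]
        outputs[amp_name] = {
            "gain": fit.gain,
            "noise": np.sqrt(fit.noise_sq),  # e- under the stated assumption
            "aMatrix": fit.a,
            "bMatrix": fit.b,
            "aMatrixNoB": fit_no_b.a,
        }
    return outputs


a = np.full((3, 3), 1e-7)
full = {"C10": CovFitSummary(1.5, 25.0, a, np.full((3, 3), 1e-8))}
no_b = {"C10": CovFitSummary(1.49, 24.0, a * 1.1, np.zeros((3, 3)))}
print(collect_cov_outputs(full, no_b)["C10"]["noise"])
```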

◆ run()

`def lsst.cp.pipe.ptc.cpSolvePtcTask.PhotonTransferCurveSolveTask.run(self, inputCovariances, camera=None, inputExpList=None)`

Fit measured covariances to different models.

Parameters

----------

inputCovariances : `list` [`lsst.ip.isr.PhotonTransferCurveDataset`]

List of lsst.ip.isr.PhotonTransferCurveDataset datasets.

camera : `lsst.afw.cameraGeom.Camera`, optional

Input camera.

inputExpList : `list` [`~lsst.afw.image.exposure.exposure.ExposureF`], optional

List of exposures.

Returns

-------

results : `lsst.pipe.base.Struct`

The results struct containing:

``outputPtcDataset`` : `lsst.ip.isr.PhotonTransferCurveDataset`

Final PTC dataset, containing information such as the means, variances, and exposure times.

Definition at line 191 of file cpSolvePtcTask.py.
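The assembly step in `run()` amounts to concatenating per-amplifier measurements from many partial datasets. The sketch below assumes each partial is a plain dict of the form `{ampName: {"mean": [...], "variance": [...], "expTime": [...]}}`; these keys and `merge_partial_ptcs` are hypothetical, and sorting by mean signal is a choice made here so that later fits see the points in order of increasing flux.

```python
import numpy as np

KEYS = ("mean", "variance", "expTime")


def merge_partial_ptcs(partials):
    """Concatenate per-amplifier PTC points from several partial datasets."""
    merged = {}
    for partial in partials:
        for amp_name, points in partial.items():
            acc = merged.setdefault(amp_name, {key: [] for key in KEYS})
            for key in KEYS:
                acc[key].extend(points[key])
    # Convert to arrays and order every quantity by increasing mean signal.
    result = {}
    for amp_name, acc in merged.items():
        order = np.argsort(acc["mean"])
        result[amp_name] = {key: np.asarray(acc[key], dtype=float)[order] for key in KEYS}
    return result


p1 = {"C10": {"mean": [5000.0], "variance": [3300.0], "expTime": [2.0]}}
p2 = {
    "C10": {"mean": [1000.0], "variance": [680.0], "expTime": [0.5]},
    "C11": {"mean": [1200.0], "variance": [800.0], "expTime": [0.5]},
}
merged = merge_partial_ptcs([p1, p2])
print(merged["C10"]["mean"], merged["C10"]["expTime"])
```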


◆ runQuantum()

`def lsst.cp.pipe.ptc.cpSolvePtcTask.PhotonTransferCurveSolveTask.runQuantum(self, butlerQC, inputRefs, outputRefs)`

Ensure that the input and output dimensions are passed along.

Parameters

----------

butlerQC : `~lsst.daf.butler.butlerQuantumContext.ButlerQuantumContext`

Butler to operate on.

inputRefs : `~lsst.pipe.base.connections.InputQuantizedConnection`

Input data refs to load.

outputRefs : `~lsst.pipe.base.connections.OutputQuantizedConnection`

Output data refs to persist.

Definition at line 175 of file cpSolvePtcTask.py.

Member Data Documentation

◆ ConfigClass

`ConfigClass = PhotonTransferCurveSolveConfig` (static)

Definition at line 172 of file cpSolvePtcTask.py.

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/lsst-scipipe-0.6.0/Linux64/cp_pipe/22.0.1-18-gb17765a+2264247a6b/python/lsst/cp/pipe/ptc/cpSolvePtcTask.py