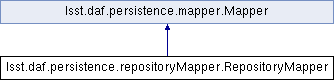

Inheritance diagram for lsst.daf.persistence.repositoryMapper.RepositoryMapper:

Public Member Functions

| def | __init__ (self, storage, policy) |

| def | __repr__ (self) |

| def | map_cfg (self, dataId, write) |

| def | map_repo (self, dataId, write) |

| def | __new__ (cls, *args, **kwargs) |

| def | __getstate__ (self) |

| def | __setstate__ (self, state) |

| def | keys (self) |

| def | queryMetadata (self, datasetType, format, dataId) |

| def | getDatasetTypes (self) |

| def | map (self, datasetType, dataId, write=False) |

| def | canStandardize (self, datasetType) |

| def | standardize (self, datasetType, item, dataId) |

| def | validate (self, dataId) |

| def | backup (self, datasetType, dataId) |

| def | getRegistry (self) |

Public Attributes

| policy | |

| storage | |

Detailed Description

Base class for a mapper to find repository configurations within a butler repository.

.. warning::

cfg is 'wet paint' and very likely to change. Use of it in production code other than via the 'old butler' API is strongly discouraged.

Definition at line 28 of file repositoryMapper.py.

Constructor & Destructor Documentation

◆ __init__()

| def lsst.daf.persistence.repositoryMapper.RepositoryMapper.__init__ | ( | self, storage, policy | ) |

Definition at line 37 of file repositoryMapper.py.

Member Function Documentation

◆ __getstate__()

inherited

◆ __new__()

inherited

Create a new Mapper, saving arguments for pickling. This is in __new__ instead of __init__ to save the user from having to save the arguments themselves (either explicitly, or by calling the super's __init__ with all their *args, **kwargs). The resulting pickling system (of __new__, __getstate__ and __setstate__) is similar to how __reduce__ is usually used, except that we save the user from any responsibility (except when overriding __new__, but that is not common).

Definition at line 84 of file mapper.py.
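The pickling scheme described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the code in mapper.py: the class names `PicklableBase` and `ExampleMapper`, the `_arguments` attribute, and the constructor parameters are all hypothetical. The last lines replay by hand roughly what pickle does on load (call `__new__` without arguments, then `__setstate__` with the saved state).

```python
class PicklableBase:
    """Hypothetical base class illustrating the pattern; not lsst's Mapper."""

    def __new__(cls, *args, **kwargs):
        self = super().__new__(cls)
        # Record the constructor arguments here so subclasses do not
        # have to forward them to a base-class __init__.
        self._arguments = (args, kwargs)
        return self

    def __getstate__(self):
        # The pickled state is just the saved constructor arguments.
        return self._arguments

    def __setstate__(self, state):
        # Rebuild the object by re-running __init__ with the saved arguments.
        args, kwargs = state
        self._arguments = (args, kwargs)
        self.__init__(*args, **kwargs)


class ExampleMapper(PicklableBase):
    """Hypothetical subclass; note it never touches the saved arguments."""

    def __init__(self, root, calib=None):
        self.root = root
        self.calib = calib


original = ExampleMapper("/data/repo", calib="calibs")

# Roughly what unpickling does: create a bare instance, then restore state.
restored = ExampleMapper.__new__(ExampleMapper)
restored.__setstate__(original.__getstate__())
```

The subclass only defines `__init__`; the base class handles saving and replaying the arguments, which is the convenience the description refers to.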

◆ __repr__()

| def lsst.daf.persistence.repositoryMapper.RepositoryMapper.__repr__ | ( | self | ) |

Definition at line 44 of file repositoryMapper.py.

◆ __setstate__()

inherited

◆ backup()

inherited

Rename any existing object with the given type and dataId. Not implemented in the base mapper.

◆ canStandardize()

inherited

Return true if this mapper can standardize an object of the given dataset type.

◆ getDatasetTypes()

inherited

◆ getRegistry()

inherited

◆ keys()

inherited

Reimplemented in lsst.pipe.tasks.mocks.simpleMapper.SimpleMapper.

Definition at line 111 of file mapper.py.

◆ map()

inherited

Map a data id using the mapping method for its dataset type.

Parameters
----------
datasetType : string
    The datasetType to map
dataId : DataId instance
    The dataId to use when mapping
write : bool, optional
    Indicates if the map is being performed for a read operation
    (False) or a write operation (True)

Returns
-------
ButlerLocation or a list of ButlerLocation
    The location(s) found for the map operation. If write is True, a
    list is returned. If write is False a single ButlerLocation is
    returned.

Raises
------
NoResults
    If no location was found for this map operation, the derived mapper
    class may raise a lsst.daf.persistence.NoResults exception. Butler
    catches this and will look in the next Repository if there is one.

Definition at line 137 of file mapper.py.
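The dispatch and fall-through behaviour described for map() can be sketched as below. This is an illustration only: `SketchMapper`, `find_location`, the `raw` dataset type, the `visit` key, and the local `NoResults` class are hypothetical stand-ins, and the `map_<datasetType>` lookup is an assumption suggested by the `map_cfg` and `map_repo` method names on this class rather than something this page states.

```python
class NoResults(RuntimeError):
    """Local stand-in for lsst.daf.persistence.NoResults (hypothetical)."""


class SketchMapper:
    """Hypothetical mapper that knows the location of a few 'raw' visits."""

    def __init__(self, known):
        self.known = known  # dict: visit number -> location string

    def map(self, datasetType, dataId, write=False):
        # Assumed convention: dispatch to the method named map_<datasetType>.
        func = getattr(self, "map_" + datasetType)
        return func(dataId, write)

    def map_raw(self, dataId, write):
        try:
            return self.known[dataId["visit"]]
        except KeyError:
            raise NoResults("no raw data for %r" % (dataId,))


def find_location(mappers, datasetType, dataId):
    """Try each repository's mapper in order, as the caller is said to do."""
    for mapper in mappers:
        try:
            return mapper.map(datasetType, dataId)
        except NoResults:
            continue  # fall through to the next repository
    return None


first = SketchMapper({1: "repoA/raw_1.fits"})
second = SketchMapper({2: "repoB/raw_2.fits"})
found = find_location([first, second], "raw", {"visit": 2})
missing = find_location([first, second], "raw", {"visit": 3})
```

Here the first mapper raises `NoResults` for visit 2, so the lookup moves on to the second; when no mapper has a match, the sketch returns `None`.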

◆ map_cfg()

| def lsst.daf.persistence.repositoryMapper.RepositoryMapper.map_cfg | ( | self, dataId, write | ) |

Map a location for a cfg file.

:param dataId: keys & values to be applied to the template.

:param write: True if this map is being done to perform a write operation, else assumes read. Will verify location exists if write is True.

:return: a butlerLocation that describes the mapped location.

Definition at line 50 of file repositoryMapper.py.
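As a rough illustration of applying the keys and values of a dataId to a template, the sketch below uses Python's printf-style formatting with a dict. The template string, the `name` key, and the helper `apply_template` are hypothetical; this page does not show the actual template or how map_cfg formats it.

```python
def apply_template(template, dataId):
    """Fill %(key)s placeholders in the template from the dataId mapping."""
    return template % dataId


# Hypothetical template and dataId, for illustration only.
template = "repos/%(name)s/repoCfg.yaml"
location = apply_template(template, {"name": "calib"})

# A dataId that lacks a key used by the template raises KeyError.
try:
    apply_template(template, {})
    missing_key_detected = False
except KeyError:
    missing_key_detected = True
```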

◆ map_repo()

| def lsst.daf.persistence.repositoryMapper.RepositoryMapper.map_repo | ( | self, dataId, write | ) |

Definition at line 72 of file repositoryMapper.py.

◆ queryMetadata()

inherited

◆ standardize()

inherited

◆ validate()

inherited

Member Data Documentation

◆ policy

| lsst.daf.persistence.repositoryMapper.RepositoryMapper.policy |

Definition at line 41 of file repositoryMapper.py.

◆ storage

| lsst.daf.persistence.repositoryMapper.RepositoryMapper.storage |

Definition at line 42 of file repositoryMapper.py.

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/lsst-scipipe-0.7.0/Linux64/daf_persistence/22.0.1-4-g243d05b+871c1b8305/python/lsst/daf/persistence/repositoryMapper.py