Public Member Functions | |

| def | __init__ (self, ApdbSqlConfig config) |

| Dict[str, int] | tableRowCount (self) |

| Optional[TableDef] | tableDef (self, ApdbTables table) |

| None | makeSchema (self, bool drop=False) |

| pandas.DataFrame | getDiaObjects (self, Region region) |

| Optional[pandas.DataFrame] | getDiaSources (self, Region region, Optional[Iterable[int]] object_ids, dafBase.DateTime visit_time) |

| Optional[pandas.DataFrame] | getDiaForcedSources (self, Region region, Optional[Iterable[int]] object_ids, dafBase.DateTime visit_time) |

| None | store (self, dafBase.DateTime visit_time, pandas.DataFrame objects, Optional[pandas.DataFrame] sources=None, Optional[pandas.DataFrame] forced_sources=None) |

| None | dailyJob (self) |

| int | countUnassociatedObjects (self) |

| ConfigurableField | makeField (cls, str doc) |

Public Attributes | |

| config | |

| pixelator | |

Static Public Attributes | |

| ConfigClass = ApdbSqlConfig | |

Detailed Description

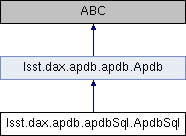

Implementation of APDB interface based on SQL database.

The implementation is configured via the standard ``pex_config`` mechanism

using the `ApdbSqlConfig` configuration class. For examples of different

configurations, see the ``config/`` folder.

Parameters

----------

config : `ApdbSqlConfig`

Configuration object.

Definition at line 197 of file apdbSql.py.

Constructor & Destructor Documentation

◆ __init__()

| def lsst.dax.apdb.apdbSql.ApdbSql.__init__ | ( | self, | |

| ApdbSqlConfig | config | ||

| ) |

Definition at line 212 of file apdbSql.py.

Member Function Documentation

◆ countUnassociatedObjects()

| int lsst.dax.apdb.apdbSql.ApdbSql.countUnassociatedObjects | ( | self | ) |

Return the number of DiaObjects that have only one DiaSource

associated with them.

Used as part of ap_verify metrics.

Returns

-------

count : `int`

Number of DiaObjects with exactly one associated DiaSource.

Notes

-----

This method can be very inefficient or slow in some implementations.
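
To illustrate what "exactly one associated DiaSource" means, and why such a count can be slow, here is a minimal sketch using the standard-library `sqlite3` module. It is not the `ApdbSql` implementation: the simplified `DiaSource` table and the helper `count_single_source_objects` are hypothetical, and only the ``diaObjectId`` association column is taken from this page.

```python
import sqlite3


def count_single_source_objects(conn: sqlite3.Connection) -> int:
    """Count objects that have exactly one associated source row."""
    query = """
        SELECT COUNT(*) FROM (
            SELECT diaObjectId
            FROM DiaSource
            GROUP BY diaObjectId
            HAVING COUNT(*) = 1
        ) AS singles
    """
    return conn.execute(query).fetchone()[0]


# Hypothetical, heavily simplified table (assumption for this sketch only).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE DiaSource (diaSourceId INTEGER PRIMARY KEY, diaObjectId INTEGER)"
)
conn.executemany(
    "INSERT INTO DiaSource (diaSourceId, diaObjectId) VALUES (?, ?)",
    [(1, 100), (2, 100), (3, 200), (4, 300)],
)
# Object 100 has two sources; objects 200 and 300 have one each.
single_count = count_single_source_objects(conn)
```

A grouping query like this has to visit every source row, which is one reason a count of this kind may be expensive on a large database.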

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 435 of file apdbSql.py.

◆ dailyJob()

| None lsst.dax.apdb.apdbSql.ApdbSql.dailyJob | ( | self | ) |

Implement daily activities like cleanup/vacuum. What should be done during daily activities is determined by specific implementation.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 422 of file apdbSql.py.

◆ getDiaForcedSources()

| Optional[pandas.DataFrame] lsst.dax.apdb.apdbSql.ApdbSql.getDiaForcedSources | ( | self, | |

| Region | region, | ||

| Optional[Iterable[int]] | object_ids, | ||

| dafBase.DateTime | visit_time | ||

| ) |

Return catalog of DiaForcedSource instances from a given region.

Parameters

----------

region : `lsst.sphgeom.Region`

Region to search for DIASources.

object_ids : iterable [ `int` ], optional

List of DiaObject IDs to further constrain the set of returned

sources. If list is empty then empty catalog is returned with a

correct schema.

visit_time : `lsst.daf.base.DateTime`

Time of the current visit.

Returns

-------

catalog : `pandas.DataFrame`, or `None`

Catalog containing DiaForcedSource records. `None` is returned if

``read_forced_sources_months`` configuration parameter is set to 0.

Raises

------

NotImplementedError

Raised if ``object_ids`` is `None`.

Notes

-----

Even though base class allows `None` to be passed for ``object_ids``,

this class requires ``object_ids`` to be not-`None`.

`NotImplementedError` is raised if `None` is passed.

This method returns DiaForcedSource catalog for a region with additional

filtering based on DiaObject IDs. Only a subset of DiaForcedSource history

is returned limited by ``read_forced_sources_months`` config parameter,

w.r.t. ``visit_time``. If ``object_ids`` is empty then an empty catalog

is always returned with a correct schema (columns/types).
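
The three outcomes described above (`None` when reading is disabled, `NotImplementedError` for ``object_ids=None``, and an empty catalog with the right columns for an empty ID list) can be sketched in plain Python. This is an illustration only: the function `forced_sources_sketch`, the dict-of-lists catalog, the column subset, and the order of the checks are all assumptions, and the time-window filtering is left out.

```python
from typing import Dict, Iterable, List, Optional

# Hypothetical stand-in for a catalog: column name -> list of values.
Catalog = Dict[str, List[int]]
FORCED_SOURCE_COLUMNS = ("diaObjectId", "ccdVisitId")  # illustrative subset


def forced_sources_sketch(
    rows: List[Dict[str, int]],
    object_ids: Optional[Iterable[int]],
    read_forced_sources_months: int,
) -> Optional[Catalog]:
    """Mimic the documented return/raise behaviour (not the real query)."""
    if read_forced_sources_months == 0:
        return None  # reading of forced sources is disabled
    if object_ids is None:
        raise NotImplementedError("object_ids must not be None")
    wanted = set(object_ids)
    # Start from an empty catalog that already has every column.
    catalog: Catalog = {name: [] for name in FORCED_SOURCE_COLUMNS}
    for row in rows:
        if row["diaObjectId"] in wanted:
            for name in FORCED_SOURCE_COLUMNS:
                catalog[name].append(row[name])
    return catalog


example_rows = [
    {"diaObjectId": 1, "ccdVisitId": 10},
    {"diaObjectId": 2, "ccdVisitId": 10},
]
empty_catalog = forced_sources_sketch(example_rows, [], 12)
selected = forced_sources_sketch(example_rows, [2], 12)
disabled = forced_sources_sketch(example_rows, [2], 0)
```

Because the empty result still carries every column, callers can concatenate or inspect it without special-casing the "no objects" situation.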

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 343 of file apdbSql.py.

◆ getDiaObjects()

| pandas.DataFrame lsst.dax.apdb.apdbSql.ApdbSql.getDiaObjects | ( | self, | |

| Region | region | ||

| ) |

Return catalog of DiaObject instances from a given region.

This method returns only the last version of each DiaObject. Some

records in a returned catalog may be outside the specified region; it

is up to a client to ignore those records or clean up the catalog before

further use.

Parameters

----------

region : `lsst.sphgeom.Region`

Region to search for DIAObjects.

Returns

-------

catalog : `pandas.DataFrame`

Catalog containing DiaObject records for a region that may be a

superset of the specified region.
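
Since the returned catalog may cover a superset of the requested region, a client may want to filter it afterwards. The sketch below shows one way to do that for a circular region using only the standard library. The record layout (``ra`` and ``decl`` in degrees), the circular region, and both helper functions are assumptions made for illustration; real client code would more likely test positions against the `lsst.sphgeom.Region` it passed in.

```python
import math
from typing import Dict, List


def angular_separation_deg(ra1: float, dec1: float, ra2: float, dec2: float) -> float:
    """Great-circle separation in degrees (haversine formula)."""
    ra1, dec1, ra2, dec2 = map(math.radians, (ra1, dec1, ra2, dec2))
    a = (
        math.sin((dec2 - dec1) / 2) ** 2
        + math.cos(dec1) * math.cos(dec2) * math.sin((ra2 - ra1) / 2) ** 2
    )
    return math.degrees(2 * math.asin(min(1.0, math.sqrt(a))))


def keep_inside_circle(
    records: List[Dict[str, float]],
    center_ra: float,
    center_dec: float,
    radius_deg: float,
) -> List[Dict[str, float]]:
    """Drop records farther than radius_deg from the circle center."""
    return [
        rec
        for rec in records
        if angular_separation_deg(rec["ra"], rec["decl"], center_ra, center_dec)
        <= radius_deg
    ]


# Hypothetical records; the second one lies well outside the circle.
records = [
    {"diaObjectId": 1, "ra": 10.1, "decl": 20.1},
    {"diaObjectId": 2, "ra": 12.0, "decl": 20.0},
]
inside = keep_inside_circle(records, center_ra=10.0, center_dec=20.0, radius_deg=0.5)
inside_ids = [rec["diaObjectId"] for rec in inside]
```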

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 285 of file apdbSql.py.

◆ getDiaSources()

| Optional[pandas.DataFrame] lsst.dax.apdb.apdbSql.ApdbSql.getDiaSources | ( | self, | |

| Region | region, | ||

| Optional[Iterable[int]] | object_ids, | ||

| dafBase.DateTime | visit_time | ||

| ) |

Return catalog of DiaSource instances from a given region.

Parameters

----------

region : `lsst.sphgeom.Region`

Region to search for DIASources.

object_ids : iterable [ `int` ], optional

List of DiaObject IDs to further constrain the set of returned

sources. If `None` then returned sources are not constrained. If

list is empty then empty catalog is returned with a correct

schema.

visit_time : `lsst.daf.base.DateTime`

Time of the current visit.

Returns

-------

catalog : `pandas.DataFrame`, or `None`

Catalog containing DiaSource records. `None` is returned if

``read_sources_months`` configuration parameter is set to 0.

Notes

-----

This method returns DiaSource catalog for a region with additional

filtering based on DiaObject IDs. Only a subset of DiaSource history

is returned limited by ``read_sources_months`` config parameter, w.r.t.

``visit_time``. If ``object_ids`` is empty then an empty catalog is

always returned with the correct schema (columns/types). If

``object_ids`` is `None` then no filtering is performed and some of the

returned records may be outside the specified region.
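
The history limit "w.r.t. ``visit_time``" amounts to a cutoff time before the visit. A minimal sketch of that arithmetic follows. How months are converted to a time span is not stated on this page, so the 30-day month used here is an assumption, as are the helper names and the use of `datetime` in place of `lsst.daf.base.DateTime`.

```python
from datetime import datetime, timedelta, timezone

DAYS_PER_MONTH = 30  # assumption for this sketch, not taken from the source


def history_window_start(visit_time: datetime, read_sources_months: int) -> datetime:
    """Earliest source time that still falls inside the history window."""
    return visit_time - timedelta(days=read_sources_months * DAYS_PER_MONTH)


def is_in_history(source_time: datetime, visit_time: datetime, months: int) -> bool:
    """True if the source is not older than the configured history window."""
    return source_time >= history_window_start(visit_time, months)


visit = datetime(2021, 6, 30, tzinfo=timezone.utc)
window_start = history_window_start(visit, 12)  # 360 days before the visit
recent = is_in_history(datetime(2021, 1, 1, tzinfo=timezone.utc), visit, 12)
too_old = is_in_history(datetime(2020, 1, 1, tzinfo=timezone.utc), visit, 12)
```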

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 329 of file apdbSql.py.

◆ makeField()

|

inherited |

◆ makeSchema()

| None lsst.dax.apdb.apdbSql.ApdbSql.makeSchema | ( | self, | |

| bool | drop = False |

||

| ) |

Create or re-create whole database schema.

Parameters

----------

drop : `bool`

If True then drop all tables before creating new ones.
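
The effect of the ``drop`` flag can be sketched with the standard-library `sqlite3` module. This is not how `ApdbSql` builds its schema: the two-table DDL and the function `make_schema_sketch` are hypothetical, and the "create only if missing" behaviour for ``drop=False`` is an assumption of the sketch.

```python
import sqlite3

# Hypothetical, heavily simplified DDL (the real schema is far larger).
TABLE_DDL = {
    "DiaObject": (
        "CREATE TABLE IF NOT EXISTS DiaObject "
        "(diaObjectId INTEGER, validityStart TEXT, validityEnd TEXT)"
    ),
    "DiaSource": (
        "CREATE TABLE IF NOT EXISTS DiaSource "
        "(diaSourceId INTEGER PRIMARY KEY, diaObjectId INTEGER)"
    ),
}


def make_schema_sketch(conn: sqlite3.Connection, drop: bool = False) -> None:
    """Create the tables, optionally dropping existing ones first."""
    for name, ddl in TABLE_DDL.items():
        if drop:
            # Dropping discards every row stored in the table.
            conn.execute(f"DROP TABLE IF EXISTS {name}")
        conn.execute(ddl)
    conn.commit()


conn = sqlite3.connect(":memory:")
make_schema_sketch(conn)
conn.execute("INSERT INTO DiaObject (diaObjectId) VALUES (1)")
make_schema_sketch(conn)  # drop=False keeps the existing row
rows_kept = conn.execute("SELECT COUNT(*) FROM DiaObject").fetchone()[0]
make_schema_sketch(conn, drop=True)  # drop=True starts from empty tables
rows_after_drop = conn.execute("SELECT COUNT(*) FROM DiaObject").fetchone()[0]
```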

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 281 of file apdbSql.py.

◆ store()

| None lsst.dax.apdb.apdbSql.ApdbSql.store | ( | self, | |

| dafBase.DateTime | visit_time, | ||

| pandas.DataFrame | objects, | ||

| Optional[pandas.DataFrame] | sources = None, |

||

| Optional[pandas.DataFrame] | forced_sources = None |

||

| ) |

Store all three types of catalogs in the database.

Parameters

----------

visit_time : `lsst.daf.base.DateTime`

Time of the visit.

objects : `pandas.DataFrame`

Catalog with DiaObject records.

sources : `pandas.DataFrame`, optional

Catalog with DiaSource records.

forced_sources : `pandas.DataFrame`, optional

Catalog with DiaForcedSource records.

Notes

-----

This method takes DataFrame catalogs; their schema must be

compatible with the schema of APDB table:

- column names must correspond to database table columns

- types and units of the columns must match database definitions,

no unit conversion is performed presently

- columns that have default values in database schema can be

omitted from catalog

- this method knows how to fill interval-related columns of DiaObject

(validityStart, validityEnd); they do not need to appear in a

catalog

- source catalogs have ``diaObjectId`` column associating sources

with objects
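
The column rules listed above can be checked before calling `store`. The following is a minimal sketch of such a pre-flight check, written against plain column-name lists so that it runs without the LSST stack. The helper `check_catalog_columns`, the table description, and the column subset are hypothetical; with a real catalog one would pass `list(df.columns)`. Types and units are not checked here.

```python
from typing import Dict, Iterable, List


def check_catalog_columns(
    catalog_columns: Iterable[str],
    table_has_default: Dict[str, bool],
    auto_filled: Iterable[str] = (),
) -> List[str]:
    """Return a list of problems; an empty list means the names are compatible."""
    present = set(catalog_columns)
    auto = set(auto_filled)
    problems = []
    # Every catalog column must correspond to a table column.
    for name in sorted(present - set(table_has_default)):
        problems.append(f"unknown column: {name}")
    # A column may be omitted only if it has a default or is filled automatically.
    for name, has_default in sorted(table_has_default.items()):
        if name not in present and not has_default and name not in auto:
            problems.append(f"missing required column: {name}")
    return problems


# Hypothetical table description: column name -> "has a default value".
DIA_OBJECT_COLUMNS = {
    "diaObjectId": False,
    "ra": False,
    "decl": False,
    "validityStart": False,
    "validityEnd": False,
    "nDiaSources": True,
}
INTERVAL_COLUMNS = ("validityStart", "validityEnd")

ok = check_catalog_columns(
    ["diaObjectId", "ra", "decl"], DIA_OBJECT_COLUMNS, INTERVAL_COLUMNS
)
bad = check_catalog_columns(
    ["diaObjectId", "ra", "flux"], DIA_OBJECT_COLUMNS, INTERVAL_COLUMNS
)
```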

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 403 of file apdbSql.py.

◆ tableDef()

| Optional[TableDef] lsst.dax.apdb.apdbSql.ApdbSql.tableDef | ( | self, | |

| ApdbTables | table | ||

| ) |

Return table schema definition for a given table.

Parameters

----------

table : `ApdbTables`

One of the known APDB tables.

Returns

-------

tableSchema : `TableDef` or `None`

Table schema description, `None` is returned if table is not

defined by this implementation.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 277 of file apdbSql.py.

◆ tableRowCount()

| Dict[str, int] lsst.dax.apdb.apdbSql.ApdbSql.tableRowCount | ( | self | ) |

Return a dictionary with the table names and row counts.

Used by ``ap_proto`` to keep track of the size of the database tables.

Depending on database technology this could be an expensive operation.

Returns

-------

row_counts : `dict`

Dict where key is a table name and value is a row count.
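
The shape of the returned dictionary can be illustrated with a small `sqlite3` sketch. It does not reproduce the `ApdbSql` code: the function `table_row_counts`, the use of SQLite's `sqlite_master` catalog, and the two simplified tables are assumptions made for the example.

```python
import sqlite3
from typing import Dict


def table_row_counts(conn: sqlite3.Connection) -> Dict[str, int]:
    """Map each user table name to its number of rows."""
    names = [
        row[0]
        for row in conn.execute(
            "SELECT name FROM sqlite_master "
            "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
        )
    ]
    counts = {}
    for name in names:
        # One COUNT(*) per table; this can be a full scan on large tables.
        counts[name] = conn.execute(f'SELECT COUNT(*) FROM "{name}"').fetchone()[0]
    return counts


# Hypothetical, simplified tables for demonstration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE DiaObject (diaObjectId INTEGER)")
conn.execute("CREATE TABLE DiaSource (diaSourceId INTEGER, diaObjectId INTEGER)")
conn.executemany("INSERT INTO DiaObject VALUES (?)", [(1,), (2,)])
conn.executemany("INSERT INTO DiaSource VALUES (?, ?)", [(10, 1), (11, 1), (12, 2)])
row_counts = table_row_counts(conn)
```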

Definition at line 254 of file apdbSql.py.

Member Data Documentation

◆ config

| lsst.dax.apdb.apdbSql.ApdbSql.config |

Definition at line 214 of file apdbSql.py.

◆ ConfigClass

|

static |

Definition at line 210 of file apdbSql.py.

◆ pixelator

| lsst.dax.apdb.apdbSql.ApdbSql.pixelator |

Definition at line 252 of file apdbSql.py.

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/lsst-scipipe-0.7.0/Linux64/dax_apdb/22.0.1-5-g75bb458+99c117b92f/python/lsst/dax/apdb/apdbSql.py