Public Member Functions | |

| def | adaptArgsAndRun (self, inputData, inputDataIds, outputDataIds, butler) |

| def | filterReferences (self, exposure, refCat, refWcs) |

| def | makeIdFactory (self, dataRef) |

| def | getExposureId (self, dataRef) |

| def | fetchReferences (self, dataRef, exposure) |

| def | getExposure (self, dataRef) |

| def | getInitOutputDatasets (self) |

| def | generateMeasCat (self, exposureDataId, exposure, refCat, refWcs, idPackerName, butler) |

| def | runDataRef (self, dataRef, psfCache=None) |

| def | run (self, measCat, exposure, refCat, refWcs, exposureId=None) |

| def | run (self, kwargs) |

| def | attachFootprints (self, sources, refCat, exposure, refWcs, dataRef) |

| def | writeOutput (self, dataRef, sources) |

| def | getSchemaCatalogs (self) |

| def | getInputDatasetTypes (cls, config) |

| def | getOutputDatasetTypes (cls, config) |

| def | getPrerequisiteDatasetTypes (cls, config) |

| def | getInitInputDatasetTypes (cls, config) |

| def | getInitOutputDatasetTypes (cls, config) |

| def | getDatasetTypes (cls, config, configClass) |

| def | getPerDatasetTypeDimensions (cls, config) |

| def | runQuantum (self, quantum, butler) |

| def | saveStruct (self, struct, outputDataRefs, butler) |

| def | getResourceConfig (self) |

| def | emptyMetadata (self) |

| def | emptyMetadata (self) |

| def | getAllSchemaCatalogs (self) |

| def | getAllSchemaCatalogs (self) |

| def | getFullMetadata (self) |

| def | getFullMetadata (self) |

| def | getFullName (self) |

| def | getFullName (self) |

| def | getName (self) |

| def | getName (self) |

| def | getTaskDict (self) |

| def | getTaskDict (self) |

| def | makeSubtask (self, name, keyArgs) |

| def | makeSubtask (self, name, keyArgs) |

| def | timer (self, name, logLevel=Log.DEBUG) |

| def | timer (self, name, logLevel=Log.DEBUG) |

| def | makeField (cls, doc) |

| def | makeField (cls, doc) |

| def | __reduce__ (self) |

| def | __reduce__ (self) |

| def | applyOverrides (cls, config) |

| def | parseAndRun (cls, args=None, config=None, log=None, doReturnResults=False) |

| def | writeConfig (self, butler, clobber=False, doBackup=True) |

| def | writeSchemas (self, butler, clobber=False, doBackup=True) |

| def | writeMetadata (self, dataRef) |

| def | writePackageVersions (self, butler, clobber=False, doBackup=True, dataset="packages") |

Public Attributes | |

| metadata | |

| metadata | |

| log | |

| log | |

| config | |

| config | |

Static Public Attributes | |

| ConfigClass = ForcedPhotCcdConfig | |

| RunnerClass = lsst.pipe.base.ButlerInitializedTaskRunner | |

| string | dataPrefix = "" |

| bool | canMultiprocess = True |

| bool | canMultiprocess = True |

Detailed Description

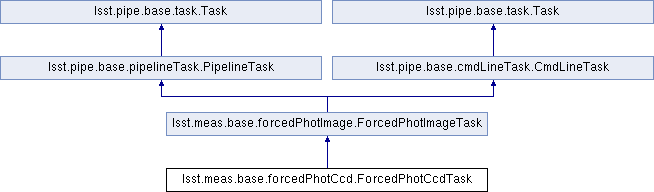

A command-line driver for performing forced measurement on CCD images. Notes ----- This task is a subclass of :lsst-task:`lsst.meas.base.forcedPhotImage.ForcedPhotImageTask` which is specifically for doing forced measurement on a single CCD exposure, using as a reference catalog the detections which were made on overlapping coadds. The `run` method (inherited from `ForcedPhotImageTask`) takes a `~lsst.daf.persistence.ButlerDataRef` argument that corresponds to a single CCD. This should contain the data ID keys that correspond to the ``forced_src`` dataset (the output dataset for this task), which are typically all those used to specify the ``calexp`` dataset (``visit``, ``raft``, ``sensor`` for LSST data) as well as a coadd tract. The tract is used to look up the appropriate coadd measurement catalogs to use as references (e.g. ``deepCoadd_src``; see :lsst-task:`lsst.meas.base.references.CoaddSrcReferencesTask` for more information). While the tract must be given as part of the dataRef, the patches are determined automatically from the bounding box and WCS of the calexp to be measured, and the filter used to fetch references is set via the ``filter`` option in the configuration of :lsst-task:`lsst.meas.base.references.BaseReferencesTask`). In addition to the `run` method, `ForcedPhotCcdTask` overrides several methods of `ForcedPhotImageTask` to specialize it for single-CCD processing, including `~ForcedPhotImageTask.makeIdFactory`, `~ForcedPhotImageTask.fetchReferences`, and `~ForcedPhotImageTask.getExposure`. None of these should be called directly by the user, though it may be useful to override them further in subclasses.

Definition at line 168 of file forcedPhotCcd.py.

Member Function Documentation

◆ __reduce__() [1/2]

|

inherited |

Pickler.

◆ __reduce__() [2/2]

|

inherited |

Pickler.

◆ adaptArgsAndRun()

| def lsst.meas.base.forcedPhotCcd.ForcedPhotCcdTask.adaptArgsAndRun | ( | self, | |

| inputData, | |||

| inputDataIds, | |||

| outputDataIds, | |||

| butler | |||

| ) |

Definition at line 208 of file forcedPhotCcd.py.

◆ applyOverrides()

|

inherited |

A hook to allow a task to change the values of its config *after* the camera-specific

overrides are loaded but before any command-line overrides are applied.

Parameters

----------

config : instance of task's ``ConfigClass``

Task configuration.

Notes

-----

This is necessary in some cases because the camera-specific overrides may retarget subtasks,

wiping out changes made in ConfigClass.setDefaults. See LSST Trac ticket #2282 for more discussion.

.. warning::

This is called by CmdLineTask.parseAndRun; other ways of constructing a config will not apply

these overrides.

Definition at line 527 of file cmdLineTask.py.

◆ attachFootprints()

|

inherited |

Attach footprints to blank sources prior to measurements. Notes ----- `~lsst.afw.detection.Footprint`\ s for forced photometry must be in the pixel coordinate system of the image being measured, while the actual detections may start out in a different coordinate system. Subclasses of this class must implement this method to define how those `~lsst.afw.detection.Footprint`\ s should be generated. This default implementation transforms the `~lsst.afw.detection.Footprint`\ s from the reference catalog from the reference WCS to the exposure's WcS, which downgrades `lsst.afw.detection.heavyFootprint.HeavyFootprint`\ s into regular `~lsst.afw.detection.Footprint`\ s, destroying deblend information.

Definition at line 327 of file forcedPhotImage.py.

◆ emptyMetadata() [1/2]

|

inherited |

Empty (clear) the metadata for this Task and all sub-Tasks.

Definition at line 153 of file task.py.

◆ emptyMetadata() [2/2]

|

inherited |

Empty (clear) the metadata for this Task and all sub-Tasks.

Definition at line 153 of file task.py.

◆ fetchReferences()

| def lsst.meas.base.forcedPhotCcd.ForcedPhotCcdTask.fetchReferences | ( | self, | |

| dataRef, | |||

| exposure | |||

| ) |

Get sources that overlap the exposure.

Parameters

----------

dataRef : `lsst.daf.persistence.ButlerDataRef`

Butler data reference corresponding to the image to be measured;

should have ``tract``, ``patch``, and ``filter`` keys.

exposure : `lsst.afw.image.Exposure`

The image to be measured (used only to obtain a WCS and bounding

box).

Returns

-------

referencs : `lsst.afw.table.SourceCatalog`

Catalog of sources that overlap the exposure

Notes

-----

The returned catalog is sorted by ID and guarantees that all included

children have their parent included and that all Footprints are valid.

All work is delegated to the references subtask; see

:lsst-task:`lsst.meas.base.references.CoaddSrcReferencesTask`

for information about the default behavior.

Definition at line 311 of file forcedPhotCcd.py.

◆ filterReferences()

| def lsst.meas.base.forcedPhotCcd.ForcedPhotCcdTask.filterReferences | ( | self, | |

| exposure, | |||

| refCat, | |||

| refWcs | |||

| ) |

Filter reference catalog so that all sources are within the

boundaries of the exposure.

Parameters

----------

exposure : `lsst.afw.image.exposure.Exposure`

Exposure to generate the catalog for.

refCat : `lsst.afw.table.SourceCatalog`

Catalog of shapes and positions at which to force photometry.

refWcs : `lsst.afw.image.SkyWcs`

Reference world coordinate system.

Returns

-------

refSources : `lsst.afw.table.SourceCatalog`

Filtered catalog of forced sources to measure.

Notes

-----

Filtering the reference catalog is currently handled by Gen2

specific methods. To function for Gen3, this method copies

code segments to do the filtering and transformation. The

majority of this code is based on the methods of

lsst.meas.algorithms.loadReferenceObjects.ReferenceObjectLoader

Definition at line 219 of file forcedPhotCcd.py.

◆ generateMeasCat()

|

inherited |

Generate a measurement catalog for Gen3.

Parameters

----------

exposureDataId : `DataId`

Butler dataId for this exposure.

exposure : `lsst.afw.image.exposure.Exposure`

Exposure to generate the catalog for.

refCat : `lsst.afw.table.SourceCatalog`

Catalog of shapes and positions at which to force photometry.

refWcs : `lsst.afw.image.SkyWcs`

Reference world coordinate system.

idPackerName : `str`

Type of ID packer to construct from the registry.

butler : `lsst.daf.persistence.butler.Butler`

Butler to use to construct id packer.

Returns

-------

measCat : `lsst.afw.table.SourceCatalog`

Catalog of forced sources to measure.

Definition at line 189 of file forcedPhotImage.py.

◆ getAllSchemaCatalogs() [1/2]

|

inherited |

Get schema catalogs for all tasks in the hierarchy, combining the results into a single dict.

Returns

-------

schemacatalogs : `dict`

Keys are butler dataset type, values are a empty catalog (an instance of the appropriate

lsst.afw.table Catalog type) for all tasks in the hierarchy, from the top-level task down

through all subtasks.

Notes

-----

This method may be called on any task in the hierarchy; it will return the same answer, regardless.

The default implementation should always suffice. If your subtask uses schemas the override

`Task.getSchemaCatalogs`, not this method.

◆ getAllSchemaCatalogs() [2/2]

|

inherited |

Get schema catalogs for all tasks in the hierarchy, combining the results into a single dict.

Returns

-------

schemacatalogs : `dict`

Keys are butler dataset type, values are a empty catalog (an instance of the appropriate

lsst.afw.table Catalog type) for all tasks in the hierarchy, from the top-level task down

through all subtasks.

Notes

-----

This method may be called on any task in the hierarchy; it will return the same answer, regardless.

The default implementation should always suffice. If your subtask uses schemas the override

`Task.getSchemaCatalogs`, not this method.

◆ getDatasetTypes()

|

inherited |

Return dataset type descriptors defined in task configuration.

This method can be used by other methods that need to extract dataset

types from task configuration (e.g. `getInputDatasetTypes` or

sub-class methods).

Parameters

----------

config : `Config`

Configuration for this task. Typically datasets are defined in

a task configuration.

configClass : `type`

Class of the configuration object which defines dataset type.

Returns

-------

Dictionary where key is the name (arbitrary) of the output dataset

and value is the `DatasetTypeDescriptor` instance. Default

implementation uses configuration field name as dictionary key.

Returns empty dict if configuration has no fields with the specified

``configClass``.

Definition at line 353 of file pipelineTask.py.

◆ getExposure()

| def lsst.meas.base.forcedPhotCcd.ForcedPhotCcdTask.getExposure | ( | self, | |

| dataRef | |||

| ) |

Read input exposure for measurement.

Parameters

----------

dataRef : `lsst.daf.persistence.ButlerDataRef`

Butler data reference. Only the ``calexp`` dataset is used, unless

``config.doApplyUberCal`` is `True`, in which case the

corresponding meas_mosaic outputs are used as well.

Definition at line 354 of file forcedPhotCcd.py.

◆ getExposureId()

| def lsst.meas.base.forcedPhotCcd.ForcedPhotCcdTask.getExposureId | ( | self, | |

| dataRef | |||

| ) |

Definition at line 308 of file forcedPhotCcd.py.

◆ getFullMetadata() [1/2]

|

inherited |

Get metadata for all tasks.

Returns

-------

metadata : `lsst.daf.base.PropertySet`

The `~lsst.daf.base.PropertySet` keys are the full task name. Values are metadata

for the top-level task and all subtasks, sub-subtasks, etc..

Notes

-----

The returned metadata includes timing information (if ``@timer.timeMethod`` is used)

and any metadata set by the task. The name of each item consists of the full task name

with ``.`` replaced by ``:``, followed by ``.`` and the name of the item, e.g.::

topLevelTaskName:subtaskName:subsubtaskName.itemName

using ``:`` in the full task name disambiguates the rare situation that a task has a subtask

and a metadata item with the same name.

Definition at line 210 of file task.py.

◆ getFullMetadata() [2/2]

|

inherited |

Get metadata for all tasks.

Returns

-------

metadata : `lsst.daf.base.PropertySet`

The `~lsst.daf.base.PropertySet` keys are the full task name. Values are metadata

for the top-level task and all subtasks, sub-subtasks, etc..

Notes

-----

The returned metadata includes timing information (if ``@timer.timeMethod`` is used)

and any metadata set by the task. The name of each item consists of the full task name

with ``.`` replaced by ``:``, followed by ``.`` and the name of the item, e.g.::

topLevelTaskName:subtaskName:subsubtaskName.itemName

using ``:`` in the full task name disambiguates the rare situation that a task has a subtask

and a metadata item with the same name.

Definition at line 210 of file task.py.

◆ getFullName() [1/2]

|

inherited |

Get the task name as a hierarchical name including parent task names.

Returns

-------

fullName : `str`

The full name consists of the name of the parent task and each subtask separated by periods.

For example:

- The full name of top-level task "top" is simply "top".

- The full name of subtask "sub" of top-level task "top" is "top.sub".

- The full name of subtask "sub2" of subtask "sub" of top-level task "top" is "top.sub.sub2".

Definition at line 235 of file task.py.

◆ getFullName() [2/2]

|

inherited |

Get the task name as a hierarchical name including parent task names.

Returns

-------

fullName : `str`

The full name consists of the name of the parent task and each subtask separated by periods.

For example:

- The full name of top-level task "top" is simply "top".

- The full name of subtask "sub" of top-level task "top" is "top.sub".

- The full name of subtask "sub2" of subtask "sub" of top-level task "top" is "top.sub.sub2".

Definition at line 235 of file task.py.

◆ getInitInputDatasetTypes()

|

inherited |

Return dataset type descriptors that can be used to retrieve the

``initInputs`` constructor argument.

Datasets used in initialization may not be associated with any

Dimension (i.e. their data IDs must be empty dictionaries).

Default implementation finds all fields of type

`InitInputInputDatasetConfig` in configuration (non-recursively) and

uses them for constructing `DatasetTypeDescriptor` instances. The

names of these fields are used as keys in returned dictionary.

Subclasses can override this behavior.

Parameters

----------

config : `Config`

Configuration for this task. Typically datasets are defined in

a task configuration.

Returns

-------

Dictionary where key is the name (arbitrary) of the input dataset

and value is the `DatasetTypeDescriptor` instance. Default

implementation uses configuration field name as dictionary key.

When the task requires no initialization inputs, should return an

empty dict.

Definition at line 291 of file pipelineTask.py.

◆ getInitOutputDatasets()

|

inherited |

Definition at line 177 of file forcedPhotImage.py.

◆ getInitOutputDatasetTypes()

|

inherited |

Return dataset type descriptors that can be used to write the

objects returned by `getOutputDatasets`.

Datasets used in initialization may not be associated with any

Dimension (i.e. their data IDs must be empty dictionaries).

Default implementation finds all fields of type

`InitOutputDatasetConfig` in configuration (non-recursively) and uses

them for constructing `DatasetTypeDescriptor` instances. The names of

these fields are used as keys in returned dictionary. Subclasses can

override this behavior.

Parameters

----------

config : `Config`

Configuration for this task. Typically datasets are defined in

a task configuration.

Returns

-------

Dictionary where key is the name (arbitrary) of the output dataset

and value is the `DatasetTypeDescriptor` instance. Default

implementation uses configuration field name as dictionary key.

When the task produces no initialization outputs, should return an

empty dict.

Definition at line 322 of file pipelineTask.py.

◆ getInputDatasetTypes()

|

inherited |

Return input dataset type descriptors for this task.

Default implementation finds all fields of type `InputDatasetConfig`

in configuration (non-recursively) and uses them for constructing

`DatasetTypeDescriptor` instances. The names of these fields are used

as keys in returned dictionary. Subclasses can override this behavior.

Parameters

----------

config : `Config`

Configuration for this task. Typically datasets are defined in

a task configuration.

Returns

-------

Dictionary where key is the name (arbitrary) of the input dataset

and value is the `DatasetTypeDescriptor` instance. Default

implementation uses configuration field name as dictionary key.

Definition at line 214 of file pipelineTask.py.

◆ getName() [1/2]

|

inherited |

Get the name of the task.

Returns

-------

taskName : `str`

Name of the task.

See also

--------

getFullName

◆ getName() [2/2]

|

inherited |

Get the name of the task.

Returns

-------

taskName : `str`

Name of the task.

See also

--------

getFullName

◆ getOutputDatasetTypes()

|

inherited |

Return output dataset type descriptors for this task.

Default implementation finds all fields of type `OutputDatasetConfig`

in configuration (non-recursively) and uses them for constructing

`DatasetTypeDescriptor` instances. The keys of these fields are used

as keys in returned dictionary. Subclasses can override this behavior.

Parameters

----------

config : `Config`

Configuration for this task. Typically datasets are defined in

a task configuration.

Returns

-------

Dictionary where key is the name (arbitrary) of the output dataset

and value is the `DatasetTypeDescriptor` instance. Default

implementation uses configuration field name as dictionary key.

Definition at line 237 of file pipelineTask.py.

◆ getPerDatasetTypeDimensions()

|

inherited |

Return any Dimensions that are permitted to have different values

for different DatasetTypes within the same quantum.

Parameters

----------

config : `Config`

Configuration for this task.

Returns

-------

dimensions : `~collections.abc.Set` of `Dimension` or `str`

The dimensions or names thereof that should be considered

per-DatasetType.

Notes

-----

Any Dimension declared to be per-DatasetType by a PipelineTask must

also be declared to be per-DatasetType by other PipelineTasks in the

same Pipeline.

The classic example of a per-DatasetType dimension is the

``CalibrationLabel`` dimension that maps to a validity range for

master calibrations. When running Instrument Signature Removal, one

does not care that different dataset types like flat, bias, and dark

have different validity ranges, as long as those validity ranges all

overlap the relevant observation.

Definition at line 383 of file pipelineTask.py.

◆ getPrerequisiteDatasetTypes()

|

inherited |

Return the local names of input dataset types that should be

assumed to exist instead of constraining what data to process with

this task.

Usually, when running a `PipelineTask`, the presence of input datasets

constrains the processing to be done (as defined by the `QuantumGraph`

generated during "preflight"). "Prerequisites" are special input

datasets that do not constrain that graph, but instead cause a hard

failure when missing. Calibration products and reference catalogs

are examples of dataset types that should usually be marked as

prerequisites.

Parameters

----------

config : `Config`

Configuration for this task. Typically datasets are defined in

a task configuration.

Returns

-------

prerequisite : `~collections.abc.Set` of `str`

The keys in the dictionary returned by `getInputDatasetTypes` that

represent dataset types that should be considered prerequisites.

Names returned here that are not keys in that dictionary are

ignored; that way, if a config option removes an input dataset type

only `getInputDatasetTypes` needs to be updated.

Definition at line 260 of file pipelineTask.py.

◆ getResourceConfig()

|

inherited |

Return resource configuration for this task. Returns ------- Object of type `~config.ResourceConfig` or ``None`` if resource configuration is not defined for this task.

Definition at line 641 of file pipelineTask.py.

◆ getSchemaCatalogs()

|

inherited |

The schema catalogs that will be used by this task.

Returns

-------

schemaCatalogs : `dict`

Dictionary mapping dataset type to schema catalog.

Notes

-----

There is only one schema for each type of forced measurement. The

dataset type for this measurement is defined in the mapper.

Definition at line 370 of file forcedPhotImage.py.

◆ getTaskDict() [1/2]

|

inherited |

Get a dictionary of all tasks as a shallow copy.

Returns

-------

taskDict : `dict`

Dictionary containing full task name: task object for the top-level task and all subtasks,

sub-subtasks, etc..

Definition at line 264 of file task.py.

◆ getTaskDict() [2/2]

|

inherited |

Get a dictionary of all tasks as a shallow copy.

Returns

-------

taskDict : `dict`

Dictionary containing full task name: task object for the top-level task and all subtasks,

sub-subtasks, etc..

Definition at line 264 of file task.py.

◆ makeField() [1/2]

|

inherited |

Make a `lsst.pex.config.ConfigurableField` for this task.

Parameters

----------

doc : `str`

Help text for the field.

Returns

-------

configurableField : `lsst.pex.config.ConfigurableField`

A `~ConfigurableField` for this task.

Examples

--------

Provides a convenient way to specify this task is a subtask of another task.

Here is an example of use::

class OtherTaskConfig(lsst.pex.config.Config)

aSubtask = ATaskClass.makeField("a brief description of what this task does")

Definition at line 329 of file task.py.

◆ makeField() [2/2]

|

inherited |

Make a `lsst.pex.config.ConfigurableField` for this task.

Parameters

----------

doc : `str`

Help text for the field.

Returns

-------

configurableField : `lsst.pex.config.ConfigurableField`

A `~ConfigurableField` for this task.

Examples

--------

Provides a convenient way to specify this task is a subtask of another task.

Here is an example of use::

class OtherTaskConfig(lsst.pex.config.Config)

aSubtask = ATaskClass.makeField("a brief description of what this task does")

Definition at line 329 of file task.py.

◆ makeIdFactory()

| def lsst.meas.base.forcedPhotCcd.ForcedPhotCcdTask.makeIdFactory | ( | self, | |

| dataRef | |||

| ) |

Create an object that generates globally unique source IDs.

Source IDs are created based on a per-CCD ID and the ID of the CCD

itself.

Parameters

----------

dataRef : `lsst.daf.persistence.ButlerDataRef`

Butler data reference. The ``ccdExposureId_bits`` and

``ccdExposureId`` datasets are accessed. The data ID must have the

keys that correspond to ``ccdExposureId``, which are generally the

same as those that correspond to ``calexp`` (``visit``, ``raft``,

``sensor`` for LSST data).

Definition at line 289 of file forcedPhotCcd.py.

◆ makeSubtask() [1/2]

|

inherited |

Create a subtask as a new instance as the ``name`` attribute of this task.

Parameters

----------

name : `str`

Brief name of the subtask.

keyArgs

Extra keyword arguments used to construct the task. The following arguments are automatically

provided and cannot be overridden:

- "config".

- "parentTask".

Notes

-----

The subtask must be defined by ``Task.config.name``, an instance of pex_config ConfigurableField

or RegistryField.

◆ makeSubtask() [2/2]

|

inherited |

Create a subtask as a new instance as the ``name`` attribute of this task.

Parameters

----------

name : `str`

Brief name of the subtask.

keyArgs

Extra keyword arguments used to construct the task. The following arguments are automatically

provided and cannot be overridden:

- "config".

- "parentTask".

Notes

-----

The subtask must be defined by ``Task.config.name``, an instance of pex_config ConfigurableField

or RegistryField.

◆ parseAndRun()

|

inherited |

Parse an argument list and run the command.

Parameters

----------

args : `list`, optional

List of command-line arguments; if `None` use `sys.argv`.

config : `lsst.pex.config.Config`-type, optional

Config for task. If `None` use `Task.ConfigClass`.

log : `lsst.log.Log`-type, optional

Log. If `None` use the default log.

doReturnResults : `bool`, optional

If `True`, return the results of this task. Default is `False`. This is only intended for

unit tests and similar use. It can easily exhaust memory (if the task returns enough data and you

call it enough times) and it will fail when using multiprocessing if the returned data cannot be

pickled.

Returns

-------

struct : `lsst.pipe.base.Struct`

Fields are:

``argumentParser``

the argument parser (`lsst.pipe.base.ArgumentParser`).

``parsedCmd``

the parsed command returned by the argument parser's

`~lsst.pipe.base.ArgumentParser.parse_args` method

(`argparse.Namespace`).

``taskRunner``

the task runner used to run the task (an instance of `Task.RunnerClass`).

``resultList``

results returned by the task runner's ``run`` method, one entry

per invocation (`list`). This will typically be a list of

`Struct`, each containing at least an ``exitStatus`` integer

(0 or 1); see `Task.RunnerClass` (`TaskRunner` by default) for

more details.

Notes

-----

Calling this method with no arguments specified is the standard way to run a command-line task

from the command-line. For an example see ``pipe_tasks`` ``bin/makeSkyMap.py`` or almost any other

file in that directory.

If one or more of the dataIds fails then this routine will exit (with a status giving the

number of failed dataIds) rather than returning this struct; this behaviour can be

overridden by specifying the ``--noExit`` command-line option.

Definition at line 549 of file cmdLineTask.py.

◆ run() [1/2]

|

inherited |

Perform forced measurement on a single exposure.

Parameters

----------

measCat : `lsst.afw.table.SourceCatalog`

The measurement catalog, based on the sources listed in the

reference catalog.

exposure : `lsst.afw.image.Exposure`

The measurement image upon which to perform forced detection.

refCat : `lsst.afw.table.SourceCatalog`

The reference catalog of sources to measure.

refWcs : `lsst.afw.image.SkyWcs`

The WCS for the references.

exposureId : `int`

Optional unique exposureId used for random seed in measurement

task.

Returns

-------

result : `lsst.pipe.base.Struct`

Structure with fields:

``measCat``

Catalog of forced measurement results

(`lsst.afw.table.SourceCatalog`).

Definition at line 263 of file forcedPhotImage.py.

◆ run() [2/2]

|

inherited |

Run task algorithm on in-memory data.

This method should be implemented in a subclass unless tasks overrides

`adaptArgsAndRun` to do something different from its default

implementation. With default implementation of `adaptArgsAndRun` this

method will receive keyword arguments whose names will be the same as

names of configuration fields describing input dataset types. Argument

values will be data objects retrieved from data butler. If a dataset

type is configured with ``scalar`` field set to ``True`` then argument

value will be a single object, otherwise it will be a list of objects.

If the task needs to know its input or output DataIds then it has to

override `adaptArgsAndRun` method instead.

Returns

-------

struct : `Struct`

See description of `adaptArgsAndRun` method.

Examples

--------

Typical implementation of this method may look like::

def run(self, input, calib):

# "input", "calib", and "output" are the names of the config fields

# Assuming that input/calib datasets are `scalar` they are simple objects,

# do something with inputs and calibs, produce output image.

image = self.makeImage(input, calib)

# If output dataset is `scalar` then return object, not list

return Struct(output=image)

Definition at line 469 of file pipelineTask.py.

◆ runDataRef()

|

inherited |

Perform forced measurement on a single exposure.

Parameters

----------

dataRef : `lsst.daf.persistence.ButlerDataRef`

Passed to the ``references`` subtask to obtain the reference WCS,

the ``getExposure`` method (implemented by derived classes) to

read the measurment image, and the ``fetchReferences`` method to

get the exposure and load the reference catalog (see

:lsst-task`lsst.meas.base.references.CoaddSrcReferencesTask`).

Refer to derived class documentation for details of the datasets

and data ID keys which are used.

psfCache : `int`, optional

Size of PSF cache, or `None`. The size of the PSF cache can have

a significant effect upon the runtime for complicated PSF models.

Notes

-----

Sources are generated with ``generateMeasCat`` in the ``measurement``

subtask. These are passed to ``measurement``'s ``run`` method, which

fills the source catalog with the forced measurement results. The

sources are then passed to the ``writeOutputs`` method (implemented by

derived classes) which writes the outputs.

Definition at line 221 of file forcedPhotImage.py.

◆ runQuantum()

|

inherited |

Execute PipelineTask algorithm on single quantum of data.

Typical implementation of this method will use inputs from quantum

to retrieve Python-domain objects from data butler and call

`adaptArgsAndRun` method on that data. On return from

`adaptArgsAndRun` this method will extract data from returned

`Struct` instance and save that data to butler.

The `Struct` returned from `adaptArgsAndRun` is expected to contain

data attributes with the names equal to the names of the

configuration fields defining output dataset types. The values of

the data attributes must be data objects corresponding to

the DataIds of output dataset types. All data objects will be

saved in butler using DataRefs from Quantum's output dictionary.

This method does not return anything to the caller, on errors

corresponding exception is raised.

Parameters

----------

quantum : `Quantum`

Object describing input and output corresponding to this

invocation of PipelineTask instance.

butler : object

Data butler instance.

Raises

------

`ScalarError` if a dataset type is configured as scalar but receives

multiple DataIds in `quantum`. Any exceptions that happen in data

butler or in `adaptArgsAndRun` method.

Definition at line 506 of file pipelineTask.py.

◆ saveStruct()

|

inherited |

Save data in butler.

Convention is that struct returned from ``run()`` method has data

field(s) with the same names as the config fields defining

output DatasetTypes. Subclasses may override this method to implement

different convention for `Struct` content or in case any

post-processing of data may be needed.

Parameters

----------

struct : `Struct`

Data produced by the task packed into `Struct` instance

outputDataRefs : `dict`

Dictionary whose keys are the names of the configuration fields

describing output dataset types and values are lists of DataRefs.

DataRefs must match corresponding data objects in ``struct`` in

number and order.

butler : object

Data butler instance.

Definition at line 607 of file pipelineTask.py.

◆ timer() [1/2]

|

inherited |

Context manager to log performance data for an arbitrary block of code.

Parameters

----------

name : `str`

Name of code being timed; data will be logged using item name: ``Start`` and ``End``.

logLevel

A `lsst.log` level constant.

Examples

--------

Creating a timer context::

with self.timer("someCodeToTime"):

pass # code to time

See also

--------

timer.logInfo

Definition at line 301 of file task.py.

◆ timer() [2/2]

|

inherited |

Context manager to log performance data for an arbitrary block of code.

Parameters

----------

name : `str`

Name of code being timed; data will be logged using item name: ``Start`` and ``End``.

logLevel

A `lsst.log` level constant.

Examples

--------

Creating a timer context::

with self.timer("someCodeToTime"):

pass # code to time

See also

--------

timer.logInfo

Definition at line 301 of file task.py.

◆ writeConfig()

|

inherited |

Write the configuration used for processing the data, or check that an existing

one is equal to the new one if present.

Parameters

----------

butler : `lsst.daf.persistence.Butler`

Data butler used to write the config. The config is written to dataset type

`CmdLineTask._getConfigName`.

clobber : `bool`, optional

A boolean flag that controls what happens if a config already has been saved:

- `True`: overwrite or rename the existing config, depending on ``doBackup``.

- `False`: raise `TaskError` if this config does not match the existing config.

doBackup : bool, optional

Set to `True` to backup the config files if clobbering.

Definition at line 656 of file cmdLineTask.py.

◆ writeMetadata()

|

inherited |

Write the metadata produced from processing the data.

Parameters

----------

dataRef

Butler data reference used to write the metadata.

The metadata is written to dataset type `CmdLineTask._getMetadataName`.

Definition at line 731 of file cmdLineTask.py.

◆ writeOutput()

|

inherited |

Write forced source table

Parameters

----------

dataRef : `lsst.daf.persistence.ButlerDataRef`

Butler data reference. The forced_src dataset (with

self.dataPrefix prepended) is all that will be modified.

sources : `lsst.afw.table.SourceCatalog`

Catalog of sources to save.

Definition at line 357 of file forcedPhotImage.py.

◆ writePackageVersions()

|

inherited |

Compare and write package versions.

Parameters

----------

butler : `lsst.daf.persistence.Butler`

Data butler used to read/write the package versions.

clobber : `bool`, optional

A boolean flag that controls what happens if versions already have been saved:

- `True`: overwrite or rename the existing version info, depending on ``doBackup``.

- `False`: raise `TaskError` if this version info does not match the existing.

doBackup : `bool`, optional

If `True` and clobbering, old package version files are backed up.

dataset : `str`, optional

Name of dataset to read/write.

Raises

------

TaskError

Raised if there is a version mismatch with current and persisted lists of package versions.

Notes

-----

Note that this operation is subject to a race condition.

Definition at line 747 of file cmdLineTask.py.

◆ writeSchemas()

|

inherited |

Write the schemas returned by `lsst.pipe.base.Task.getAllSchemaCatalogs`.

Parameters

----------

butler : `lsst.daf.persistence.Butler`

Data butler used to write the schema. Each schema is written to the dataset type specified as the

key in the dict returned by `~lsst.pipe.base.Task.getAllSchemaCatalogs`.

clobber : `bool`, optional

A boolean flag that controls what happens if a schema already has been saved:

- `True`: overwrite or rename the existing schema, depending on ``doBackup``.

- `False`: raise `TaskError` if this schema does not match the existing schema.

doBackup : `bool`, optional

Set to `True` to backup the schema files if clobbering.

Notes

-----

If ``clobber`` is `False` and an existing schema does not match a current schema,

then some schemas may have been saved successfully and others may not, and there is no easy way to

tell which is which.

Definition at line 696 of file cmdLineTask.py.

Member Data Documentation

◆ canMultiprocess [1/2]

|

staticinherited |

Definition at line 186 of file pipelineTask.py.

◆ canMultiprocess [2/2]

|

staticinherited |

Definition at line 524 of file cmdLineTask.py.

◆ config [1/2]

◆ config [2/2]

◆ ConfigClass

|

static |

Definition at line 203 of file forcedPhotCcd.py.

◆ dataPrefix

|

static |

Definition at line 206 of file forcedPhotCcd.py.

◆ log [1/2]

◆ log [2/2]

◆ metadata [1/2]

◆ metadata [2/2]

◆ RunnerClass

|

static |

Definition at line 204 of file forcedPhotCcd.py.

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/Linux64/meas_base/18.1.0-2-g9c63283+13/python/lsst/meas/base/forcedPhotCcd.py

Generated on Fri Aug 30 2019 07:30:59 for LSSTApplications by

1.8.13

1.8.13