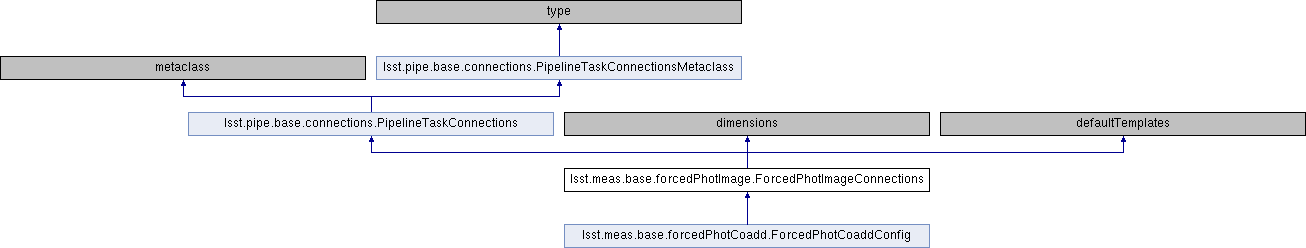

Inheritance diagram for lsst.meas.base.forcedPhotImage.ForcedPhotImageConnections:

Public Member Functions

| Return type | Member |
| --- | --- |
| `typing.Tuple[InputQuantizedConnection, OutputQuantizedConnection]` | `buildDatasetRefs (self, Quantum quantum)` |
| `NamedKeyDict[DatasetType, typing.Set[DatasetRef]]` | `adjustQuantum (self, NamedKeyDict[DatasetType, typing.Set[DatasetRef]] datasetRefMap)` |
| `def` | `__prepare__ (name, bases, **kwargs)` |
| `def` | `__new__ (cls, name, bases, dct, **kwargs)` |

Public Attributes

- inputs
- prerequisiteInputs
- outputs
- initInputs
- initOutputs
- allConnections
- config

Detailed Description

Definition at line 45 of file forcedPhotImage.py.

Member Function Documentation

◆ __new__()

inherited

Definition at line 110 of file connections.py.

◆ __prepare__()

inherited

Definition at line 99 of file connections.py.

◆ adjustQuantum()

inherited

Override to make adjustments to `lsst.daf.butler.DatasetRef` objects in the `lsst.daf.butler.core.Quantum` during the graph generation stage of the activator.

The base class implementation simply checks that input connections with ``multiple`` set to `False` have no more than one dataset.

Parameters
----------
datasetRefMap : `NamedKeyDict`
    Mapping from dataset type to a `set` of `lsst.daf.butler.DatasetRef` objects.

Returns
-------
datasetRefMap : `NamedKeyDict`
    Modified mapping of input with possibly adjusted `lsst.daf.butler.DatasetRef` objects.

Raises
------
ScalarError
    Raised if any `Input` or `PrerequisiteInput` connection has ``multiple`` set to `False`, but multiple datasets.
Exception
    Overrides of this function may raise an exception if a field in the input does not satisfy a need of the corresponding `PipelineTask`, for example when no reference catalogs are found.

Definition at line 459 of file connections.py.
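The behaviour described for `adjustQuantum` can be illustrated with a minimal plain-Python sketch. It is not the code in connections.py: `ScalarError` is a local stand-in for the exception named in the docstring, `BaseConnectionsSketch`, `RefCatConnectionsSketch`, and `multiple_by_type` are hypothetical names, and ordinary dicts, sets, and strings replace `NamedKeyDict`, `DatasetType`, and `DatasetRef`. The dataset type names `exposure` and `refCat` are assumptions chosen only for the example.

```python
from typing import Dict, Set


class ScalarError(Exception):
    """Local stand-in for the ScalarError named in the docstring."""


class BaseConnectionsSketch:
    """Hypothetical stand-in for the base connections class."""

    def __init__(self, multiple_by_type: Dict[str, bool]) -> None:
        # Maps a dataset type name to its connection's ``multiple`` flag.
        self.multiple_by_type = multiple_by_type

    def adjustQuantum(self, datasetRefMap: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
        for dataset_type, refs in datasetRefMap.items():
            # Types with no declared flag are treated as scalar (an assumption).
            is_multiple = self.multiple_by_type.get(dataset_type, False)
            if not is_multiple and len(refs) > 1:
                raise ScalarError(
                    f"Connection for {dataset_type!r} has multiple=False "
                    f"but {len(refs)} datasets were found."
                )
        return datasetRefMap


class RefCatConnectionsSketch(BaseConnectionsSketch):
    """Hypothetical override that also requires at least one reference catalog."""

    def adjustQuantum(self, datasetRefMap: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
        # Run the base-class scalar check first, then the task-specific one.
        datasetRefMap = super().adjustQuantum(datasetRefMap)
        if not datasetRefMap.get("refCat"):
            raise RuntimeError("No reference catalogs found for this quantum.")
        return datasetRefMap


connections = RefCatConnectionsSketch({"exposure": False, "refCat": True})
adjusted = connections.adjustQuantum(
    {"exposure": {"visit=1"}, "refCat": {"shard=10", "shard=11"}}
)
```

Calling `super().adjustQuantum` first keeps the scalar check in one place, so an override only adds its own requirement on top.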


◆ buildDatasetRefs()

inherited

Builds QuantizedConnections corresponding to input Quantum.

Parameters
----------
quantum : `lsst.daf.butler.Quantum`
    Quantum object which defines the inputs and outputs for a given unit of processing.

Returns
-------
retVal : `tuple` of (`InputQuantizedConnection`, `OutputQuantizedConnection`)
    Namespaces mapping attribute names (identifiers of connections) to butler references defined in the input `lsst.daf.butler.Quantum`.

Definition at line 392 of file connections.py.
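The return value described for `buildDatasetRefs` is a pair of namespaces keyed by connection attribute name. The following is a rough sketch of that shape only, under several assumptions: `build_dataset_refs_sketch` and `attribute_for_type` are hypothetical names, `types.SimpleNamespace` stands in for `InputQuantizedConnection` and `OutputQuantizedConnection`, plain strings stand in for butler references, and the dataset type and attribute names are invented for illustration.

```python
from types import SimpleNamespace
from typing import Dict, List, Tuple


def build_dataset_refs_sketch(
    quantum_inputs: Dict[str, List[str]],
    quantum_outputs: Dict[str, List[str]],
    attribute_for_type: Dict[str, str],
) -> Tuple[SimpleNamespace, SimpleNamespace]:
    """Group references by the connection attribute that uses each dataset type."""
    input_refs = SimpleNamespace()
    output_refs = SimpleNamespace()
    pairs = ((input_refs, quantum_inputs), (output_refs, quantum_outputs))
    for namespace, datasets in pairs:
        for dataset_type, refs in datasets.items():
            # Look up which connection attribute is bound to this dataset type.
            attribute_name = attribute_for_type[dataset_type]
            setattr(namespace, attribute_name, list(refs))
    return input_refs, output_refs


input_refs, output_refs = build_dataset_refs_sketch(
    quantum_inputs={"calexp": ["calexp@visit=1"]},
    quantum_outputs={"forced_src": ["forced_src@visit=1"]},
    attribute_for_type={"calexp": "exposure", "forced_src": "measCat"},
)
print(input_refs.exposure, output_refs.measCat)
```

Task code can then read references by attribute (for example `input_refs.exposure`) without needing to know the underlying dataset type name.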

Member Data Documentation

◆ allConnections

inherited

Definition at line 370 of file connections.py.

◆ config

inherited

Definition at line 375 of file connections.py.

◆ initInputs

inherited

Definition at line 368 of file connections.py.

◆ initOutputs

inherited

Definition at line 369 of file connections.py.

◆ inputs

inherited

Definition at line 365 of file connections.py.

◆ outputs

inherited

Definition at line 367 of file connections.py.

◆ prerequisiteInputs

inherited

Definition at line 366 of file connections.py.

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/0.4.1/Linux64/meas_base/21.0.0-5-g073e055+57e5e98977/python/lsst/meas/base/forcedPhotImage.py