Public Member Functions

| Return type | Member |
|---|---|
| def | `__init__(self, **kwds)` |
| bool | `isDatasetTypeSpecial(self, str datasetTypeName)` |
| List[str] | `getSpecialDirectories(self)` |
| Tuple[Optional[BaseSkyMap], Optional[str]] | `findMatchingSkyMap(self, str datasetTypeName)` |
| def | `runRawIngest(self, pool=None)` |
| def | `runDefineVisits(self, pool=None)` |
| def | `prep(self)` |
| Iterator[FileDataset] | `iterDatasets(self)` |
| str | `getRun(self, str datasetTypeName, Optional[str] calibDate=None)` |
| Iterator[Tuple[str, CameraMapperMapping]] | `iterMappings(self)` |
| RepoWalker.Target | `makeRepoWalkerTarget(self, str datasetTypeName, str template, Dict[str, type] keys, StorageClass storageClass, FormatterParameter formatter=None, Optional[PathElementHandler] targetHandler=None)` |
| List[str] | `getCollectionChain(self)` |
| def | `findDatasets(self)` |
| def | `expandDataIds(self)` |
| def | `ingest(self)` |
| None | `finish(self)` |

Public Attributes

| Attribute |
|---|
| `butler2` |
| `mapper` |
| `task` |
| `root` |
| `instrument` |
| `subset` |

Detailed Description

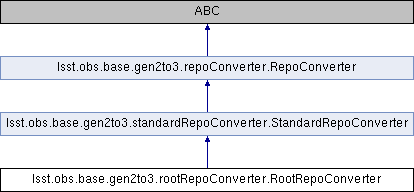

A specialization of `RepoConverter` for root data repositories.

`RootRepoConverter` adds support for raw images (mostly delegated to the parent task's `RawIngestTask` subtask) and reference catalogs.

Parameters
----------
kwds
    Keyword arguments are forwarded to (and required by) `RepoConverter`.

Definition at line 63 of file rootRepoConverter.py.
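The keyword-forwarding pattern described above can be sketched as follows. This is a minimal illustration under stated assumptions: the class names and the `task`, `root`, `instrument`, and `subset` keywords are hypothetical (chosen to mirror the public attributes listed on this page), and the real `RepoConverter` constructor signature may differ.

```python
class RepoConverterSketch:
    """Hypothetical stand-in for the base class; not the real constructor."""

    def __init__(self, *, task, root, instrument, subset=None):
        self.task = task
        self.root = root
        self.instrument = instrument
        self.subset = subset


class RootRepoConverterSketch(RepoConverterSketch):
    """Hypothetical subclass that forwards all keywords to its base."""

    def __init__(self, **kwds):
        # Forward every keyword unchanged; the base class decides which
        # ones are required, so a missing keyword raises TypeError there.
        super().__init__(**kwds)
        # Subclass-specific state is set up only after the base class.
        self.specialDatasets = []
```

Because the subclass never names the keywords itself, adding a required argument to the base class does not require touching the subclass constructor.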

Constructor & Destructor Documentation

◆ __init__()

`def lsst.obs.base.gen2to3.rootRepoConverter.RootRepoConverter.__init__(self, **kwds)`

Reimplemented from lsst.obs.base.gen2to3.standardRepoConverter.StandardRepoConverter.

Definition at line 75 of file rootRepoConverter.py.

Member Function Documentation

◆ expandDataIds()

(inherited)

Expand the data IDs for all datasets to be inserted. Subclasses may override this method, but must delegate to the base class implementation if they do. This involves queries to the registry, but not writes. It is guaranteed to be called between `findDatasets` and `ingest`.

Definition at line 441 of file repoConverter.py.
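The ordering guarantee (`findDatasets`, then `expandDataIds`, then `ingest`) can be illustrated with a minimal sketch. It assumes a hypothetical driver, `run_conversion`, that runs each step for every converter before moving to the next; the real `ConvertRepoTask` may schedule the steps differently, and `LifecycleSketch` only records which methods were called.

```python
class LifecycleSketch:
    """Hypothetical converter that records the order of its lifecycle calls."""

    def __init__(self):
        self.calls = []

    def prep(self):
        self.calls.append("prep")

    def findDatasets(self):
        self.calls.append("findDatasets")

    def expandDataIds(self):
        # Registry reads only; no writes happen in this step.
        self.calls.append("expandDataIds")

    def ingest(self):
        self.calls.append("ingest")

    def finish(self):
        self.calls.append("finish")


def run_conversion(converters):
    """Hypothetical driver: run each step on all converters, in order."""
    for step in ("prep", "findDatasets", "expandDataIds", "ingest", "finish"):
        for converter in converters:
            getattr(converter, step)()
```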

◆ findDatasets()

(inherited)

Definition at line 424 of file repoConverter.py.

◆ findMatchingSkyMap()

`Tuple[Optional[BaseSkyMap], Optional[str]] lsst.obs.base.gen2to3.rootRepoConverter.RootRepoConverter.findMatchingSkyMap(self, str datasetTypeName)`

Return the appropriate SkyMap for the given dataset type.

Parameters
----------
datasetTypeName : `str`
    Name of the dataset type for which a skymap is sought.

Returns
-------
skyMap : `BaseSkyMap` or `None`
    The `BaseSkyMap` instance, or `None` if there was no match.
skyMapName : `str` or `None`
    The Gen3 name for the SkyMap, or `None` if there was no match.

Reimplemented from lsst.obs.base.gen2to3.standardRepoConverter.StandardRepoConverter.

Definition at line 97 of file rootRepoConverter.py.
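The two-value return convention (both values set on a match, both `None` otherwise) can be sketched with a simple lookup table. `SkyMapStub`, `find_matching_skymap`, and the skymap name `"hsc_rings_v1"` are hypothetical; the real method decides matches from the converter's own configuration, not from a dictionary passed in.

```python
from typing import Dict, Optional, Tuple


class SkyMapStub:
    """Hypothetical stand-in for BaseSkyMap."""

    def __init__(self, label: str):
        self.label = label


def find_matching_skymap(
    datasetTypeName: str,
    skymaps_by_dataset: Dict[str, Tuple[SkyMapStub, str]],
) -> Tuple[Optional[SkyMapStub], Optional[str]]:
    """Return (skyMap, skyMapName), or (None, None) when nothing matches."""
    match = skymaps_by_dataset.get(datasetTypeName)
    if match is None:
        return None, None
    skyMap, skyMapName = match
    return skyMap, skyMapName
```

Returning `(None, None)` rather than raising lets callers unpack the result unconditionally and test either value for `None`.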

◆ finish()

(inherited)

Finish conversion of a repository. This is run after `ingest`, and delegates to `_finish`, which should be overridden by derived classes instead of this method.

Definition at line 511 of file repoConverter.py.
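The `finish`/`_finish` split is a template-method arrangement: the public method stays fixed and derived classes override only the hook. A minimal sketch, with hypothetical class names; the "shared bookkeeping" step stands in for whatever the real base class does before delegating.

```python
class RepoConverterBaseSketch:
    """Hypothetical base class illustrating the finish/_finish split."""

    def __init__(self):
        self.log = []

    def finish(self) -> None:
        # Public entry point: (hypothetical) shared bookkeeping, then the hook.
        self.log.append("base finish")
        self._finish()

    def _finish(self) -> None:
        # Default hook does nothing; derived classes override this instead.
        pass


class DerivedSketch(RepoConverterBaseSketch):
    def _finish(self) -> None:
        self.log.append("derived cleanup")
```

Overriding `_finish` rather than `finish` means a derived class cannot accidentally skip the base-class steps.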

◆ getCollectionChain()

(inherited)

Return run names that can be used to construct a chained collection that refers to the converted repository (`list` [ `str` ]).

Definition at line 207 of file standardRepoConverter.py.

◆ getRun()

`str lsst.obs.base.gen2to3.rootRepoConverter.RootRepoConverter.getRun(self, str datasetTypeName, Optional[str] calibDate=None)`

Return the name of the run into which instances of the given dataset type in this collection should be inserted.

Parameters
----------
datasetTypeName : `str`
    Name of the dataset type.
calibDate : `str`, optional
    If not `None`, the "CALIBDATE" associated with this (calibration) dataset in the Gen2 data repository.

Returns
-------
run : `str`
    Name of the `~lsst.daf.butler.CollectionType.RUN` collection.

Reimplemented from lsst.obs.base.gen2to3.standardRepoConverter.StandardRepoConverter.

Definition at line 186 of file rootRepoConverter.py.

◆ getSpecialDirectories()

`List[str] lsst.obs.base.gen2to3.rootRepoConverter.RootRepoConverter.getSpecialDirectories(self)`

Return a list of directory paths that should not be searched for files.

These may be directories that simply do not contain datasets (or contain datasets in another repository), or directories whose datasets are handled specially by a subclass.

Returns
-------
directories : `list` [`str`]
    Paths of the directories to skip, relative to the repository root.

Reimplemented from lsst.obs.base.gen2to3.repoConverter.RepoConverter.

Definition at line 93 of file rootRepoConverter.py.
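How a list of root-relative directories can be used to prune a filesystem walk is sketched below with the standard library's `os.walk`, whose `dirnames` list may be modified in place to stop descent. `walk_skipping` is a hypothetical helper; the real converter walks the repository through its own `RepoWalker` machinery.

```python
import os
from typing import Iterable, List


def walk_skipping(root: str, special: Iterable[str]) -> List[str]:
    """Return file paths under ``root`` (relative to it), pruning special dirs.

    ``special`` holds directory paths relative to ``root``, matching what
    getSpecialDirectories is documented to return.
    """
    skip = {os.path.normpath(p) for p in special}
    found = []
    for dirpath, dirnames, filenames in os.walk(root):
        rel_dir = os.path.relpath(dirpath, root)
        # Modify dirnames in place so os.walk never descends into skipped dirs.
        dirnames[:] = [
            d for d in dirnames
            if os.path.normpath(os.path.join(rel_dir, d)) not in skip
        ]
        for name in filenames:
            found.append(os.path.normpath(os.path.join(rel_dir, name)))
    return sorted(found)
```

Pruning `dirnames` (rather than filtering results afterwards) avoids reading the skipped trees at all, which matters for large raw or rerun directories.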

◆ ingest()

(inherited)

Insert converted datasets into the Gen3 repository. Subclasses may override this method, but must delegate to the base class implementation at some point in their own logic. This method is guaranteed to be called after `expandDataIds`.

Definition at line 483 of file repoConverter.py.

◆ isDatasetTypeSpecial()

`bool lsst.obs.base.gen2to3.rootRepoConverter.RootRepoConverter.isDatasetTypeSpecial(self, str datasetTypeName)`

Test whether the given dataset type is handled specially by this converter and hence should be ignored by generic base-class logic that searches for dataset types to convert.

Parameters
----------
datasetTypeName : `str`
    Name of the dataset type to test.

Returns
-------
special : `bool`
    `True` if the dataset type is special.

Reimplemented from lsst.obs.base.gen2to3.standardRepoConverter.StandardRepoConverter.

Definition at line 84 of file rootRepoConverter.py.
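A subclass override typically adds its own special dataset types and defers to the base class for the rest. The sketch below assumes hypothetical class names and a hypothetical `SPECIAL` set whose entries (`"raw"`, `"ref_cat"`) only echo the class description (raw images and reference catalogs); the real names may differ.

```python
class StandardConverterSketch:
    """Hypothetical base: treats no dataset types as special."""

    def isDatasetTypeSpecial(self, datasetTypeName: str) -> bool:
        return False


class RootConverterSketch(StandardConverterSketch):
    # Hypothetical dataset types this subclass handles itself.
    SPECIAL = {"raw", "ref_cat"}

    def isDatasetTypeSpecial(self, datasetTypeName: str) -> bool:
        # Special if this subclass handles it, or if the base class says so.
        return (datasetTypeName in self.SPECIAL
                or super().isDatasetTypeSpecial(datasetTypeName))
```

Generic base-class logic can then filter candidates with `[t for t in names if not conv.isDatasetTypeSpecial(t)]`.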

◆ iterDatasets()

`Iterator[FileDataset] lsst.obs.base.gen2to3.rootRepoConverter.RootRepoConverter.iterDatasets(self)`

Iterate over datasets in the repository that should be ingested into the Gen3 repository.

The base class implementation yields nothing; the datasets handled by the `RepoConverter` base class itself are read directly in `findDatasets`.

Subclasses should override this method if they support additional datasets that are handled some other way.

Yields
------
dataset : `FileDataset`
    Structures representing datasets to be ingested. Paths should be absolute.

Reimplemented from lsst.obs.base.gen2to3.standardRepoConverter.StandardRepoConverter.

Definition at line 163 of file rootRepoConverter.py.
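The override contract (base yields nothing, subclasses yield extra datasets with absolute paths) can be sketched as a pair of generators. `FileDatasetStub` is a hypothetical stand-in for `FileDataset` carrying only a path, and the class names and `extra_files` argument are illustrative assumptions.

```python
import os
from dataclasses import dataclass
from typing import Iterator, List


@dataclass
class FileDatasetStub:
    """Hypothetical stand-in for FileDataset (path only)."""
    path: str


class BaseConverterSketch:
    def iterDatasets(self) -> Iterator[FileDatasetStub]:
        # Base class yields nothing; its datasets are found by findDatasets.
        yield from ()


class ExtraDatasetsConverterSketch(BaseConverterSketch):
    def __init__(self, root: str, extra_files: List[str]):
        self.root = root
        self.extra_files = extra_files  # paths relative to root

    def iterDatasets(self) -> Iterator[FileDatasetStub]:
        yield from super().iterDatasets()
        for rel in self.extra_files:
            # Datasets handed to ingestion should carry absolute paths.
            yield FileDatasetStub(path=os.path.abspath(os.path.join(self.root, rel)))
```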

◆ iterMappings()

(inherited)

Iterate over all `CameraMapper` `Mapping` objects that should be considered for conversion by this repository.

This should include any datasets that may appear in the repository, including those that are special (see `isDatasetTypeSpecial`) and those that are being ignored (see `ConvertRepoTask.isDatasetTypeIncluded`); this allows the converter to identify and hence skip these datasets quietly instead of warning about them as unrecognized.

Yields
------
datasetTypeName : `str`
    Name of the dataset type.
mapping : `lsst.obs.base.mapping.Mapping`
    Mapping object used by the Gen2 `CameraMapper` to describe the dataset type.

Reimplemented from lsst.obs.base.gen2to3.repoConverter.RepoConverter.

Definition at line 124 of file standardRepoConverter.py.
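The reason to include special and ignored dataset types in the mappings is shown in the minimal sketch below: only names absent from the mappings produce a warning, while known-but-skipped names are dropped quietly. `classify` and its callable parameters are hypothetical; the `mappings` dictionary plays the role of what `iterMappings` yields.

```python
import logging
from typing import Callable, Dict, Iterable, List

log = logging.getLogger("convert-sketch")


def classify(
    found: Iterable[str],
    mappings: Dict[str, object],
    is_special: Callable[[str], bool],
    is_included: Callable[[str], bool],
) -> List[str]:
    """Return dataset types to convert; warn only about unknown ones."""
    to_convert = []
    for name in found:
        if name not in mappings:
            log.warning("Unrecognized dataset type %r; skipping.", name)
        elif is_special(name) or not is_included(name):
            continue  # known, but handled elsewhere or excluded: skip quietly
        else:
            to_convert.append(name)
    return to_convert
```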

◆ makeRepoWalkerTarget()

(inherited)

Make a struct that identifies a dataset type to be extracted by walking the repo directory structure.

Parameters
----------
datasetTypeName : `str`
    Name of the dataset type (the same in both Gen2 and Gen3).
template : `str`
    The full Gen2 filename template.
keys : `dict` [`str`, `type`]
    A dictionary mapping Gen2 data ID key to the type of its value.
storageClass : `lsst.daf.butler.StorageClass`
    Gen3 storage class for this dataset type.
formatter : `lsst.daf.butler.Formatter` or `str`, optional
    A Gen3 formatter class or fully-qualified name.
targetHandler : `PathElementHandler`, optional
    Specialist target handler to use for this dataset type.

Returns
-------
target : `RepoWalker.Target`
    A struct containing information about the target dataset (much of it simply forwarded from the arguments).

Reimplemented from lsst.obs.base.gen2to3.repoConverter.RepoConverter.

Definition at line 167 of file standardRepoConverter.py.
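Since the returned struct mostly forwards its arguments, a frozen dataclass is a reasonable mental model. `TargetSketch` and `make_target` are hypothetical stand-ins for `RepoWalker.Target` and this method; the storage class is passed as a plain string here, and the template is an illustrative Gen2-style `%`-format string, not one taken from a real mapper.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional


@dataclass(frozen=True)
class TargetSketch:
    """Hypothetical stand-in for RepoWalker.Target."""
    datasetTypeName: str
    template: str
    keys: Dict[str, type]
    storageClass: Any
    formatter: Optional[Any] = None
    targetHandler: Optional[Any] = None


def make_target(datasetTypeName: str, template: str, keys: Dict[str, type],
                storageClass: Any, formatter: Optional[Any] = None,
                targetHandler: Optional[Any] = None) -> TargetSketch:
    # Most fields are forwarded directly from the arguments.
    return TargetSketch(datasetTypeName, template, keys, storageClass,
                        formatter, targetHandler)
```

The `keys` mapping records the value type of each placeholder in the template, which is what lets a walker parse a filename back into a typed data ID.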

◆ prep()

`def lsst.obs.base.gen2to3.rootRepoConverter.RootRepoConverter.prep(self)`

Perform preparatory work associated with the dataset types to be converted from this repository (but not the datasets themselves).

Notes
-----
This should be a relatively fast operation that should not depend on the size of the repository.

Subclasses may override this method, but must delegate to the base class implementation at some point in their own logic. More often, subclasses will specialize the behavior of `prep` by overriding other methods to which the base class implementation delegates. These include:

- `iterMappings`
- `isDatasetTypeSpecial`
- `getSpecialDirectories`
- `makeRepoWalkerTarget`

This should not perform any write operations to the Gen3 repository. It is guaranteed to be called before `ingest`.

Reimplemented from lsst.obs.base.gen2to3.standardRepoConverter.StandardRepoConverter.

Definition at line 133 of file rootRepoConverter.py.

◆ runDefineVisits()

`def lsst.obs.base.gen2to3.rootRepoConverter.RootRepoConverter.runDefineVisits(self, pool=None)`

Definition at line 125 of file rootRepoConverter.py.

◆ runRawIngest()

`def lsst.obs.base.gen2to3.rootRepoConverter.RootRepoConverter.runRawIngest(self, pool=None)`

Definition at line 109 of file rootRepoConverter.py.

Member Data Documentation

◆ butler2

(inherited)

Definition at line 90 of file standardRepoConverter.py.

◆ instrument

(inherited)

Definition at line 213 of file repoConverter.py.

◆ mapper

(inherited)

Definition at line 91 of file standardRepoConverter.py.

◆ root

(inherited)

Definition at line 212 of file repoConverter.py.

◆ subset

(inherited)

Definition at line 214 of file repoConverter.py.

◆ task

(inherited)

Definition at line 211 of file repoConverter.py.

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/0.2.1/Linux64/obs_base/21.0.0-27-gbbd0d29+ae871e0f33/python/lsst/obs/base/gen2to3/rootRepoConverter.py