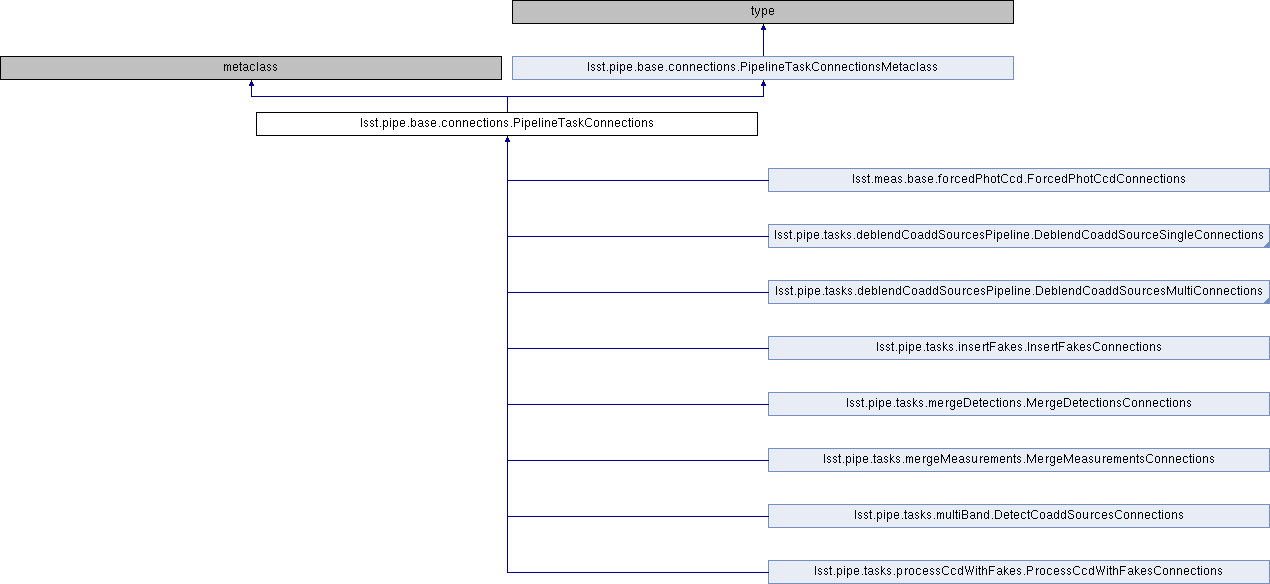

Inheritance diagram for lsst.pipe.base.connections.PipelineTaskConnections:

Public Member Functions

| Return type | Member |
| --- | --- |
| def | `__init__ (self, *, 'PipelineTaskConfig' config=None)` |
| typing.Tuple[InputQuantizedConnection, OutputQuantizedConnection] | `buildDatasetRefs (self, Quantum quantum)` |
| NamedKeyDict[DatasetType, typing.Set[DatasetRef]] | `adjustQuantum (self, NamedKeyDict[DatasetType, typing.Set[DatasetRef]] datasetRefMap)` |
| def | `__prepare__ (name, bases, **kwargs)` |
| def | `__new__ (cls, name, bases, dct, **kwargs)` |

Public Attributes

- inputs
- prerequisiteInputs
- outputs
- initInputs
- initOutputs
- allConnections
- config

Detailed Description

PipelineTaskConnections is a class used to declare desired IO when a

PipelineTask is run by an activator.

Parameters

----------

config : `PipelineTaskConfig`

A `PipelineTaskConfig` class instance whose class has been configured

to use this `PipelineTaskConnections` class.

Notes

-----

``PipelineTaskConnections`` classes are created by declaring class

attributes of types defined in `lsst.pipe.base.connectionTypes`. The

available types are:

* ``InitInput`` - Defines connections in a quantum graph which are used as

inputs to the ``__init__`` function of the `PipelineTask` corresponding

to this class.

* ``InitOutput`` - Defines connections in a quantum graph which are to be

persisted using a butler at the end of the ``__init__`` function of the

`PipelineTask` corresponding to this class. The variable name used to

define this connection should be the same as an attribute name on the

`PipelineTask` instance. E.g. if an ``InitOutput`` is declared with

the name ``outputSchema`` in a ``PipelineTaskConnections`` class, then

a `PipelineTask` instance should have an attribute

``self.outputSchema`` defined. Its value is what will be saved by the

activator framework.

* ``PrerequisiteInput`` - An input connection type that defines a

`lsst.daf.butler.DatasetType` that must be present at execution time,

but that will not be used during the course of creating the quantum

graph to be executed. These most often are things produced outside the

processing pipeline, such as reference catalogs.

* ``Input`` - Input `lsst.daf.butler.DatasetType` objects that will be used

in the ``run`` method of a `PipelineTask`. The name used to declare the

class attribute must match a function argument name in the ``run``

method of a `PipelineTask`. E.g. if the ``PipelineTaskConnections``

defines an ``Input`` with the name ``calexp``, then the corresponding

signature should be ``PipelineTask.run(calexp, ...)``.

* ``Output`` - A `lsst.daf.butler.DatasetType` that will be produced by an

execution of a `PipelineTask`. The name used to declare the connection

must correspond to an attribute of a `Struct` that is returned by a

`PipelineTask` ``run`` method. E.g. if an output connection is

defined with the name ``measCat``, then the corresponding

``PipelineTask.run`` method must return ``Struct(measCat=X,..)`` where

X matches the ``storageClass`` type defined on the output connection.
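
The naming rules for ``Input`` and ``Output`` connections can be illustrated without the LSST stack. The following is a minimal sketch under stated assumptions: ``SimpleNamespace`` stands in for `Struct`, the name sets stand in for a connections class, and ``check_names`` is a hypothetical helper that is not part of `lsst.pipe.base`.

```python
import inspect
from types import SimpleNamespace

# Hypothetical stand-ins for the names a connections class might declare.
INPUT_NAMES = {"calexp"}
OUTPUT_NAMES = {"measCat"}


def run(calexp):
    """Toy ``run`` method whose argument name matches the ``Input`` name."""
    # SimpleNamespace is used here in place of lsst.pipe.base.Struct.
    return SimpleNamespace(measCat=[f"source measured on {calexp}"])


def check_names(func, input_names, output_names, **kwargs):
    """Return input names missing from the signature and output names
    missing from the returned object."""
    params = set(inspect.signature(func).parameters)
    missing_inputs = input_names - params
    result = func(**kwargs)
    missing_outputs = {name for name in output_names if not hasattr(result, name)}
    return missing_inputs, missing_outputs


missing_in, missing_out = check_names(
    run, INPUT_NAMES, OUTPUT_NAMES, calexp="exposure-1"
)
print(missing_in, missing_out)
```

Both returned sets are empty when every ``Input`` name is a ``run`` argument and every ``Output`` name is an attribute of the returned object.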

The process of declaring a ``PipelineTaskConnections`` class involves

parameters passed as keyword arguments in the class declaration statement.

The first parameter is ``dimensions`` which is an iterable of strings which

defines the unit of processing the run method of a corresponding

`PipelineTask` will operate on. These dimensions must match dimensions that

exist in the butler registry which will be used in executing the

corresponding `PipelineTask`.
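
Parameters given in a class declaration statement are ordinary Python class keyword arguments, which a metaclass receives in ``__prepare__`` and ``__new__``. The sketch below assumes a toy metaclass to show how a ``dimensions`` keyword might be captured. ``ToyConnectionsMeta`` and ``ToyConnections`` are hypothetical names, and the real implementation in `lsst.pipe.base` may differ.

```python
class ToyConnectionsMeta(type):
    """Hypothetical metaclass accepting keywords in the class statement."""

    @classmethod
    def __prepare__(mcs, name, bases, **kwargs):
        # Called first to create the class namespace; the keywords arrive
        # here as well and are simply ignored in this sketch.
        return super().__prepare__(name, bases)

    def __new__(mcs, name, bases, dct, **kwargs):
        # Store the declared dimensions as a class attribute.
        if "dimensions" in kwargs:
            dct["dimensions"] = frozenset(kwargs["dimensions"])
        return super().__new__(mcs, name, bases, dct)

    def __init__(cls, name, bases, dct, **kwargs):
        # Drop the extra keywords before delegating to ``type.__init__``.
        super().__init__(name, bases, dct)


class ToyConnections(metaclass=ToyConnectionsMeta, dimensions=("A", "B")):
    """Toy class declared with a ``dimensions`` keyword argument."""


print(sorted(ToyConnections.dimensions))
```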

The second parameter is labeled ``defaultTemplates`` and is conditionally

optional. The name attributes of connections can be specified as python

format strings, with named format arguments. If any of the name parameters

on connections defined in a `PipelineTaskConnections` class contain a

template, then a default template value must be specified in the

``defaultTemplates`` argument. This is done by passing a dictionary with

keys corresponding to a template identifier, and values corresponding to

the value to use as a default when formatting the string. For example, if

``ConnectionClass.calexp.name = '{input}Coadd_calexp'`` then

``defaultTemplates = {'input': 'deep'}``.
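
Template substitution of this kind can be reproduced with plain Python string formatting. This is a sketch of the idea only, not the library's own code path, and the override value ``goodSeeing`` is a hypothetical choice made for illustration.

```python
# Connection name written as a format string with a named template field.
name_template = "{input}Coadd_calexp"

# Defaults of the kind declared through ``defaultTemplates``.
default_templates = {"input": "deep"}

# With only the defaults, the name resolves to the default dataset type name.
default_name = name_template.format(**default_templates)

# A user override (hypothetical value) replaces the default before formatting.
overrides = {"input": "goodSeeing"}
overridden_name = name_template.format(**{**default_templates, **overrides})

print(default_name)     # deepCoadd_calexp
print(overridden_name)  # goodSeeingCoadd_calexp
```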

Once a `PipelineTaskConnections` class is created, it is used in the

creation of a `PipelineTaskConfig`. This is further documented in the

documentation of `PipelineTaskConfig`. For the purposes of this

documentation, the relevant information is that the config class allows

configuration of connection names by users when running a pipeline.

Instances of a `PipelineTaskConnections` class are used by the pipeline

task execution framework to introspect what a corresponding `PipelineTask`

will require, and what it will produce.

Examples

--------

>>> from lsst.pipe.base import connectionTypes as cT
>>> from lsst.pipe.base import PipelineTaskConnections
>>> from lsst.pipe.base import PipelineTaskConfig
>>> class ExampleConnections(PipelineTaskConnections,
...                          dimensions=("A", "B"),
...                          defaultTemplates={"foo": "Example"}):
...     inputConnection = cT.Input(doc="Example input",
...                                dimensions=("A", "B"),
...                                storageClass="Exposure",
...                                name="{foo}Dataset")
...     outputConnection = cT.Output(doc="Example output",
...                                  dimensions=("A", "B"),
...                                  storageClass="Exposure",
...                                  name="{foo}output")
>>> class ExampleConfig(PipelineTaskConfig,
...                     pipelineConnections=ExampleConnections):
...     pass
>>> config = ExampleConfig()
>>> config.connections.foo = "Modified"
>>> config.connections.outputConnection = "TotallyDifferent"
>>> connections = ExampleConnections(config=config)
>>> assert connections.inputConnection.name == "ModifiedDataset"
>>> assert connections.outputConnection.name == "TotallyDifferent"

Definition at line 260 of file connections.py.

Constructor & Destructor Documentation

◆ __init__()

`def lsst.pipe.base.connections.PipelineTaskConnections.__init__ (self, *, 'PipelineTaskConfig' config = None)`

Definition at line 364 of file connections.py.


Member Function Documentation

◆ __new__()

inherited

Definition at line 110 of file connections.py.

◆ __prepare__()

inherited

Definition at line 99 of file connections.py.

◆ adjustQuantum()

`NamedKeyDict[DatasetType, typing.Set[DatasetRef]] lsst.pipe.base.connections.PipelineTaskConnections.adjustQuantum (self, NamedKeyDict[DatasetType, typing.Set[DatasetRef]] datasetRefMap)`

Override to make adjustments to `lsst.daf.butler.DatasetRef` objects

in the `lsst.daf.butler.core.Quantum` during the graph generation stage

of the activator.

The base class implementation simply checks that input connections with

``multiple`` set to `False` have no more than one dataset.

Parameters

----------

datasetRefMap : `NamedKeyDict`

Mapping from dataset type to a `set` of

`lsst.daf.butler.DatasetRef` objects

Returns

-------

datasetRefMap : `NamedKeyDict`

Modified mapping of input with possibly adjusted

`lsst.daf.butler.DatasetRef` objects.

Raises

------

ScalarError

Raised if any `Input` or `PrerequisiteInput` connection has

``multiple`` set to `False`, but multiple datasets.

Exception

Overrides of this function have the option of raising an Exception

if a field in the input does not satisfy a need for a corresponding

`PipelineTask`, e.g. no reference catalogs are found.
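
The check described for the base class implementation can be sketched in isolation. The version below is a simplified illustration under stated assumptions: plain strings stand in for `DatasetType` keys and `DatasetRef` values, the local ``ScalarError`` is a stand-in for the library's exception, and ``check_scalar_connections`` is a hypothetical helper name.

```python
class ScalarError(Exception):
    """Stand-in for the exception of the same name raised by the library."""


def check_scalar_connections(dataset_ref_map, multiple_by_name):
    """Raise if a connection with ``multiple=False`` has more than one dataset.

    ``dataset_ref_map`` maps a dataset type name to a set of references;
    ``multiple_by_name`` maps the same names to the ``multiple`` flag.
    Names absent from ``multiple_by_name`` are treated as ``multiple=False``.
    """
    for name, refs in dataset_ref_map.items():
        if not multiple_by_name.get(name, False) and len(refs) > 1:
            raise ScalarError(
                f"Connection for '{name}' has multiple=False "
                f"but {len(refs)} datasets."
            )
    # This sketch returns the mapping unchanged; an override could adjust it.
    return dataset_ref_map


flags = {"calexp": False, "refCat": True}
refs = {"calexp": {"ref-1"}, "refCat": {"shard-1", "shard-2"}}
adjusted = check_scalar_connections(refs, flags)

try:
    check_scalar_connections({"calexp": {"ref-1", "ref-2"}}, flags)
except ScalarError as err:
    error_message = str(err)
```

An override would typically call the base check first and then filter or raise according to the needs of its own task.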

Definition at line 459 of file connections.py.


◆ buildDatasetRefs()

`typing.Tuple[InputQuantizedConnection, OutputQuantizedConnection] lsst.pipe.base.connections.PipelineTaskConnections.buildDatasetRefs (self, Quantum quantum)`

Builds QuantizedConnections corresponding to the input Quantum.

Parameters

----------

quantum : `lsst.daf.butler.Quantum`

Quantum object which defines the inputs and outputs for a given

unit of processing

Returns

-------

retVal : `tuple` of (`InputQuantizedConnection`, `OutputQuantizedConnection`)

Namespaces mapping attribute names (identifiers of connections) to

butler references defined in the input `lsst.daf.butler.Quantum`.
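
The shape of the returned pair can be pictured with a small stand-in. This is only a sketch under stated assumptions: ``SimpleNamespace`` plays the role of the quantized connection objects, plain dictionaries and strings replace the `Quantum` and its references, and ``build_dataset_refs`` is a hypothetical function rather than the library method.

```python
from types import SimpleNamespace


def build_dataset_refs(connection_names, quantum_inputs, quantum_outputs):
    """Return (inputs, outputs) namespaces keyed by connection attribute name.

    ``connection_names`` maps a connection attribute name to its dataset
    type name; the two quantum mappings go from dataset type name to refs.
    """
    input_refs = SimpleNamespace()
    output_refs = SimpleNamespace()
    for attr_name, dataset_type_name in connection_names.items():
        if dataset_type_name in quantum_inputs:
            setattr(input_refs, attr_name, quantum_inputs[dataset_type_name])
        elif dataset_type_name in quantum_outputs:
            setattr(output_refs, attr_name, quantum_outputs[dataset_type_name])
    return input_refs, output_refs


connection_names = {
    "inputConnection": "ExampleDataset",
    "outputConnection": "Exampleoutput",
}
input_refs, output_refs = build_dataset_refs(
    connection_names,
    quantum_inputs={"ExampleDataset": ["ref-in-1"]},
    quantum_outputs={"Exampleoutput": ["ref-out-1"]},
)
print(input_refs.inputConnection, output_refs.outputConnection)
```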

Definition at line 392 of file connections.py.

Member Data Documentation

◆ allConnections

| lsst.pipe.base.connections.PipelineTaskConnections.allConnections |

Definition at line 370 of file connections.py.

◆ config

| lsst.pipe.base.connections.PipelineTaskConnections.config |

Definition at line 375 of file connections.py.

◆ initInputs

| lsst.pipe.base.connections.PipelineTaskConnections.initInputs |

Definition at line 368 of file connections.py.

◆ initOutputs

| lsst.pipe.base.connections.PipelineTaskConnections.initOutputs |

Definition at line 369 of file connections.py.

◆ inputs

| lsst.pipe.base.connections.PipelineTaskConnections.inputs |

Definition at line 365 of file connections.py.

◆ outputs

| lsst.pipe.base.connections.PipelineTaskConnections.outputs |

Definition at line 367 of file connections.py.

◆ prerequisiteInputs

| lsst.pipe.base.connections.PipelineTaskConnections.prerequisiteInputs |

Definition at line 366 of file connections.py.

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/lsst-scipipe-0.4.3/Linux64/pipe_base/22.0.1+94e66cc9ed/python/lsst/pipe/base/connections.py