Merge measurements from multiple bands.

Public Member Functions | |

| def | getInputSchema (self, butler=None, schema=None) |

| def | getInitOutputDatasets (self) |

| def | adaptArgsAndRun (self, inputData, inputDataIds, outputDataIds, butler) |

| def | __init__ (self, butler=None, schema=None, initInputs=None, **kwargs) |

| Initialize the task. | |

| def | runDataRef (self, patchRefList) |

| Merge coadd sources from multiple bands. | |

| def | run (self, catalogs) |

| Merge measurement catalogs to create a single reference catalog for forced photometry. | |

| def | write (self, patchRef, catalog) |

| Write the output. | |

| def | writeMetadata (self, dataRefList) |

| No metadata to write, and not sure how to write it for a list of dataRefs. | |

Public Attributes | |

| schemaMapper | |

| instFluxKey | |

| instFluxErrKey | |

| fluxFlagKey | |

| flagKeys | |

| schema | |

| pseudoFilterKeys | |

| badFlags | |

Static Public Attributes | |

| ConfigClass = MergeMeasurementsConfig | |

| RunnerClass = MergeSourcesRunner | |

| string | inputDataset = "meas" |

| string | outputDataset = "ref" |

| getSchemaCatalogs = _makeGetSchemaCatalogs("ref") | |

Detailed Description

Merge measurements from multiple bands.

Contents

- Description

- Task initialization

- Invoking the Task

- Configuration parameters

- Debug variables

- A complete example

Description

Command-line task that merges measurements from multiple bands.

Combines consistent (i.e. with the same peaks and footprints) catalogs of sources from multiple filter bands to construct a unified catalog that is suitable for driving forced photometry. Every source is required to have centroid, shape and flux measurements in each band.

- Inputs:

- deepCoadd_meas{tract,patch,filter}: SourceCatalog

- Outputs:

- deepCoadd_ref{tract,patch}: SourceCatalog

- Data Unit:

- tract, patch

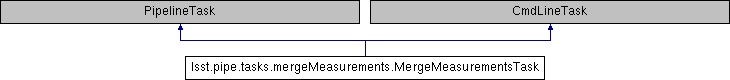

MergeMeasurementsTask subclasses CmdLineTask.

Task initialization

Initialize the task.

- Parameters

- [in] schema: the schema of the detection catalogs used as input to this one

- [in] butler: a butler used to read the input schema from disk, if schema is None

The task will set its own self.schema attribute to the schema of the output merged catalog.

Invoking the Task

Merge measurement catalogs to create a single reference catalog for forced photometry.

- Parameters

- [in] catalogs: the catalogs to be merged

For parent sources, we choose the first band in config.priorityList for which the merge_footprint flag for that band is True.

For child sources, the logic is the same, except that we use the merge_peak flags.
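The band-selection rule above can be sketched in plain Python. This is a minimal illustration, not the task's implementation: records are modeled as dictionaries, the helper name `choose_band` is hypothetical, and the flag key layout (`merge_footprint_<band>`, `merge_peak_<band>`) is an assumption made for the example. Any other criteria the real task applies are ignored here.

```python
def choose_band(flags, priority_list, is_child):
    """Return the first band in priority_list whose merge flag is set.

    flags: dict mapping flag names to booleans (hypothetical stand-in for a
    catalog record). Returns None if no band in the list has its flag set.
    """
    # Parents are selected on merge_footprint flags, children on merge_peak.
    prefix = "merge_peak" if is_child else "merge_footprint"
    for band in priority_list:
        if flags.get(f"{prefix}_{band}", False):
            return band
    return None


# Illustrative values only.
priority_list = ["HSC-I", "HSC-R"]
parent = {"merge_footprint_HSC-I": False, "merge_footprint_HSC-R": True}
child = {"merge_peak_HSC-I": True, "merge_peak_HSC-R": True}

print(choose_band(parent, priority_list, is_child=False))
print(choose_band(child, priority_list, is_child=True))
```

Because the list is scanned in order, a band that appears earlier in config.priorityList wins whenever its flag is set, regardless of how many later bands also qualify.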

Configuration parameters

Debug variables

The command line task interface supports a flag -d to import debug.py from your PYTHONPATH; see Using lsstDebug to control debugging output for more about debug.py files.

MergeMeasurementsTask has no debug variables.

A complete example of using MergeMeasurementsTask

MergeMeasurementsTask is meant to be run after deblending and measuring sources in every band. Its purpose is to generate a catalog of sources suitable for driving forced photometry in coadds and individual exposures. Command-line usage of MergeMeasurementsTask expects a data reference to the coadds to be processed. A list of the available optional arguments can be obtained by calling mergeCoaddMeasurements.py with the --help command line argument.

To demonstrate usage of MergeMeasurementsTask in the larger context of multi-band processing, we will process HSC data in the ci_hsc package. Assuming one has finished step 7 at pipeTasks_multiBand, one may merge the catalogs generated after deblending and measuring as follows:

This will merge the HSC-I and HSC-R band catalogs. The results are written to $CI_HSC_DIR/DATA/deepCoadd-results/.

Definition at line 126 of file mergeMeasurements.py.

Constructor & Destructor Documentation

◆ __init__()

| def | lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.__init__ (self, butler=None, schema=None, initInputs=None, **kwargs) |

Initialize the task.

- Parameters

- [in] schema: the schema of the detection catalogs used as input to this one

- [in] butler: a butler used to read the input schema from disk, if schema is None

The task will set its own self.schema attribute to the schema of the output merged catalog.

Definition at line 225 of file mergeMeasurements.py.

Member Function Documentation

◆ adaptArgsAndRun()

| def | lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.adaptArgsAndRun (self, inputData, inputDataIds, outputDataIds, butler) |

Definition at line 218 of file mergeMeasurements.py.

◆ getInitOutputDatasets()

| def | lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.getInitOutputDatasets (self) |

Definition at line 215 of file mergeMeasurements.py.

◆ getInputSchema()

| def | lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.getInputSchema (self, butler=None, schema=None) |

Definition at line 212 of file mergeMeasurements.py.

◆ run()

| def | lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.run (self, catalogs) |

Merge measurement catalogs to create a single reference catalog for forced photometry.

- Parameters

- [in] catalogs: the catalogs to be merged

For parent sources, we choose the first band in config.priorityList for which the merge_footprint flag for that band is True.

For child sources, the logic is the same, except that we use the merge_peak flags.

Definition at line 282 of file mergeMeasurements.py.

◆ runDataRef()

| def | lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.runDataRef (self, patchRefList) |

Merge coadd sources from multiple bands.

Calls run.

- Parameters

- [in] patchRefList: list of data references for each filter

Definition at line 273 of file mergeMeasurements.py.
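The read, merge, write flow described for runDataRef can be sketched without the LSST stack. Everything below is a hypothetical stand-in: `FakePatchRef` is not the butler data reference API, `run` is a placeholder that only unions source ids, and writing through the first reference in the list is an assumption of this sketch. The dataset names are composed so that they match the deepCoadd_meas input and deepCoadd_ref output listed in the description.

```python
INPUT_DATASET = "meas"   # mirrors the inputDataset class attribute
OUTPUT_DATASET = "ref"   # mirrors the outputDataset class attribute
COADD_NAME = "deep"      # assumed coadd name, giving deepCoadd_* datasets


class FakePatchRef:
    """Hypothetical stand-in for a per-filter data reference."""

    def __init__(self, filter_name, store):
        # Illustrative data ID values only.
        self.dataId = {"tract": 0, "patch": "0,0", "filter": filter_name}
        self._store = store

    def get(self, dataset):
        return self._store[(dataset, self.dataId["filter"])]

    def put(self, obj, dataset):
        # The merged output is per tract and patch, so no filter in the key.
        self._store[(dataset, None)] = obj


def dataset_name(suffix):
    """Compose a dataset name such as deepCoadd_meas or deepCoadd_ref."""
    return f"{COADD_NAME}Coadd_{suffix}"


def run(catalogs):
    """Placeholder merge: the sorted union of source ids across bands."""
    return sorted(set().union(*catalogs.values()))


def run_data_ref(patch_ref_list):
    """Read one catalog per filter, merge them, and write the result."""
    catalogs = {
        ref.dataId["filter"]: ref.get(dataset_name(INPUT_DATASET))
        for ref in patch_ref_list
    }
    merged = run(catalogs)
    # Assumption: any reference for the patch can be used to write.
    patch_ref_list[0].put(merged, dataset_name(OUTPUT_DATASET))
    return merged


store = {
    ("deepCoadd_meas", "HSC-I"): [1, 2, 3],
    ("deepCoadd_meas", "HSC-R"): [2, 3, 4],
}
refs = [FakePatchRef(name, store) for name in ("HSC-I", "HSC-R")]
merged = run_data_ref(refs)
print(merged)
```

The point of the sketch is the shape of the data flow: several per-filter inputs keyed by tract, patch and filter collapse into a single output keyed by tract and patch.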

◆ write()

| def | lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.write (self, patchRef, catalog) |

Write the output.

- Parameters

- [in] patchRef: data reference for the patch

- [in] catalog: the catalog to write

The catalog is written as the dataset named by the 'outputDataset' class variable.

Definition at line 387 of file mergeMeasurements.py.

◆ writeMetadata()

| def | lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.writeMetadata (self, dataRefList) |

No metadata to write, and not sure how to write it for a list of dataRefs.

Definition at line 404 of file mergeMeasurements.py.

Member Data Documentation

◆ badFlags

| lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.badFlags |

Definition at line 266 of file mergeMeasurements.py.

◆ ConfigClass

| lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.ConfigClass = MergeMeasurementsConfig | static |

Definition at line 202 of file mergeMeasurements.py.

◆ flagKeys

| lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.flagKeys |

Definition at line 246 of file mergeMeasurements.py.

◆ fluxFlagKey

| lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.fluxFlagKey |

Definition at line 244 of file mergeMeasurements.py.

◆ getSchemaCatalogs

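| lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.getSchemaCatalogs = _makeGetSchemaCatalogs("ref") | static |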

Definition at line 206 of file mergeMeasurements.py.

◆ inputDataset

| string lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.inputDataset = "meas" | static |

Definition at line 204 of file mergeMeasurements.py.

◆ instFluxErrKey

| lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.instFluxErrKey |

Definition at line 243 of file mergeMeasurements.py.

◆ instFluxKey

| lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.instFluxKey |

Definition at line 242 of file mergeMeasurements.py.

◆ outputDataset

| string lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.outputDataset = "ref" | static |

Definition at line 205 of file mergeMeasurements.py.

◆ pseudoFilterKeys

| lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.pseudoFilterKeys |

Definition at line 259 of file mergeMeasurements.py.

◆ RunnerClass

| lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.RunnerClass = MergeSourcesRunner | static |

Definition at line 203 of file mergeMeasurements.py.

◆ schema

| lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.schema |

Definition at line 257 of file mergeMeasurements.py.

◆ schemaMapper

| lsst.pipe.tasks.mergeMeasurements.MergeMeasurementsTask.schemaMapper |

Definition at line 240 of file mergeMeasurements.py.

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/Linux64/pipe_tasks/18.1.0-11-gb2589d7b/python/lsst/pipe/tasks/mergeMeasurements.py

Generated on Fri Aug 30 2019 07:31:07 for LSSTApplications by Doxygen 1.8.13