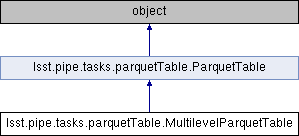

Inheritance diagram for lsst.pipe.tasks.parquetTable.MultilevelParquetTable:

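Public Member Functions

| Type | Member | Defined in |
|---|---|---|
| def | `__init__ (self, *args, **kwargs)` | MultilevelParquetTable |
| def | `columnLevelNames (self)` | MultilevelParquetTable |
| def | `columnLevels (self)` | MultilevelParquetTable |
| def | `toDataFrame (self, columns=None, droplevels=True)` | MultilevelParquetTable |
| def | `write (self, filename)` | ParquetTable (inherited) |
| def | `pandasMd (self)` | ParquetTable (inherited) |
| def | `columnIndex (self)` | ParquetTable (inherited) |
| def | `columns (self)` | ParquetTable (inherited) |
| def | `toDataFrame (self, columns=None)` | ParquetTable (inherited) |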

Public Attributes

| Name | Defined in |
|---|---|
| `filename` | ParquetTable (inherited) |

Detailed Description

Wrapper to access a dataframe with a multi-level column index from Parquet.

This subclass of `ParquetTable` is needed to handle multi-level column indices because pyarrow offers no convenient way to request specific table subsets by level from a Parquet file, as one can with a `pandas.DataFrame`.

Additionally, pyarrow stores multi-level index information in an unusual way. Pandas represents each column key as a tuple, so that one can access a single column of a pandas dataframe as `df[('ref', 'HSC-G', 'coord_ra')]`. Pyarrow, however, saves these keys as "stringified" tuples, so to read the same column from a table written to Parquet you have to do the following:

    import pyarrow.parquet as pq

    pf = pq.ParquetFile(filename)
    # read() returns a pyarrow.Table; call table.to_pandas() for a DataFrame
    table = pf.read(columns=["('ref', 'HSC-G', 'coord_ra')"])

See also https://github.com/apache/arrow/issues/1771, where we've raised this issue.
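Because the stored names appear to be Python's text form of the tuple, as in the `('ref', 'HSC-G', 'coord_ra')` example above, tuple keys can be turned into names suitable for the `columns` argument of `pyarrow.parquet.ParquetFile.read` with `str`. This is a minimal sketch under that assumption; `to_parquet_names` is a hypothetical helper, not part of this module, and other pyarrow versions may store names differently.

```python
def to_parquet_names(keys):
    """Convert pandas-style tuple column keys to stringified-tuple names.

    Assumption: pyarrow stores each multi-level column name as str(tuple),
    e.g. "('ref', 'HSC-G', 'coord_ra')", matching the example above.
    """
    return [str(tuple(key)) for key in keys]


names = to_parquet_names([('ref', 'HSC-G', 'coord_ra'),
                          ('ref', 'HSC-G', 'coord_dec')])
print(names)
```

The resulting list could then be passed as `pf.read(columns=names)` so that only those columns are read.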

Because multi-level-indexed dataframes are a convenient way to store, for example, data from multiple filters in the same table, this case deserves a wrapper that makes access easier; that is what this class provides. For example,

    parq = MultilevelParquetTable(filename)
    columnDict = {'dataset': 'meas',
                  'filter': 'HSC-G',
                  'column': ['coord_ra', 'coord_dec']}
    df = parq.toDataFrame(columns=columnDict)

will return just the coordinate columns. This is equivalent to calling `df['meas']['HSC-G'][['coord_ra', 'coord_dec']]` on the full dataframe, but without loading the whole frame into memory: only those columns are read from disk. You can also request a sub-table, e.g.,

    parq = MultilevelParquetTable(filename)
    columnDict = {'dataset': 'meas',
                  'filter': 'HSC-G'}
    df = parq.toDataFrame(columns=columnDict)

which is equivalent to `df['meas']['HSC-G']` on the full dataframe.
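The dictionary form behaves like a filter over the full list of column tuples: a level named in the dictionary must match one of the requested entries, and an omitted level matches everything. The sketch below illustrates that selection rule in plain Python; `select_columns` and the sample column list are hypothetical, and the sketch does not claim to reproduce how `MultilevelParquetTable` implements the lookup.

```python
def select_columns(all_columns, level_names, column_dict):
    """Return the column tuples that match a {level name: entries} dict.

    all_columns : list of tuples, one value per level
    level_names : names of the levels, in the same order
    column_dict : maps a level name to one entry or a list of entries;
                  levels left out of the dict match every entry
    """
    unknown = set(column_dict) - set(level_names)
    if unknown:
        raise ValueError(f"unknown level names: {sorted(unknown)}")
    # Normalize each requested level to a set of allowed entries.
    allowed = {}
    for level, entries in column_dict.items():
        if isinstance(entries, str):
            entries = [entries]
        allowed[level] = set(entries)
    return [
        col for col in all_columns
        if all(col[i] in allowed[level]
               for i, level in enumerate(level_names) if level in allowed)
    ]


levels = ['dataset', 'filter', 'column']
cols = [('meas', 'HSC-G', 'coord_ra'),
        ('meas', 'HSC-G', 'coord_dec'),
        ('meas', 'HSC-G', 'flux'),
        ('meas', 'HSC-R', 'coord_ra'),
        ('ref', 'HSC-G', 'coord_ra')]

# Specific columns in specified sub-levels
print(select_columns(cols, levels, {'dataset': 'meas', 'filter': 'HSC-G',
                                    'column': ['coord_ra', 'coord_dec']}))
# An entire sub-table: the 'column' level is left out
print(select_columns(cols, levels, {'dataset': 'meas', 'filter': 'HSC-G'}))
```

Assuming the stringified form described above, converting each selected tuple with `str(tuple(col))` would give the names to read from disk.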

Parameters
----------
filename : str, optional
    Path to Parquet file.
dataFrame : pandas.DataFrame, optional
    DataFrame to wrap instead of a Parquet file.

Definition at line 148 of file parquetTable.py.

Constructor & Destructor Documentation

◆ __init__()

`def lsst.pipe.tasks.parquetTable.MultilevelParquetTable.__init__(self, *args, **kwargs)`

Definition at line 198 of file parquetTable.py.

Member Function Documentation

◆ columnIndex()

(inherited from `ParquetTable`)

Columns as a pandas Index.

Definition at line 91 of file parquetTable.py.

◆ columnLevelNames()

`def lsst.pipe.tasks.parquetTable.MultilevelParquetTable.columnLevelNames(self)`

Definition at line 204 of file parquetTable.py.

◆ columnLevels()

`def lsst.pipe.tasks.parquetTable.MultilevelParquetTable.columnLevels(self)`

Names of levels in column index.

Definition at line 213 of file parquetTable.py.
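`columnLevels` gives only the names of the levels (for example `dataset`, `filter` and `column` in the examples above). To choose entries for a `columns` dictionary it also helps to know which values each level contains. A minimal sketch, assuming the columns are available as a list of tuples; `level_values` is hypothetical, and since this page gives no description of `columnLevelNames`, the sketch should not be taken as its implementation.

```python
def level_values(all_columns, level_names):
    """Map each level name to its distinct entries, in first-seen order."""
    result = {name: [] for name in level_names}
    for col in all_columns:
        for name, value in zip(level_names, col):
            if value not in result[name]:
                result[name].append(value)
    return result


cols = [('meas', 'HSC-G', 'coord_ra'),
        ('meas', 'HSC-G', 'coord_dec'),
        ('meas', 'HSC-R', 'coord_ra'),
        ('ref', 'HSC-G', 'coord_ra')]
print(level_values(cols, ['dataset', 'filter', 'column']))
```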

◆ columns()

(inherited from `ParquetTable`)

List of column names (or column index if `df` is set).

This may be either a list of column names or a `pandas.Index` object describing the column index, depending on whether the `ParquetTable` object wraps a `ParquetFile` or a `DataFrame`.

Definition at line 105 of file parquetTable.py.
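When a `ParquetTable` wraps a file rather than a DataFrame, the names available from the Parquet schema are the stringified tuples described in the class documentation. A minimal sketch of recovering the tuples from such names, assuming they parse as Python tuple literals; `parse_column_names` is hypothetical, the `'__index_level_0__'` entry is an illustrative non-tuple name, and using `ast.literal_eval` is a choice made here rather than the module's documented approach.

```python
import ast


def parse_column_names(names):
    """Recover multi-level column tuples from stringified Parquet names.

    Names that do not evaluate to a tuple literal (for example, a
    serialized index column) are skipped.
    """
    columns = []
    for name in names:
        try:
            value = ast.literal_eval(name)
        except (ValueError, SyntaxError):
            continue  # not a Python literal, so not a stringified tuple
        if isinstance(value, tuple):
            columns.append(value)
    return columns


names = ["('meas', 'HSC-G', 'coord_ra')",
         "('meas', 'HSC-G', 'coord_dec')",
         '__index_level_0__']
print(parse_column_names(names))
```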

◆ pandasMd()

(inherited from `ParquetTable`)

Definition at line 83 of file parquetTable.py.

◆ toDataFrame() [1/2]

(inherited from `ParquetTable`)

Get table (or specified columns) as a pandas DataFrame.

Parameters
----------
columns : list, optional
    Desired columns. If `None`, then all columns will be returned.

Definition at line 126 of file parquetTable.py.


◆ toDataFrame() [2/2]

`def lsst.pipe.tasks.parquetTable.MultilevelParquetTable.toDataFrame(self, columns=None, droplevels=True)`

Get table (or specified columns) as a pandas DataFrame.

To get specific columns in specified sub-levels:

    parq = MultilevelParquetTable(filename)
    columnDict = {'dataset': 'meas',
                  'filter': 'HSC-G',
                  'column': ['coord_ra', 'coord_dec']}
    df = parq.toDataFrame(columns=columnDict)

Or, to get an entire subtable, leave out one level name:

    parq = MultilevelParquetTable(filename)
    columnDict = {'dataset': 'meas',
                  'filter': 'HSC-G'}
    df = parq.toDataFrame(columns=columnDict)

Parameters
----------
columns : list or dict, optional
    Desired columns. If `None`, then all columns will be returned. If a
    list, then the names of the columns must be *exactly* as stored by
    pyarrow; that is, stringified tuples. If a dictionary, then its keys
    must correspond to the level names of the column multi-index (that is,
    the `columnLevels` attribute). Not every level must be passed; if a
    level is left out, all entries in that level are implicitly included.
droplevels : bool, optional
    If `True` (the default), drop levels of the column index that have
    just one entry.

Definition at line 235 of file parquetTable.py.
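The `droplevels` option removes any level of the column index that has only one entry among the selected columns; for instance, selecting `{'dataset': 'meas', 'filter': 'HSC-G'}` leaves the `dataset` and `filter` levels with a single value each. The sketch below applies that rule to a list of column tuples; `drop_single_valued_levels` is hypothetical, and keeping the last level when every level would be dropped is a design choice of this sketch, not documented behavior. On a real pandas column index, `MultiIndex.droplevel` performs the corresponding operation.

```python
def drop_single_valued_levels(columns, level_names):
    """Remove index levels that hold a single entry across all columns.

    columns     : list of tuples, one value per level
    level_names : names of the levels, in the same order
    Returns (new_columns, remaining_level_names).
    """
    keep = [
        i for i in range(len(level_names))
        if len({col[i] for col in columns}) > 1
    ]
    if not keep and level_names:
        # Design choice for this sketch: never drop every level, so that a
        # single selected column still has a name.
        keep = [len(level_names) - 1]
    new_columns = [tuple(col[i] for i in keep) for col in columns]
    return new_columns, [level_names[i] for i in keep]


subtable = [('meas', 'HSC-G', 'coord_ra'),
            ('meas', 'HSC-G', 'coord_dec'),
            ('meas', 'HSC-G', 'flux')]
print(drop_single_valued_levels(subtable, ['dataset', 'filter', 'column']))
```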


◆ write()

(inherited from `ParquetTable`)

Write pandas dataframe to Parquet.

Parameters
----------
filename : str
    Path to which to write.

Definition at line 69 of file parquetTable.py.


Member Data Documentation

◆ filename

(inherited from `ParquetTable`)

Definition at line 55 of file parquetTable.py.

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/lsst-scipipe-0.7.0/Linux64/pipe_tasks/21.0.0-172-gfb10e10a+18fedfabac/python/lsst/pipe/tasks/parquetTable.py