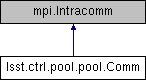

Inheritance diagram for lsst.ctrl.pool.pool.Comm:

Public Member Functions

| def | __new__ (cls, comm=mpi.COMM_WORLD, recvSleep=0.1, barrierSleep=0.1) |
| | Construct an MPI.Comm wrapper. |
| def | recv (self, obj=None, source=0, tag=0, status=None) |
| def | send (self, obj=None, *args, **kwargs) |
| def | Barrier (self, tag=0) |
| def | broadcast (self, value, root=0) |
| def | scatter (self, dataList, root=0, tag=0) |
| def | Free (self) |

Detailed Description

Wrapper around mpi4py's MPI.Intracomm class that avoids busy-waiting, as suggested by Lisandro Dalcin at:

* http://code.google.com/p/mpi4py/issues/detail?id=4
* https://groups.google.com/forum/?fromgroups=#!topic/mpi4py/nArVuMXyyZI
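The idea of avoiding busy-waiting can be sketched as polling for a message with a non-blocking probe and sleeping between polls, so a waiting process does not spin at full CPU. The following is a minimal illustration, not the code in pool.py: `recv_with_sleep` and `FakeComm` are hypothetical names, and `FakeComm` is an in-memory stand-in that lets the sketch run without an MPI launcher. With mpi4py, a real communicator could be passed instead, since `Iprobe` and `recv` accept the `source` and `tag` keywords used here.

```python
import time
from collections import deque


def recv_with_sleep(comm, source=0, tag=0, sleep_seconds=0.1):
    """Receive one message, sleeping between probes instead of spinning."""
    # Iprobe returns immediately: True once a matching message is waiting.
    while not comm.Iprobe(source=source, tag=tag):
        time.sleep(sleep_seconds)  # give up the CPU between polls
    # A message is waiting, so this blocking receive returns promptly.
    return comm.recv(source=source, tag=tag)


class FakeComm:
    """Hypothetical stand-in for a communicator (illustration only)."""

    def __init__(self, messages, ready_after):
        self._messages = deque(messages)
        self._ready_after = ready_after  # probes that report "nothing yet"
        self.probes = 0

    def Iprobe(self, source=0, tag=0):
        self.probes += 1
        return self.probes > self._ready_after

    def recv(self, source=0, tag=0):
        return self._messages.popleft()


fake = FakeComm(["hello"], ready_after=2)
result = recv_with_sleep(fake, sleep_seconds=0.01)
```

The sleep interval plays the role that `recvSleep` appears to play in the constructor signature: a longer interval lowers CPU use while waiting but adds latency to each receive.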

Member Function Documentation

◆ __new__()

| def lsst.ctrl.pool.pool.Comm.__new__ | ( | cls, comm = mpi.COMM_WORLD, recvSleep = 0.1, barrierSleep = 0.1 | ) |

◆ Barrier()

| def lsst.ctrl.pool.pool.Comm.Barrier | ( | self, tag = 0 | ) |

◆ broadcast()

| def lsst.ctrl.pool.pool.Comm.broadcast | ( | self, value, root = 0 | ) |

◆ Free()

| def lsst.ctrl.pool.pool.Comm.Free | ( | self | ) |

◆ recv()

| def lsst.ctrl.pool.pool.Comm.recv | ( | self, obj = None, source = 0, tag = 0, status = None | ) |

◆ scatter()

| def lsst.ctrl.pool.pool.Comm.scatter | ( | self, dataList, root = 0, tag = 0 | ) |

Scatter data across the nodes.

The default version apparently pickles the entire 'dataList', which can cause errors if the pickle size grows over 2^31 bytes due to fundamental problems with pickle in Python 2. Instead, we send the data to each slave node in turn; this reduces the pickle size.

@param dataList List of data to distribute; one per node (including root)

@param root Index of root node

@param tag Message tag (integer)

@return Data for this node

Definition at line 306 of file pool.py.
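The per-node approach described above can be sketched as follows: the root sends each non-root rank its own item in a separate message and keeps its own entry, so no single pickle has to hold the whole list. This is an illustration under stated assumptions rather than the code in pool.py: `scatter_one_by_one` and `FakeComm` are hypothetical names, and `FakeComm` simulates ranks with shared in-memory mailboxes so the sketch runs in one process. It assumes a communicator exposing `rank`, `send(obj, dest, tag)` and `recv(source, tag)`, as mpi4py communicators do.

```python
from collections import defaultdict, deque


def scatter_one_by_one(comm, data_list, root=0, tag=0):
    """Distribute data_list[i] to rank i using one message per rank."""
    if comm.rank == root:
        for rank, item in enumerate(data_list):
            if rank != root:
                # Each item is pickled and sent on its own.
                comm.send(item, dest=rank, tag=tag)
        return data_list[root]  # the root keeps its own entry
    # Non-root ranks wait for their single item from the root.
    return comm.recv(source=root, tag=tag)


class FakeComm:
    """Hypothetical single-process stand-in for one rank (illustration only)."""

    def __init__(self, rank, size, mailboxes):
        self.rank = rank
        self.size = size
        self._mailboxes = mailboxes  # shared: (dest, tag) -> queued objects

    def send(self, obj, dest, tag=0):
        self._mailboxes[(dest, tag)].append(obj)

    def recv(self, source=0, tag=0):
        return self._mailboxes[(self.rank, tag)].popleft()


mailboxes = defaultdict(deque)
comms = [FakeComm(rank, 3, mailboxes) for rank in range(3)]
data = ["a", "b", "c"]
# The root (rank 0) runs first so its messages are queued before the others receive.
results = [scatter_one_by_one(c, data if c.rank == 0 else None) for c in comms]
```

Only the root needs a real list; the other ranks can pass any placeholder because they never read `data_list`.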

◆ send()

| def lsst.ctrl.pool.pool.Comm.send | ( | self, obj = None, * args, ** kwargs | ) |

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/lsst-scipipe-0.7.0/Linux64/ctrl_pool/22.0.1-2-g92698f7+dcf3732eb2/python/lsst/ctrl/pool/pool.py