Public Member Functions

| def | __init__ (self, *args, **kwargs) |

| def | __del__ (self) |

| def | log (self, msg, *args) |

| def | command (self, cmd) |

| def | map (self, context, func, dataList, *args, **kwargs) |

| Scatter work to slaves and gather the results. |

| def | reduce (self, context, reducer, func, dataList, *args, **kwargs) |

| Scatter work to slaves and reduce the results. |

| def | mapNoBalance (self, context, func, dataList, *args, **kwargs) |

| Scatter work to slaves and gather the results. |

| def | reduceNoBalance (self, context, reducer, func, dataList, *args, **kwargs) |

| Scatter work to slaves and reduce the results. |

| def | mapToPrevious (self, context, func, dataList, *args, **kwargs) |

| Scatter work to the same target as before. |

| def | reduceToPrevious (self, context, reducer, func, dataList, *args, **kwargs) |

| Reduction where work goes to the same target as before. |

| def | storeSet (self, context, **kwargs) |

| Store data on slave for a particular context. |

| def | storeDel (self, context, *nameList) |

| def | storeClear (self, context) |

| def | cacheClear (self, context) |

| def | cacheList (self, context) |

| def | storeList (self, context) |

| def | exit (self) |

| def | isMaster (self) |

| def | __call__ (cls, *args, **kwargs) |

Public Attributes

| root |

| size |

| comm |

| rank |

| debugger |

| node |

Detailed Description

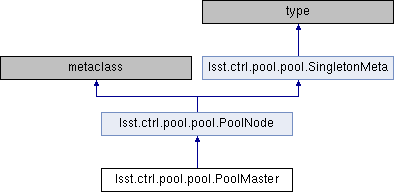

Master node instance of the MPI process pool. Only the master node should instantiate this.

WARNING: Do not let a pool instance hang around at program termination. Garbage collection behaves differently at that point and may cause a segmentation fault (signal 11).

Constructor & Destructor Documentation

◆ __init__()

| def lsst.ctrl.pool.pool.PoolMaster.__init__ | (self, *args, **kwargs) |

◆ __del__()

| def lsst.ctrl.pool.pool.PoolMaster.__del__ | (self) |

Member Function Documentation

◆ __call__()

| inherited |

◆ cacheClear()

| def lsst.ctrl.pool.pool.PoolMaster.cacheClear | (self, context) |

Reset cache for a particular context on master and slaves

Reimplemented from lsst.ctrl.pool.pool.PoolNode.

◆ cacheList()

| def lsst.ctrl.pool.pool.PoolMaster.cacheList | (self, context) |

List cache contents for a particular context on master and slaves

Reimplemented from lsst.ctrl.pool.pool.PoolNode.

◆ command()

| def lsst.ctrl.pool.pool.PoolMaster.command | (self, cmd) |

Send command to slaves.

A command is the name of the PoolSlave method they should run.
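The idea of a command being a method name can be illustrated with a minimal sketch. `ToySlave` and `run_command` are hypothetical stand-ins invented for this example, not part of lsst.ctrl.pool, and no MPI is involved; the sketch only shows a string being resolved to a method and called.

```python
class ToySlave:
    """Hypothetical stand-in for a slave node; not the real PoolSlave."""

    def __init__(self):
        self.history = []

    def storeClear(self):
        # Record the call so the effect of the dispatch is observable.
        self.history.append("storeClear")

    def exit(self):
        self.history.append("exit")


def run_command(slave, cmd):
    """Look up the method named by cmd on the slave and call it."""
    method = getattr(slave, cmd)
    return method()


slave = ToySlave()
for cmd in ["storeClear", "exit"]:
    run_command(slave, cmd)
```

A name that does not match any method raises `AttributeError` in this sketch; how the real pool handles that case is not described here.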

◆ exit()

| def lsst.ctrl.pool.pool.PoolMaster.exit | (self) |

◆ isMaster()

| inherited |

◆ log()

| def lsst.ctrl.pool.pool.PoolMaster.log | (self, msg, *args) |

Log a debugging message

Reimplemented from lsst.ctrl.pool.pool.PoolNode.

Definition at line 644 of file pool.py.

◆ map()

| def lsst.ctrl.pool.pool.PoolMaster.map | (self, context, func, dataList, *args, **kwargs) |

Scatter work to slaves and gather the results.

Work is distributed dynamically, so that slaves that finish quickly will receive more work. Each slave applies the function to the data it is provided. The slaves may optionally be passed a cache instance, which they can use to store data for subsequent executions (to ensure subsequent data is distributed in the same pattern as before, use the 'mapToPrevious' method). The cache also contains data that has been stored on the slaves.

The 'func' signature should be func(cache, data, *args, **kwargs) if 'context' is non-None; otherwise func(data, *args, **kwargs).

@param context: Namespace for cache

@param func: function for slaves to run; must be picklable

@param dataList: List of data to distribute to slaves; must be picklable

@param args: List of constant arguments

@param kwargs: Dict of constant arguments

@return list of results from applying 'func' to dataList

Definition at line 656 of file pool.py.
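The two 'func' calling conventions can be shown with a small single-process sketch. `toy_map` is a hypothetical stand-in written for this example, not the library's implementation: it runs sequentially, uses plain dicts as caches, and only mirrors the rule that 'func' receives a cache as its first argument when 'context' is non-None.

```python
def toy_map(context, func, dataList, *args, **kwargs):
    """Sequential illustration of the calling convention only (no MPI)."""
    caches = {}  # hypothetical: one plain-dict cache per data index
    results = []
    for index, data in enumerate(dataList):
        if context is not None:
            cache = caches.setdefault(index, {})
            results.append(func(cache, data, *args, **kwargs))
        else:
            results.append(func(data, *args, **kwargs))
    return results


def scale(data, factor, offset=0):
    # Signature used when context is None: no cache argument.
    return data * factor + offset


def scale_and_remember(cache, data, factor, offset=0):
    # Signature used when context is non-None: the cache comes first.
    cache["input"] = data
    return data * factor + offset


no_cache = toy_map(None, scale, [1, 2, 3], 10, offset=1)
with_cache = toy_map("demo", scale_and_remember, [1, 2, 3], 10, offset=1)
```

In both calls the constant arguments (`10` and `offset=1`) are passed unchanged to every invocation of 'func'.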

◆ mapNoBalance()

| def lsst.ctrl.pool.pool.PoolMaster.mapNoBalance | (self, context, func, dataList, *args, **kwargs) |

Scatter work to slaves and gather the results.

Work is distributed statically, so there is no load balancing. Each slave applies the function to the data it is provided. The slaves may optionally be passed a cache instance, which they can store data in for subsequent executions (to ensure subsequent data is distributed in the same pattern as before, use the 'mapToPrevious' method). The cache also contains data that has been stored on the slaves.

The 'func' signature should be func(cache, data, *args, **kwargs) if 'context' is true; otherwise func(data, *args, **kwargs).

@param context: Namespace for cache

@param func: function for slaves to run; must be picklable

@param dataList: List of data to distribute to slaves; must be picklable

@param args: List of constant arguments

@param kwargs: Dict of constant arguments

@return list of results from applying 'func' to dataList

Definition at line 763 of file pool.py.
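The practical difference between static distribution and the dynamic distribution described for 'map' can be sketched with a toy scheduling model. Everything here is an assumption made for illustration: the round-robin split, the made-up per-item costs, and the helper names are not taken from pool.py, which does not state how items are partitioned.

```python
import heapq


def static_assignment(num_items, num_workers):
    """Fix the item-to-worker mapping up front (round-robin is an assumption)."""
    return {w: list(range(w, num_items, num_workers)) for w in range(num_workers)}


def dynamic_assignment(costs, num_workers):
    """Give each item, in order, to whichever worker becomes free first."""
    free_at = [(0, w) for w in range(num_workers)]
    heapq.heapify(free_at)
    assigned = {w: [] for w in range(num_workers)}
    for index, cost in enumerate(costs):
        time, worker = heapq.heappop(free_at)
        assigned[worker].append(index)
        heapq.heappush(free_at, (time + cost, worker))
    return assigned


def makespan(assigned, costs):
    """Time at which the slowest worker finishes."""
    return max(sum(costs[i] for i in items) for items in assigned.values())


costs = [8, 1, 1, 1, 1, 1, 1, 1]  # made-up per-item run times
static = static_assignment(len(costs), 2)
dynamic = dynamic_assignment(costs, 2)
```

With one slow item, the static split leaves one worker with most of the total run time, while the dynamic split lets the other worker absorb the remaining short items. When every item costs about the same, the two approaches give similar finishing times and the static one avoids the request traffic.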

◆ mapToPrevious()

| def lsst.ctrl.pool.pool.PoolMaster.mapToPrevious | (self, context, func, dataList, *args, **kwargs) |

Scatter work to the same target as before.

Work is distributed so that each slave handles the same indices in the dataList as when 'map' was called. This allows the right data to go to the right cache.

It is assumed that the dataList is the same length as when it was passed to 'map'.

The 'func' signature should be func(cache, data, *args, **kwargs).

@param context: Namespace for cache

@param func: function for slaves to run; must be picklable

@param dataList: List of data to distribute to slaves; must be picklable

@param args: List of constant arguments

@param kwargs: Dict of constant arguments

@return list of results from applying 'func' to dataList

Definition at line 885 of file pool.py.
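Why the index-to-slave mapping matters can be seen in a small single-process sketch. `ToyPool` is a hypothetical stand-in written for this example, not the real PoolMaster: it remembers which worker handled each index during `map`, keeps one plain-dict cache per worker and index, and reuses both in `mapToPrevious`. The modulo assignment is an arbitrary choice for the sketch.

```python
class ToyPool:
    """Hypothetical single-process stand-in; not the real PoolMaster."""

    def __init__(self, num_workers):
        self.num_workers = num_workers
        self.assignment = {}  # data index -> worker that handled it
        # caches[worker][index] is that worker's cache for one data item
        self.caches = [dict() for _ in range(num_workers)]

    def map(self, func, dataList):
        results = []
        for index, data in enumerate(dataList):
            worker = index % self.num_workers  # arbitrary choice for the sketch
            self.assignment[index] = worker
            cache = self.caches[worker].setdefault(index, {})
            results.append(func(cache, data))
        return results

    def mapToPrevious(self, func, dataList):
        results = []
        for index, data in enumerate(dataList):
            worker = self.assignment[index]  # reuse the earlier target
            cache = self.caches[worker][index]
            results.append(func(cache, data))
        return results


def load(cache, data):
    cache["loaded"] = data.upper()  # pretend this is expensive to compute
    return len(data)


def reuse(cache, data):
    # Relies on the value stored by load() for the same index.
    return cache["loaded"] + data


pool = ToyPool(num_workers=2)
first = pool.map(load, ["ab", "cde", "f"])
second = pool.mapToPrevious(reuse, ["-1", "-2", "-3"])
```

In this sketch a second list of a different length would either leave caches unused or hit an index with no recorded worker, which is one way to read the same-length assumption stated above.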

◆ reduce()

| def lsst.ctrl.pool.pool.PoolMaster.reduce | (self, context, reducer, func, dataList, *args, **kwargs) |

Scatter work to slaves and reduce the results.

Work is distributed dynamically, so that slaves that finish quickly will receive more work. Each slave applies the function to the data it is provided. The slaves may optionally be passed a cache instance, which they can use to store data for subsequent executions (to ensure subsequent data is distributed in the same pattern as before, use the 'mapToPrevious' method). The cache also contains data that has been stored on the slaves.

The 'func' signature should be func(cache, data, *args, **kwargs) if 'context' is non-None; otherwise func(data, *args, **kwargs).

The 'reducer' signature should be reducer(old, new). If the 'reducer' is None, then we will return the full list of results.

@param context: Namespace for cache

@param reducer: function for master to run to reduce slave results; or None

@param func: function for slaves to run; must be picklable

@param dataList: List of data to distribute to slaves; must be picklable

@param args: List of constant arguments

@param kwargs: Dict of constant arguments

@return reduced result (if reducer is non-None) or list of results from applying 'func' to dataList

Definition at line 683 of file pool.py.
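The reducer(old, new) convention can be illustrated with a sequential sketch. `toy_reduce` is a hypothetical helper written for this example, not the library's code: it omits the context and cache, folds results in list order, and returns None for an empty list, all of which are assumptions of the sketch.

```python
import operator


def toy_reduce(reducer, func, dataList, *args, **kwargs):
    """Apply func to every item, then fold the results with reducer(old, new)."""
    results = [func(data, *args, **kwargs) for data in dataList]
    if reducer is None:
        # No reducer: behave like a plain map and return every result.
        return results
    if not results:
        return None  # assumption of this sketch for empty input
    output = results[0]
    for new in results[1:]:
        output = reducer(output, new)
    return output


def square(data, offset=0):
    return data * data + offset


total = toy_reduce(operator.add, square, [1, 2, 3], offset=1)
as_list = toy_reduce(None, square, [1, 2, 3], offset=1)
```

The order in which results reach the reducer in a real pool is not stated here, so a reducer that does not depend on order (such as addition) is the safer assumption.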

◆ reduceNoBalance()

| def lsst.ctrl.pool.pool.PoolMaster.reduceNoBalance | (self, context, reducer, func, dataList, *args, **kwargs) |

Scatter work to slaves and reduce the results.

Work is distributed statically, so there is no load balancing. Each slave applies the function to the data it is provided. The slaves may optionally be passed a cache instance, which they can store data in for subsequent executions (to ensure subsequent data is distributed in the same pattern as before, use the 'mapToPrevious' method). The cache also contains data that has been stored on the slaves.

The 'func' signature should be func(cache, data, *args, **kwargs) if 'context' is true; otherwise func(data, *args, **kwargs).

The 'reducer' signature should be reducer(old, new). If the 'reducer' is None, then we will return the full list of results.

@param context: Namespace for cache

@param reducer: function for master to run to reduce slave results; or None

@param func: function for slaves to run; must be picklable

@param dataList: List of data to distribute to slaves; must be picklable

@param args: List of constant arguments

@param kwargs: Dict of constant arguments

@return reduced result (if reducer is non-None) or list of results from applying 'func' to dataList

Definition at line 789 of file pool.py.

◆ reduceToPrevious()

| def lsst.ctrl.pool.pool.PoolMaster.reduceToPrevious | (self, context, reducer, func, dataList, *args, **kwargs) |

Reduction where work goes to the same target as before.

Work is distributed so that each slave handles the same indices in the dataList as when 'map' was called. This allows the right data to go to the right cache.

It is assumed that the dataList is the same length as when it was passed to 'map'.

The 'func' signature should be func(cache, data, *args, **kwargs).

The 'reducer' signature should be reducer(old, new). If the 'reducer' is None, then we will return the full list of results.

@param context: Namespace for cache

@param reducer: function for master to run to reduce slave results; or None

@param func: function for slaves to run; must be picklable

@param dataList: List of data to distribute to slaves; must be picklable

@param args: List of constant arguments

@param kwargs: Dict of constant arguments

@return reduced result (if reducer is non-None) or list of results from applying 'func' to dataList

Definition at line 908 of file pool.py.

◆ storeClear()

| def lsst.ctrl.pool.pool.PoolMaster.storeClear | (self, context) |

Reset data store for a particular context on master and slaves

Reimplemented from lsst.ctrl.pool.pool.PoolNode.

◆ storeDel()

| def lsst.ctrl.pool.pool.PoolMaster.storeDel | (self, context, *nameList) |

Delete stored data on slave for a particular context

Reimplemented from lsst.ctrl.pool.pool.PoolNode.

Definition at line 1007 of file pool.py.

◆ storeList()

| def lsst.ctrl.pool.pool.PoolMaster.storeList | (self, context) |

List store contents for a particular context on master and slaves

Reimplemented from lsst.ctrl.pool.pool.PoolNode.

◆ storeSet()

| def lsst.ctrl.pool.pool.PoolMaster.storeSet | (self, context, **kwargs) |

Store data on slave for a particular context.

The data is made available to functions through the cache. The stored data differs from the cache in that it is identical for all operations, whereas the cache is specific to the data being operated upon.

@param context: namespace for store

@param kwargs: dict of name=value pairs

Reimplemented from lsst.ctrl.pool.pool.PoolNode.

Definition at line 989 of file pool.py.
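The distinction between stored data and cached data can be sketched in a single process. `toy_map_with_store` and the `gain` value are hypothetical names invented for this example, and `SimpleNamespace` stands in for the real cache object; the sketch assumes only what is stated above, namely that stored values reach 'func' through the cache and are the same for every data item.

```python
from types import SimpleNamespace


def toy_map_with_store(store, func, dataList):
    """Give func one cache per data item, each pre-filled with the shared store."""
    caches = []
    results = []
    for data in dataList:
        cache = SimpleNamespace(**store)  # shared values, same for every item
        results.append(func(cache, data))
        caches.append(cache)
    return results, caches


def calibrate(cache, data):
    cache.raw = data  # per-item value, differs between caches
    return data * cache.gain  # shared value supplied through the store


store = {"gain": 2.5}  # hypothetical name=value pair, as passed via **kwargs
results, caches = toy_map_with_store(store, calibrate, [2, 4])
```

After the call, every cache carries the same `gain` while `raw` differs per item, which mirrors the store-versus-cache split described above.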

Member Data Documentation

◆ comm

◆ debugger

◆ node

◆ rank

◆ root

◆ size

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/lsst-scipipe-0.7.0/Linux64/ctrl_pool/22.0.1-2-g92698f7+dcf3732eb2/python/lsst/ctrl/pool/pool.py