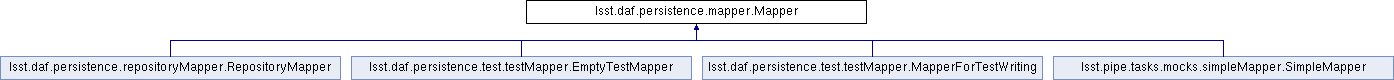

Inheritance diagram for lsst.daf.persistence.mapper.Mapper:

Public Member Functions

| def | __new__ (cls, *args, **kwargs) |

| def | __init__ (self, **kwargs) |

| def | __getstate__ (self) |

| def | __setstate__ (self, state) |

| def | keys (self) |

| def | queryMetadata (self, datasetType, format, dataId) |

| def | getDatasetTypes (self) |

| def | map (self, datasetType, dataId, write=False) |

| def | canStandardize (self, datasetType) |

| def | standardize (self, datasetType, item, dataId) |

| def | validate (self, dataId) |

| def | backup (self, datasetType, dataId) |

| def | getRegistry (self) |

Static Public Member Functions

| def | Mapper (cfg) |

Detailed Description

Mapper is a base class for all mappers.

Subclasses may define the following methods:

map_{datasetType}(self, dataId, write)

Map a dataset id for the given dataset type into a ButlerLocation.

If write=True, this mapping is for an output dataset.

query_{datasetType}(self, key, format, dataId)

Return the possible values for the format fields that would produce datasets at the granularity of key in combination with the provided partial dataId.

std_{datasetType}(self, item)

Standardize an object of the given dataset type.

Methods that must be overridden:

keys(self)

Return a list of the keys that can be used in data ids.

Other public methods:

__init__(self)

getDatasetTypes(self)

map(self, datasetType, dataId, write=False)

queryMetadata(self, datasetType, format, dataId)

canStandardize(self, datasetType)

standardize(self, datasetType, item, dataId)

validate(self, dataId)
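The naming convention described above (a public method that looks up a per-dataset-type method by name) can be illustrated with a minimal, self-contained sketch. `SketchMapper` and `ToyMapper` are hypothetical stand-ins written for this example, not the real `lsst.daf.persistence.Mapper`; the path template is invented, and a plain string stands in for a ButlerLocation.

```python
class SketchMapper:
    """Hypothetical stand-in for the base class, showing name-based dispatch."""

    def keys(self):
        # Mirrors "Methods that must be overridden" in the description above.
        raise NotImplementedError("keys() must be overridden by subclasses")

    def map(self, datasetType, dataId, write=False):
        # Look up map_<datasetType> on the subclass and delegate to it.
        func = getattr(self, "map_" + datasetType)
        return func(dataId, write)

    def canStandardize(self, datasetType):
        # A dataset type can be standardized if std_<datasetType> exists.
        return hasattr(self, "std_" + datasetType)


class ToyMapper(SketchMapper):
    """Hypothetical subclass defining methods for a single 'raw' dataset type."""

    def keys(self):
        return ["visit", "ccd"]

    def map_raw(self, dataId, write):
        # A real mapper would return a ButlerLocation; a path string stands in.
        return "raw/v{visit}/c{ccd}.fits".format(**dataId)

    def std_raw(self, item):
        # No-op standardization, present only so canStandardize("raw") is True.
        return item


mapper = ToyMapper()
location = mapper.map("raw", {"visit": 12, "ccd": 3})
```

With this arrangement, supporting a new dataset type only requires adding methods with the matching name prefix to the subclass; the public entry points stay unchanged.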

Constructor & Destructor Documentation

◆ Mapper()

| def lsst.daf.persistence.mapper.Mapper.Mapper | ( | cfg | ) | static |

Instantiate a Mapper from a configuration.

In some cases the cfg may have already been instantiated into a Mapper; this is allowed, and the input is simply returned.

:param cfg: the cfg for this mapper. It is recommended this be created by calling Mapper.cfg()

:return: a Mapper instance

Definition at line 71 of file mapper.py.

◆ __init__()

| def lsst.daf.persistence.mapper.Mapper.__init__ | ( | self, | |

| ** | kwargs | ||

| ) |

Member Function Documentation

◆ __getstate__()

| def lsst.daf.persistence.mapper.Mapper.__getstate__ | ( | self | ) |

◆ __new__()

| def lsst.daf.persistence.mapper.Mapper.__new__ | ( | cls, | |

| * | args, | ||

| ** | kwargs | ||

| ) |

Create a new Mapper, saving arguments for pickling. This is done in __new__ instead of __init__ to save the user from having to save the arguments themselves (either explicitly, or by calling the super's __init__ with all their *args, **kwargs). The resulting pickling system (of __new__, __getstate__ and __setstate__) is similar to how __reduce__ is usually used, except that we save the user from any responsibility (except when overriding __new__, but that is not common).

Definition at line 84 of file mapper.py.
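The pickling scheme described for `__new__` can be sketched as follows. This is an illustration of the general pattern under stated assumptions, not the code in mapper.py: `ArgSavingBase`, `ExampleMapper`, the `_arguments` attribute, and the `restore` helper are hypothetical names chosen for this example. `restore` mimics the steps the pickle module performs when loading such an object (a bare `__new__` followed by `__setstate__`).

```python
class ArgSavingBase:
    """Hypothetical base class that records constructor arguments in __new__."""

    def __new__(cls, *args, **kwargs):
        self = super().__new__(cls)
        # Save the arguments before __init__ runs, so subclasses need not
        # forward them or store them explicitly.
        self._arguments = (args, kwargs)
        return self

    def __getstate__(self):
        # The pickled state is just the saved constructor arguments.
        return self._arguments

    def __setstate__(self, state):
        # Rebuild the object by re-running __init__ with the saved arguments.
        self._arguments = state
        args, kwargs = state
        self.__init__(*args, **kwargs)


class ExampleMapper(ArgSavingBase):
    """Hypothetical subclass; it never touches the pickling machinery."""

    def __init__(self, root, calibRoot=None):
        self.root = root
        self.calibRoot = calibRoot


def restore(obj):
    """Mimic unpickling: create a bare instance, then apply the saved state."""
    clone = type(obj).__new__(type(obj))
    clone.__setstate__(obj.__getstate__())
    return clone


original = ExampleMapper("/data/repo", calibRoot="/data/calib")
clone = restore(original)
```

The trade-off of this design is that restoring an instance re-executes `__init__`, so any expensive setup in the constructor is repeated on load, while the subclass author does nothing extra to make the class picklable.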

◆ __setstate__()

| def lsst.daf.persistence.mapper.Mapper.__setstate__ | ( | self, | |

| state | |||

| ) |

◆ backup()

| def lsst.daf.persistence.mapper.Mapper.backup | ( | self, | |

| datasetType, | |||

| dataId | |||

| ) |

Rename any existing object with the given type and dataId. Not implemented in the base mapper.

◆ canStandardize()

| def lsst.daf.persistence.mapper.Mapper.canStandardize | ( | self, | |

| datasetType | |||

| ) |

Return True if this mapper can standardize an object of the given dataset type.

◆ getDatasetTypes()

| def lsst.daf.persistence.mapper.Mapper.getDatasetTypes | ( | self | ) |

◆ getRegistry()

| def lsst.daf.persistence.mapper.Mapper.getRegistry | ( | self | ) |

◆ keys()

| def lsst.daf.persistence.mapper.Mapper.keys | ( | self | ) |

Reimplemented in lsst.pipe.tasks.mocks.simpleMapper.SimpleMapper.

Definition at line 111 of file mapper.py.

◆ map()

| def lsst.daf.persistence.mapper.Mapper.map | ( | self, | |

| datasetType, | |||

| dataId, | |||

| write = False | |||

| ) |

Map a data id using the mapping method for its dataset type.

Parameters

----------

datasetType : string

The datasetType to map

dataId : DataId instance

The dataId to use when mapping

write : bool, optional

Indicates whether the map is being performed for a read operation (False) or a write operation (True)

Returns

-------

ButlerLocation or a list of ButlerLocation

The location(s) found for the map operation. If write is True, a list is returned. If write is False, a single ButlerLocation is returned.

Raises

------

NoResults

If no location was found for this map operation, the derived mapper class may raise a lsst.daf.persistence.NoResults exception. Butler catches this and will look in the next Repository if there is one.

Definition at line 137 of file mapper.py.
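The fall-through behaviour described under "Raises" can be sketched as a loop over mappers that moves on whenever one raises NoResults. Everything in this block is an assumption made for illustration: the `NoResults` class here is a local stand-in for lsst.daf.persistence.NoResults, the two mapper classes and the `locate` helper are hypothetical, and the real Butler search logic may differ.

```python
class NoResults(Exception):
    """Local stand-in for lsst.daf.persistence.NoResults."""


class EmptyRepoMapper:
    """Hypothetical mapper whose repository holds no matching dataset."""

    def map(self, datasetType, dataId, write=False):
        raise NoResults("no location for %s %r" % (datasetType, dataId))


class ParentRepoMapper:
    """Hypothetical mapper that can always resolve the dataset."""

    def map(self, datasetType, dataId, write=False):
        # A string stands in for a ButlerLocation.
        return "parent/%s/%d.fits" % (datasetType, dataId["visit"])


def locate(mappers, datasetType, dataId):
    """Try each mapper in order, skipping those that report NoResults."""
    for mapper in mappers:
        try:
            return mapper.map(datasetType, dataId)
        except NoResults:
            continue  # fall through to the next repository's mapper
    raise NoResults("no repository could map %s %r" % (datasetType, dataId))


found = locate([EmptyRepoMapper(), ParentRepoMapper()], "raw", {"visit": 7})
```

Only NoResults triggers the fall-through in this sketch; any other exception from a mapper propagates to the caller, which keeps genuine errors visible.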

◆ queryMetadata()

| def lsst.daf.persistence.mapper.Mapper.queryMetadata | ( | self, | |

| datasetType, | |||

| format, | |||

| dataId | |||

| ) |

◆ standardize()

| def lsst.daf.persistence.mapper.Mapper.standardize | ( | self, | |

| datasetType, | |||

| item, | |||

| dataId | |||

| ) |

◆ validate()

| def lsst.daf.persistence.mapper.Mapper.validate | ( | self, | |

| dataId | |||

| ) |

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/lsst-scipipe-0.7.0/Linux64/daf_persistence/22.0.1-4-g243d05b+871c1b8305/python/lsst/daf/persistence/mapper.py