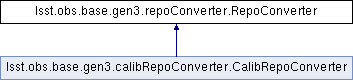

Inheritance diagram for lsst.obs.base.gen3.repoConverter.RepoConverter:

Public Member Functions | |

| def | __init__ (self, root, universe, baseDataId, mapper=None, skyMap=None) |

| def | addDatasetType (self, datasetTypeName, storageClass) |

| def | extractDatasetRef (self, fileNameInRoot) |

| def | walkRepo (self, directory=None, skipDirs=()) |

| def | convertRepo (self, butler, directory=None, transfer=None, formatter=None, skipDirs=()) |

Public Attributes | |

| root | |

| mapper | |

| universe | |

| baseDataId | |

| extractors | |

| skyMap | |

Static Public Attributes | |

| tuple | COADD_NAMES = ("deep", "goodSeeing", "dcr") |

| tuple | REPO_ROOT_FILES |

Detailed Description

A helper class that ingests (some of) the contents of a Gen2 data

repository into a Gen3 data repository.

Parameters

----------

root : `str`

Root of the Gen2 data repository.

universe : `lsst.daf.butler.DimensionUniverse`

Object containing all dimension definitions.

baseDataId : `dict`

Key-value pairs that may need to appear in the Gen3 data ID, but can

never be inferred from a Gen2 filename. This should always include

the instrument name (even Gen3 data IDs that don't involve the

instrument dimension have instrument-dependent Gen2 filenames) and

should also include the skymap name in order to process any data IDs

that involve tracts or patches.

mapper : `lsst.obs.base.CameraMapper`, optional

Object that defines Gen2 filename templates. Will be identified,

imported, and constructed from ``root`` if not provided.

skyMap : `lsst.skymap.BaseSkyMap`, optional

SkyMap that defines tracts and patches. Must be provided in order to

process datasets with a ``patch`` key in their data IDs.

Definition at line 142 of file repoConverter.py.

Constructor & Destructor Documentation

◆ __init__()

| def | lsst.obs.base.gen3.repoConverter.RepoConverter.__init__ (self, root, universe, baseDataId, mapper=None, skyMap=None) |

Definition at line 171 of file repoConverter.py.

Member Function Documentation

◆ addDatasetType()

| def | lsst.obs.base.gen3.repoConverter.RepoConverter.addDatasetType (self, datasetTypeName, storageClass) |

Add a dataset type to those recognized by the converter.

Parameters

----------

datasetTypeName : `str`

String name of the dataset type.

storageClass : `str` or `lsst.daf.butler.StorageClass`

Gen3 storage class of the dataset type.

Returns

-------

extractor : `DataIdExtractor`

The object that will be used to extract data IDs for instances of

this dataset type (also held internally, so the return value can

usually be ignored).

Definition at line 201 of file repoConverter.py.

◆ convertRepo()

| def | lsst.obs.base.gen3.repoConverter.RepoConverter.convertRepo (self, butler, directory=None, transfer=None, formatter=None, skipDirs=()) |

Ingest all recognized files into a Gen3 repository.

Parameters

----------

butler : `lsst.daf.butler.Butler`

Gen3 butler that files should be ingested into.

directory : `str`, optional

A subdirectory of the repository root to process, instead of

processing the entire repository.

transfer : `str`, optional

If not `None`, must be one of 'move', 'copy', 'hardlink', or

'symlink' indicating how to transfer the file.

formatter : `lsst.daf.butler.Formatter`, optional

Formatter that should be used to retrieve the Dataset. If not

provided, the formatter will be constructed according to

Datastore configuration. This should only be used when converting

only a single dataset type or multiple dataset types of the same

storage class.

skipDirs : sequence of `str`

Subdirectories that should be skipped.

Definition at line 286 of file repoConverter.py.
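The four ``transfer`` modes listed above map naturally onto standard-library file operations. The following is a minimal sketch of that mapping, not the code in repoConverter.py or in the Gen3 datastore. The function name `transfer_file_sketch` is hypothetical, and treating `None` as "leave the file in place" is an assumption.

```python
import os
import shutil


def transfer_file_sketch(src, dst, transfer=None):
    """Place ``src`` at ``dst`` using one of the documented transfer modes.

    Hypothetical helper for illustration only; returns the resulting path.
    """
    if transfer is None:
        # Assumption: no transfer means the file is used where it already is.
        return src
    actions = {
        "move": shutil.move,
        "copy": shutil.copy2,
        "hardlink": os.link,
        # Link to the absolute source so the symlink does not depend on
        # the directory it is created in.
        "symlink": lambda s, d: os.symlink(os.path.abspath(s), d),
    }
    try:
        action = actions[transfer]
    except KeyError:
        raise ValueError(f"Unrecognized transfer mode: {transfer!r}") from None
    # Create the destination directory if it does not exist yet.
    os.makedirs(os.path.dirname(dst) or ".", exist_ok=True)
    action(src, dst)
    return dst
```

Validating the mode before touching the filesystem means an unsupported value fails without creating any directories.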

◆ extractDatasetRef()

| def | lsst.obs.base.gen3.repoConverter.RepoConverter.extractDatasetRef (self, fileNameInRoot) |

Extract a Gen3 `~lsst.daf.butler.DatasetRef` from a filename in a

Gen2 data repository.

Parameters

----------

fileNameInRoot : `str`

Name of the file, relative to the root of its Gen2 repository.

Returns

-------

ref : `lsst.daf.butler.DatasetRef` or `None`

Reference to the Gen3 dataset that would be created by converting

this file, or `None` if the file is not recognized as an instance

of a dataset type known to this converter.

Definition at line 223 of file repoConverter.py.
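The idea of recovering a data ID from a filename and completing it with ``baseDataId`` can be sketched with regular expressions. This is an illustrative simplification, not the `DataIdExtractor` implementation: the templates, the instrument and skymap names, and the function `extract_data_id_sketch` are all hypothetical, and the sketch returns a plain tuple rather than a `DatasetRef`.

```python
import re

# Hypothetical Gen2-style filename templates, keyed by dataset type name.
TEMPLATES = {
    "raw": r"raw/v(?P<visit>\d+)/c(?P<detector>\d+)\.fits",
    "deepCoadd": r"deepCoadd/(?P<tract>\d+)/(?P<patch>\d+,\d+)\.fits",
}


def extract_data_id_sketch(file_name_in_root, base_data_id):
    """Return ``(dataset_type_name, data_id)`` or `None` if unrecognized."""
    for dataset_type, pattern in TEMPLATES.items():
        match = re.fullmatch(pattern, file_name_in_root)
        if match is None:
            continue
        # Start from the keys that can never be inferred from the filename
        # (simplification: every base key is copied into every data ID).
        data_id = dict(base_data_id)
        for key, value in match.groupdict().items():
            # Purely numeric fields become integers; others stay strings.
            data_id[key] = int(value) if value.isdigit() else value
        return dataset_type, data_id
    # No template matched: the file is not a known dataset type.
    return None


base = {"instrument": "HypoCam", "skymap": "hypoSkyMap"}
print(extract_data_id_sketch("raw/v0012/c03.fits", base))
print(extract_data_id_sketch("README.txt", base))
```

Returning `None` for unmatched files mirrors the documented contract, which lets a caller skip unrecognized files without raising.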

◆ walkRepo()

| def | lsst.obs.base.gen3.repoConverter.RepoConverter.walkRepo (self, directory=None, skipDirs=()) |

Recursively walk (a subset of) a Gen2 data repository, yielding files

that may be convertible.

Parameters

----------

directory : `str`, optional

A subdirectory of the repository root to process, instead of

processing the entire repository.

skipDirs : sequence of `str`

Subdirectories that should be skipped.

Yields

------

fileNameInRoot : `str`

Name of a file in the repository, relative to the root of the

repository.

Definition at line 249 of file repoConverter.py.
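A generator with this contract can be sketched on top of `os.walk`. The code below is an approximation under stated assumptions, not the method defined in repoConverter.py: the name `walk_repo_sketch` is hypothetical, ``skip_dirs`` entries are assumed to be relative to the repository root, and skipping the `REPO_ROOT_FILES` entries at the top level is an assumption about how that attribute is used.

```python
import os

# Copied from the REPO_ROOT_FILES value documented on this page.
REPO_ROOT_FILES = ("registry.sqlite3", "_mapper", "repositoryCfg.yaml",
                   "calibRegistry.sqlite3", "_parent")


def walk_repo_sketch(root, directory=None, skip_dirs=()):
    """Yield file names relative to ``root``, pruning skipped directories."""
    start = root if directory is None else os.path.join(root, directory)
    skip = {os.path.normpath(d) for d in skip_dirs}
    for dir_path, dir_names, file_names in os.walk(start):
        rel_dir = os.path.relpath(dir_path, root)
        # Prune in place so os.walk never descends into skipped directories.
        dir_names[:] = sorted(
            d for d in dir_names
            if os.path.normpath(os.path.join(rel_dir, d)) not in skip
        )
        for name in sorted(file_names):
            # Assumption: bookkeeping files at the repository root are ignored.
            if rel_dir == os.curdir and name in REPO_ROOT_FILES:
                continue
            yield os.path.normpath(os.path.join(rel_dir, name))
```

Assigning to `dir_names[:]` rather than rebinding the name is what makes the pruning take effect, because `os.walk` reads the same list object to decide where to descend next.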

Member Data Documentation

◆ baseDataId

| lsst.obs.base.gen3.repoConverter.RepoConverter.baseDataId |

Definition at line 184 of file repoConverter.py.

◆ COADD_NAMES

| tuple lsst.obs.base.gen3.repoConverter.RepoConverter.COADD_NAMES = ("deep", "goodSeeing", "dcr") | static |

Definition at line 167 of file repoConverter.py.

◆ extractors

| lsst.obs.base.gen3.repoConverter.RepoConverter.extractors |

Definition at line 185 of file repoConverter.py.

◆ mapper

| lsst.obs.base.gen3.repoConverter.RepoConverter.mapper |

Definition at line 182 of file repoConverter.py.

◆ REPO_ROOT_FILES

| tuple lsst.obs.base.gen3.repoConverter.RepoConverter.REPO_ROOT_FILES | static |

Initial value:

= ("registry.sqlite3", "_mapper", "repositoryCfg.yaml", "calibRegistry.sqlite3", "_parent")

Definition at line 168 of file repoConverter.py.

◆ root

| lsst.obs.base.gen3.repoConverter.RepoConverter.root |

Definition at line 172 of file repoConverter.py.

◆ skyMap

| lsst.obs.base.gen3.repoConverter.RepoConverter.skyMap |

Definition at line 199 of file repoConverter.py.

◆ universe

| lsst.obs.base.gen3.repoConverter.RepoConverter.universe |

Definition at line 183 of file repoConverter.py.

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/Linux64/obs_base/18.1.0-18-gb5d19ff+1/python/lsst/obs/base/gen3/repoConverter.py

Generated on Mon Sep 23 2019 07:03:02 for LSSTApplications by Doxygen 1.8.13