Public Member Functions

| def | __init__ (self, datasetType, policy, registry, rootStorage, provided=None) |

| def | template (self) |

| def | keys (self) |

| def | map (self, mapper, dataId, write=False) |

| def | lookup (self, properties, dataId) |

| def | have (self, properties, dataId) |

| def | need (self, properties, dataId) |

Public Attributes

| datasetType | |

| registry | |

| rootStorage | |

| keyDict | |

| python | |

| persistable | |

| storage | |

| level | |

| tables | |

| range | |

| columns | |

| obsTimeName | |

| recipe | |

Detailed Description

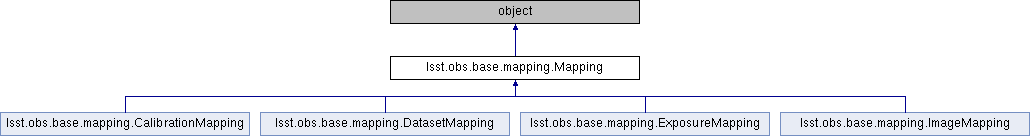

Mapping is a base class for all mappings. Mappings are used by the Mapper to map (determine a path to some data given some identifiers) and standardize (convert data into some standard format or type) data, and to query the associated registry to see what data is available.

Subclasses must specify self.storage or else override self.map().

Public methods: lookup, have, need, keys, map

Mappings are specified mainly by policy. A Mapping policy should consist of:

- template (string): a Python string providing the filename for that particular dataset type based on some data identifiers. In the case of redundancy in the path (e.g., file uniquely specified by the exposure number, but filter in the path), the redundant/dependent identifiers can be looked up in the registry.
- python (string): the Python type for the retrieved data (e.g. lsst.afw.image.ExposureF)
- persistable (string): the Persistable registration for the on-disk data (e.g. ImageU)
- storage (string, optional): Storage type for this dataset type (e.g. "FitsStorage")
- level (string, optional): the level in the camera hierarchy at which the data is stored (Amp, Ccd or skyTile), if relevant
- tables (string, optional): a whitespace-delimited list of tables in the registry that can be NATURAL JOIN-ed to look up additional information.
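As a minimal sketch of how a `template` entry might resolve to a filename, assuming templates use Python %-style named placeholders filled from the data identifier. The template string and identifier values below are hypothetical and not taken from any real policy.

```python
# Hypothetical template; real policies define their own per dataset type.
template = "raw/v%(visit)d_f%(filter)s/c%(ccd)02d.fits"

# A complete data identifier supplies every placeholder in the template.
data_id = {"visit": 1001, "filter": "r", "ccd": 7}

# Python's %-formatting with a dict fills in the named placeholders.
path = template % data_id
print(path)
```

Here `filter` is redundant if the visit number already identifies the file, which is the case where a registry lookup could supply it.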

Parameters

----------

datasetType : `str`

Butler dataset type to be mapped.

policy : `daf_persistence.Policy`

Mapping Policy.

registry : `lsst.obs.base.Registry`

Registry for metadata lookups.

rootStorage : Storage subclass instance

Interface to persisted repository data.

provided : `list` of `str`, optional

Keys provided by the mapper.

Definition at line 33 of file mapping.py.

Constructor & Destructor Documentation

◆ __init__()

| def lsst.obs.base.mapping.Mapping.__init__ | ( | self, datasetType, policy, registry, rootStorage, provided = None | ) |

Definition at line 84 of file mapping.py.

Member Function Documentation

◆ have()

| def lsst.obs.base.mapping.Mapping.have | ( | self, properties, dataId | ) |

Returns whether the provided data identifier has all the properties in the provided list.

Parameters

----------

properties : `list` of `str`

Properties required.

dataId : `dict`

Dataset identifier.

Returns

-------

`bool`

True if all properties are present.

Definition at line 275 of file mapping.py.
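The check described above can be sketched as a standalone function. This is an illustrative stand-in for the documented behavior, not the code in mapping.py; the function is written without `self` and the sample identifiers are hypothetical.

```python
def have(properties, data_id):
    """Return True if every requested property is a key of data_id."""
    return all(prop in data_id for prop in properties)


# Hypothetical data identifier used only for illustration.
data_id = {"visit": 1001, "ccd": 7}

has_both = have(["visit", "ccd"], data_id)   # both keys are present
has_filter = have(["filter"], data_id)       # "filter" is missing
```

Only the presence of each key is tested; the values in the identifier are not inspected.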

◆ keys()

| def lsst.obs.base.mapping.Mapping.keys | ( | self | ) |

Return the dict of keys and value types required for this mapping.

Definition at line 135 of file mapping.py.
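One plausible way to obtain such a dict of keys and value types is to scan the template for named placeholders. The sketch below assumes %-style placeholders such as `%(visit)d`; the helper name `template_keys`, its conversion table, and the sample template are hypothetical rather than the library's implementation.

```python
import re


def template_keys(template):
    """Hypothetical helper: map each %(name)X placeholder to a Python type."""
    # Conversion codes this sketch recognizes; anything else falls back to str.
    type_for_code = {"d": int, "i": int, "f": float, "e": float, "g": float, "s": str}
    # Placeholder name, optional flags/width, then the conversion letter.
    pattern = r"%\((\w+)\)[^a-zA-Z]*([a-zA-Z])"
    return {
        name: type_for_code.get(code, str)
        for name, code in re.findall(pattern, template)
    }


keys = template_keys("raw/v%(visit)d_f%(filter)s/c%(ccd)02d.fits")
```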

◆ lookup()

| def lsst.obs.base.mapping.Mapping.lookup | ( | self, properties, dataId | ) |

Look up properties in a metadata registry given a partial dataset identifier.

Parameters

----------

properties : `list` of `str`

What to look up.

dataId : `dict`

Dataset identifier.

Returns

-------

`list` of `tuple`

Values of properties.

Reimplemented in lsst.obs.base.mapping.CalibrationMapping.

Definition at line 192 of file mapping.py.
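To illustrate the kind of query a registry lookup implies, here is a self-contained sketch using an in-memory SQLite database and a NATURAL JOIN over two tables. The table names, columns, rows, and the helper `lookup_properties` are all assumptions made for this example; the real registry schema and query logic may differ.

```python
import sqlite3


def lookup_properties(conn, tables, properties, data_id):
    """Hypothetical helper: select `properties` from NATURAL JOIN-ed tables.

    Column and table names are interpolated directly, so they must come
    from trusted configuration; the identifier values are bound as parameters.
    """
    sql = "SELECT DISTINCT {} FROM {}".format(
        ", ".join(properties), " NATURAL JOIN ".join(tables)
    )
    if data_id:
        sql += " WHERE " + " AND ".join(f"{key} = ?" for key in data_id)
    return conn.execute(sql, tuple(data_id.values())).fetchall()


# Hypothetical two-table registry sharing the `visit` column.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE raw (visit INTEGER, ccd INTEGER);
    CREATE TABLE raw_visit (visit INTEGER, filter TEXT);
    INSERT INTO raw VALUES (1001, 0), (1001, 1), (1002, 0);
    INSERT INTO raw_visit VALUES (1001, 'r'), (1002, 'g');
    """
)

rows = lookup_properties(conn, ["raw", "raw_visit"], ["filter"], {"visit": 1001})
```

The result is a list of tuples, one per distinct match, with values in the same order as the requested properties.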

◆ map()

| def lsst.obs.base.mapping.Mapping.map | ( | self, mapper, dataId, write = False | ) |

Standard implementation of map function.

Parameters

----------

mapper : `lsst.daf.persistence.Mapper`

Object to be mapped.

dataId : `dict`

Dataset identifier.

Returns

-------

`lsst.daf.persistence.ButlerLocation`

Location of object that was mapped.

Reimplemented in lsst.obs.base.mapping.CalibrationMapping.

Definition at line 140 of file mapping.py.

◆ need()

| def lsst.obs.base.mapping.Mapping.need | ( | self, properties, dataId | ) |

Ensures all properties in the provided list are present in the data identifier, looking them up as needed. This is only possible for the case where the data identifies a single exposure.

Parameters

----------

properties : `list` of `str`

Properties required.

dataId : `dict`

Partial dataset identifier.

Returns

-------

`dict`

Copy of dataset identifier with enhanced values.

Definition at line 296 of file mapping.py.
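The fill-in behavior can be sketched as follows. This is an illustration under stated assumptions, not the code in mapping.py: the lookup is passed in as a callable, `fake_lookup` and its data are hypothetical, and raising `LookupError` when the match is not unique is an assumed way of handling identifiers that do not pin down a single exposure.

```python
def need(properties, data_id, lookup):
    """Return a copy of data_id with any missing properties looked up."""
    new_id = dict(data_id)  # never modify the caller's identifier
    missing = [prop for prop in properties if prop not in new_id]
    if not missing:
        return new_id
    rows = lookup(missing, new_id)
    if len(rows) != 1:
        # Assumption: an ambiguous or empty lookup is treated as an error.
        raise LookupError(f"No unique lookup for {missing}: {len(rows)} matches")
    new_id.update(zip(missing, rows[0]))
    return new_id


def fake_lookup(properties, data_id):
    """Hypothetical registry stand-in keyed by visit number."""
    table = {1001: {"filter": "r", "ccd": 7}}
    record = table.get(data_id.get("visit"))
    return [tuple(record[prop] for prop in properties)] if record else []


partial_id = {"visit": 1001}
full_id = need(["visit", "filter"], partial_id, fake_lookup)
```

The original `partial_id` is left untouched; only the returned copy gains the looked-up `filter` value.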

◆ template()

| def lsst.obs.base.mapping.Mapping.template | ( | self | ) |

Definition at line 128 of file mapping.py.

Member Data Documentation

◆ columns

| lsst.obs.base.mapping.Mapping.columns |

Definition at line 123 of file mapping.py.

◆ datasetType

| lsst.obs.base.mapping.Mapping.datasetType |

Definition at line 89 of file mapping.py.

◆ keyDict

| lsst.obs.base.mapping.Mapping.keyDict |

Definition at line 102 of file mapping.py.

◆ level

| lsst.obs.base.mapping.Mapping.level |

Definition at line 117 of file mapping.py.

◆ obsTimeName

| lsst.obs.base.mapping.Mapping.obsTimeName |

Definition at line 124 of file mapping.py.

◆ persistable

| lsst.obs.base.mapping.Mapping.persistable |

Definition at line 114 of file mapping.py.

◆ python

| lsst.obs.base.mapping.Mapping.python |

Definition at line 113 of file mapping.py.

◆ range

| lsst.obs.base.mapping.Mapping.range |

Definition at line 122 of file mapping.py.

◆ recipe

| lsst.obs.base.mapping.Mapping.recipe |

Definition at line 125 of file mapping.py.

◆ registry

| lsst.obs.base.mapping.Mapping.registry |

Definition at line 90 of file mapping.py.

◆ rootStorage

| lsst.obs.base.mapping.Mapping.rootStorage |

Definition at line 91 of file mapping.py.

◆ storage

| lsst.obs.base.mapping.Mapping.storage |

Definition at line 115 of file mapping.py.

◆ tables

| lsst.obs.base.mapping.Mapping.tables |

Definition at line 119 of file mapping.py.

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/0.2.1/Linux64/obs_base/21.0.0-27-gbbd0d29+ae871e0f33/python/lsst/obs/base/mapping.py