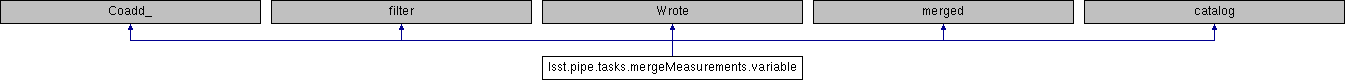

Inheritance diagram for lsst.pipe.tasks.mergeMeasurements.variable:

Detailed Description

    _DefaultName = "mergeCoaddMeasurements"
    ConfigClass = MergeMeasurementsConfig
    RunnerClass = MergeSourcesRunner
    inputDataset = "meas"
    outputDataset = "ref"
    getSchemaCatalogs = _makeGetSchemaCatalogs("ref")

    @classmethod
    def _makeArgumentParser(cls):
        return makeMergeArgumentParser(cls._DefaultName, cls.inputDataset)

    def getInputSchema(self, butler=None, schema=None):
        return getInputSchema(self, butler, schema)

    def runQuantum(self, butlerQC, inputRefs, outputRefs):
        inputs = butlerQC.get(inputRefs)
        dataIds = (ref.dataId for ref in inputRefs.catalogs)
        catalogDict = {dataId['band']: cat for dataId, cat in zip(dataIds, inputs['catalogs'])}
        inputs['catalogs'] = catalogDict
        outputs = self.run(**inputs)
        butlerQC.put(outputs, outputRefs)
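
The regrouping step in `runQuantum` can be illustrated without a butler. The following is a minimal sketch, assuming each data ID behaves like a mapping with a `band` key. `FakeRef`, `key_catalogs_by_band`, and the placeholder strings are hypothetical stand-ins for the real dataset references and catalogs, not part of `pipe_tasks`.

```python
from dataclasses import dataclass


@dataclass
class FakeRef:
    """Hypothetical stand-in for a dataset reference carrying a dataId mapping."""
    dataId: dict


def key_catalogs_by_band(refs, catalogs):
    """Pair each catalog with the band of its reference, preserving input order."""
    data_ids = (ref.dataId for ref in refs)
    return {data_id["band"]: cat for data_id, cat in zip(data_ids, catalogs)}


refs = [FakeRef({"band": "g", "patch": 42}), FakeRef({"band": "r", "patch": 42})]
catalogs = ["catalog for g", "catalog for r"]  # placeholders, not real catalogs
by_band = key_catalogs_by_band(refs, catalogs)
print(by_band)
```

Keying by band is what lets `run` later pick catalogs out in `priorityList` order, independent of the order in which the inputs arrived.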

    def __init__(self, butler=None, schema=None, initInputs=None, **kwargs):
        super().__init__(**kwargs)

        if initInputs is not None:
            inputSchema = initInputs['inputSchema'].schema
        else:
            inputSchema = self.getInputSchema(butler=butler, schema=schema)

        self.schemaMapper = afwTable.SchemaMapper(inputSchema, True)
        self.schemaMapper.addMinimalSchema(inputSchema, True)
        self.instFluxKey = inputSchema.find(self.config.snName + "_instFlux").getKey()
        self.instFluxErrKey = inputSchema.find(self.config.snName + "_instFluxErr").getKey()
        self.fluxFlagKey = inputSchema.find(self.config.snName + "_flag").getKey()

        self.flagKeys = {}
        for band in self.config.priorityList:
            outputKey = self.schemaMapper.editOutputSchema().addField(
                "merge_measurement_%s" % band,
                type="Flag",
                doc="Flag field set if the measurements here are from the %s filter" % band
            )
            peakKey = inputSchema.find("merge_peak_%s" % band).key
            footprintKey = inputSchema.find("merge_footprint_%s" % band).key
            self.flagKeys[band] = pipeBase.Struct(peak=peakKey, footprint=footprintKey, output=outputKey)
        self.schema = self.schemaMapper.getOutputSchema()

        self.pseudoFilterKeys = []
        for filt in self.config.pseudoFilterList:
            try:
                self.pseudoFilterKeys.append(self.schema.find("merge_peak_%s" % filt).getKey())
            except Exception as e:
                self.log.warning("merge_peak is not set for pseudo-filter %s: %s", filt, e)

        self.badFlags = {}
        for flag in self.config.flags:
            try:
                self.badFlags[flag] = self.schema.find(flag).getKey()
            except KeyError as exc:
                self.log.warning("Can't find flag %s in schema: %s", flag, exc)
        self.outputSchema = afwTable.SourceCatalog(self.schema)
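
The constructor tolerates configured flags that are missing from the schema: it logs a warning and carries on with the flags it did find. A minimal sketch of that pattern follows, assuming a plain `dict` in place of the `afwTable` schema so that a missing name raises `KeyError`. The helper `find_flag_keys` and the sample field names are hypothetical.

```python
import logging

log = logging.getLogger("mergeMeasurementsSketch")


def find_flag_keys(schema, flag_names):
    """Return {flag name: key} for flags present in schema; warn about the rest."""
    keys = {}
    for name in flag_names:
        try:
            keys[name] = schema[name]
        except KeyError as exc:
            # A missing flag is not fatal; it simply cannot veto a measurement.
            log.warning("Can't find flag %s in schema: %s", name, exc)
    return keys


# Sample schema: field names and integer keys are illustrative only.
schema = {"base_PixelFlags_flag_edge": 0, "base_PixelFlags_flag_bad": 1}
bad_flags = find_flag_keys(schema, ["base_PixelFlags_flag_edge", "not_a_real_flag"])
```

Only flags that resolve to a key end up in the result, so later per-record checks never have to handle a missing field.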

    def runDataRef(self, patchRefList):
        catalogs = dict(readCatalog(self, patchRef) for patchRef in patchRefList)
        mergedCatalog = self.run(catalogs).mergedCatalog
        self.write(patchRefList[0], mergedCatalog)

    def run(self, catalogs):

        # Put catalogs, filters in priority order
        orderedCatalogs = [catalogs[band] for band in self.config.priorityList if band in catalogs.keys()]
        orderedKeys = [self.flagKeys[band] for band in self.config.priorityList if band in catalogs.keys()]

        mergedCatalog = afwTable.SourceCatalog(self.schema)
        mergedCatalog.reserve(len(orderedCatalogs[0]))

        idKey = orderedCatalogs[0].table.getIdKey()
        for catalog in orderedCatalogs[1:]:
            if numpy.any(orderedCatalogs[0].get(idKey) != catalog.get(idKey)):
                raise ValueError("Error in inputs to MergeCoaddMeasurements: source IDs do not match")

        # This first zip iterates over all the catalogs simultaneously, yielding a sequence of one
        # record for each band, in priority order.
        for orderedRecords in zip(*orderedCatalogs):
            maxSNRecord = None
            maxSNFlagKeys = None
            maxSN = 0.
            priorityRecord = None
            priorityFlagKeys = None
            prioritySN = 0.
            hasPseudoFilter = False

            # Now we iterate over those record-band pairs, keeping track of the priority and the
            # largest S/N band.
            for inputRecord, flagKeys in zip(orderedRecords, orderedKeys):
                parent = (inputRecord.getParent() == 0 and inputRecord.get(flagKeys.footprint))
                child = (inputRecord.getParent() != 0 and inputRecord.get(flagKeys.peak))

                if not (parent or child):
                    for pseudoFilterKey in self.pseudoFilterKeys:
                        if inputRecord.get(pseudoFilterKey):
                            hasPseudoFilter = True
                            priorityRecord = inputRecord
                            priorityFlagKeys = flagKeys
                            break
                    if hasPseudoFilter:
                        break

                isBad = any(inputRecord.get(flag) for flag in self.badFlags)
                if isBad or inputRecord.get(self.fluxFlagKey) or inputRecord.get(self.instFluxErrKey) == 0:
                    sn = 0.
                else:
                    sn = inputRecord.get(self.instFluxKey)/inputRecord.get(self.instFluxErrKey)
                if numpy.isnan(sn) or sn < 0.:
                    sn = 0.
                if (parent or child) and priorityRecord is None:
                    priorityRecord = inputRecord
                    priorityFlagKeys = flagKeys
                    prioritySN = sn
                if sn > maxSN:
                    maxSNRecord = inputRecord
                    maxSNFlagKeys = flagKeys
                    maxSN = sn

            # If the priority band has a low S/N we would like to choose the band with the highest S/N as
            # the reference band instead. However, we only want to choose the highest S/N band if it is
            # significantly better than the priority band. Therefore, to choose a band other than the
            # priority, we require that the priority S/N is below the minimum threshold and that the
            # difference between the priority and highest S/N is larger than the difference threshold.
            #
            # For pseudo-filter objects we always choose the first band in the priority list.
            bestRecord = None
            bestFlagKeys = None
            if hasPseudoFilter:
                bestRecord = priorityRecord
                bestFlagKeys = priorityFlagKeys
            elif (prioritySN < self.config.minSN and (maxSN - prioritySN) > self.config.minSNDiff
                  and maxSNRecord is not None):
                bestRecord = maxSNRecord
                bestFlagKeys = maxSNFlagKeys
            elif priorityRecord is not None:
                bestRecord = priorityRecord
                bestFlagKeys = priorityFlagKeys

            if bestRecord is not None and bestFlagKeys is not None:
                outputRecord = mergedCatalog.addNew()
                outputRecord.assign(bestRecord, self.schemaMapper)
                outputRecord.set(bestFlagKeys.output, True)
            else:  # if we didn't find any records
                raise ValueError("Error in inputs to MergeCoaddMeasurements: no valid reference for %s" %
                                 inputRecord.getId())

        # more checking for sane inputs, since zip silently iterates over the smallest sequence
        for inputCatalog in orderedCatalogs:
            if len(mergedCatalog) != len(inputCatalog):
                raise ValueError("Mismatch between catalog sizes: %s != %s" %
                                 (len(mergedCatalog), len(inputCatalog)))

        return pipeBase.Struct(
            mergedCatalog=mergedCatalog
        )
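
The reference-band choice made inside `run` can be sketched in plain Python. This is a simplified illustration, not the task's implementation: it assumes every band has a valid detection (so the first entry is the priority band), it ignores pseudo-filters, and it takes precomputed fluxes instead of catalog records. The function names and the threshold values in the usage lines are hypothetical.

```python
import math


def signal_to_noise(flux, flux_err, flagged=False):
    """Return flux / flux_err, or 0.0 when the measurement is unusable."""
    if flagged or flux_err == 0:
        return 0.0
    sn = flux / flux_err
    if math.isnan(sn) or sn < 0.0:
        return 0.0
    return sn


def choose_reference_band(measurements, min_sn, min_sn_diff):
    """Pick a reference band from (band, sn) pairs given in priority order.

    The priority band wins unless its S/N is below min_sn AND another band
    beats it by more than min_sn_diff.
    """
    if not measurements:
        raise ValueError("no valid reference")
    priority_band, priority_sn = measurements[0]
    max_band, max_sn = None, 0.0
    for band, sn in measurements:
        if sn > max_sn:
            max_band, max_sn = band, sn
    if (priority_sn < min_sn and (max_sn - priority_sn) > min_sn_diff
            and max_band is not None):
        return max_band
    return priority_band


# Example thresholds, chosen only for illustration.
strong = choose_reference_band([("i", 12.0), ("r", 20.0)], min_sn=10.0, min_sn_diff=3.0)
weak = choose_reference_band([("i", 4.0), ("r", 20.0)], min_sn=10.0, min_sn_diff=3.0)
close = choose_reference_band([("i", 8.0), ("r", 9.5)], min_sn=10.0, min_sn_diff=3.0)
```

Requiring both conditions keeps the reference band stable: a faint priority band is replaced only when another band is clearly better, not when the two are comparably noisy.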

def write(self, patchRef, catalog):

Definition at line 395 of file mergeMeasurements.py.

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/lsst-scipipe-0.7.0/Linux64/pipe_tasks/21.0.0-172-gfb10e10a+18fedfabac/python/lsst/pipe/tasks/mergeMeasurements.py