| Public Member Functions | |
|---|---|
| def | `__init__(self, schema, **kwds)` |
| def | `run(self, exposure, catalog)` |
| def | `emptyMetadata(self)` |
| def | `getSchemaCatalogs(self)` |
| def | `getAllSchemaCatalogs(self)` |
| def | `getFullMetadata(self)` |
| def | `getFullName(self)` |
| def | `getName(self)` |
| def | `getTaskDict(self)` |
| def | `makeSubtask(self, name, **keyArgs)` |
| def | `timer(self, name, logLevel=Log.DEBUG)` |
| def | `makeField(cls, doc)` |
| def | `__reduce__(self)` |

| Public Attributes |
|---|
| refFluxKeys |
| toCorrect |
| metadata |
| log |
| config |

| Static Public Attributes |
|---|
| `ConfigClass = MeasureApCorrConfig` |

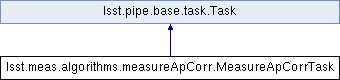

Detailed Description

Task to measure aperture correction

Definition at line 110 of file measureApCorr.py.

Constructor & Destructor Documentation

◆ __init__()

def lsst.meas.algorithms.measureApCorr.MeasureApCorrTask.__init__(self, schema, **kwds)

Construct a MeasureApCorrTask.

For every name in `lsst.meas.base.getApCorrNameSet()`:

- If the corresponding flux fields exist in the schema:
  - Add a new field `apcorr_{name}_used`.
  - Add an entry to the `self.toCorrect` dict.
- Otherwise, silently skip the name.

Definition at line 116 of file measureApCorr.py.
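The constructor's per-name loop can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the task's code: the schema is modelled as a `set` of field names rather than an `lsst.afw.table.Schema`, the algorithm names are passed in rather than obtained from `lsst.meas.base.getApCorrNameSet()`, and "the corresponding flux fields" are assumed to be `{name}_instFlux` and `{name}_instFluxErr` (the naming shown in the `run()` documentation). The function name `build_to_correct` is hypothetical.

```python
from typing import Dict, Iterable, Set, Tuple


def build_to_correct(
    field_names: Set[str], ap_corr_names: Iterable[str]
) -> Dict[str, Tuple[str, str]]:
    """Hypothetical sketch of the MeasureApCorrTask constructor loop.

    ``field_names`` stands in for the schema and is updated in place when a
    new ``apcorr_{name}_used`` field is "added".
    """
    to_correct: Dict[str, Tuple[str, str]] = {}
    for name in ap_corr_names:
        # Assumed field naming; the real task looks keys up in an afw schema.
        flux_field = f"{name}_instFlux"
        err_field = f"{name}_instFluxErr"
        if flux_field not in field_names or err_field not in field_names:
            continue  # silently skip names whose flux fields are missing
        field_names.add(f"apcorr_{name}_used")  # add the new "used" field
        to_correct[name] = (flux_field, err_field)
    return to_correct
```

With a schema that only has PSF-flux fields, a call such as `build_to_correct(schema, ["base_PsfFlux", "base_GaussianFlux"])` keeps the first name and skips the second without complaint.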

Member Function Documentation

◆ __reduce__()

[inherited]

Pickler: returns the callable and arguments that `pickle` uses to rebuild this task.

Reimplemented in lsst.pipe.drivers.multiBandDriver.MultiBandDriverTask, and lsst.pipe.drivers.coaddDriver.CoaddDriverTask.
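Python's pickle protocol lets `__reduce__` return a callable plus the arguments needed to rebuild an object, so only those arguments are serialized. The sketch below shows that pattern on a hypothetical `ConfiguredTask` class rebuilt from its constructor arguments; it illustrates the protocol only and is not the code of `Task.__reduce__`.

```python
class ConfiguredTask:
    """Hypothetical task-like class rebuilt from its constructor arguments."""

    def __init__(self, config, name):
        self.config = config
        self.name = name
        # Derived state that pickle could not serialize by itself (a lambda).
        # Because __reduce__ re-runs the constructor, it is never pickled.
        self._callback = lambda: self.name

    def __reduce__(self):
        # (callable, args): unpickling calls ConfiguredTask(config, name).
        return (self.__class__, (self.config, self.name))
```

With this in place, `pickle.dumps(task)` stores only the config and name, and `pickle.loads` reconstructs the object by calling the constructor again.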

◆ emptyMetadata()

[inherited]

Empty (clear) the metadata for this Task and all sub-Tasks.

Definition at line 166 of file task.py.

◆ getAllSchemaCatalogs()

[inherited]

Get schema catalogs for all tasks in the hierarchy, combining the results into a single dict.

Returns
-------
schemacatalogs : `dict`
Keys are butler dataset type, values are an empty catalog (an instance of the appropriate `lsst.afw.table` Catalog type) for all tasks in the hierarchy, from the top-level task down through all subtasks.

Notes
-----
This method may be called on any task in the hierarchy; it will return the same answer, regardless.

The default implementation should always suffice. If your subtask uses schemas, then override `Task.getSchemaCatalogs`, not this method.

Definition at line 204 of file task.py.
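The combining step can be sketched with a hypothetical `HierarchyTask` class whose `schema_catalogs` dict plays the role of each task's `getSchemaCatalogs()` result. Climbing to the top-level task before collecting is what makes the answer the same no matter which task it is called on. Plain lists stand in for empty `lsst.afw.table` catalogs, and the dataset type names used with it are made up.

```python
from typing import Dict, List, Optional


class HierarchyTask:
    """Hypothetical stand-in for a Task node in a task hierarchy."""

    def __init__(self, name: str, parent: Optional["HierarchyTask"] = None,
                 schema_catalogs: Optional[Dict[str, list]] = None):
        self.name = name
        self.parent = parent
        self.subtasks: List["HierarchyTask"] = []
        # Per-task result, playing the role of getSchemaCatalogs().
        self.schema_catalogs = dict(schema_catalogs or {})
        if parent is not None:
            parent.subtasks.append(self)


def all_schema_catalogs(task: HierarchyTask) -> Dict[str, list]:
    """Combine the schema catalogs of every task in ``task``'s hierarchy."""
    root = task
    while root.parent is not None:  # climb to the top-level task
        root = root.parent
    combined: Dict[str, list] = {}

    def collect(node: HierarchyTask) -> None:
        combined.update(node.schema_catalogs)  # parent before its subtasks
        for sub in node.subtasks:
            collect(sub)

    collect(root)
    return combined
```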

◆ getFullMetadata()

[inherited]

Get metadata for all tasks.

Returns
-------
metadata : `lsst.daf.base.PropertySet`
The `~lsst.daf.base.PropertySet` keys are the full task name. Values are metadata for the top-level task and all subtasks, sub-subtasks, etc.

Notes
-----
The returned metadata includes timing information (if ``@timer.timeMethod`` is used) and any metadata set by the task. The name of each item consists of the full task name with ``.`` replaced by ``:``, followed by ``.`` and the name of the item, e.g.::

    topLevelTaskName:subtaskName:subsubtaskName.itemName

Using ``:`` in the full task name disambiguates the rare situation that a task has a subtask and a metadata item with the same name.

Definition at line 229 of file task.py.
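The naming rule can be illustrated with plain dicts. This sketch assumes the per-task metadata is already available as a mapping from full task name to that task's own items; the real method returns an `lsst.daf.base.PropertySet`, which the sketch does not use, and `flatten_metadata` is a hypothetical name.

```python
from typing import Any, Dict


def flatten_metadata(per_task: Dict[str, Dict[str, Any]]) -> Dict[str, Any]:
    """Hypothetical sketch of the getFullMetadata item-naming rule.

    ``per_task`` maps a full task name such as "top.sub" to that task's own
    metadata items; the result uses keys such as "top:sub.itemName".
    """
    flat: Dict[str, Any] = {}
    for full_name, items in per_task.items():
        prefix = full_name.replace(".", ":")  # "." -> ":" in the task name
        for item_name, value in items.items():
            flat[f"{prefix}.{item_name}"] = value
    return flat
```

For a task "top" that has both a metadata item named "sub" and a subtask named "sub", the item becomes `top.sub` while the subtask's items become `top:sub.<item>`, so the two can no longer collide.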

◆ getFullName()

[inherited]

Get the task name as a hierarchical name including parent task names.

Returns
-------
fullName : `str`
The full name consists of the name of the parent task and each subtask separated by periods. For example:

- The full name of top-level task "top" is simply "top".
- The full name of subtask "sub" of top-level task "top" is "top.sub".
- The full name of subtask "sub2" of subtask "sub" of top-level task "top" is "top.sub.sub2".

Definition at line 256 of file task.py.
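The three examples above follow from one recursive rule, sketched here with a hypothetical `NamedTask` class that knows only its own name and its parent. The sketch recomputes the name on every call; it makes no claim about how `Task` stores it.

```python
from typing import Optional


class NamedTask:
    """Hypothetical task node that knows only its own name and its parent."""

    def __init__(self, name: str, parent: Optional["NamedTask"] = None):
        self.name = name
        self.parent = parent

    def full_name(self) -> str:
        # A top-level task's full name is its own name; otherwise prepend
        # the parent's full name followed by a period.
        if self.parent is None:
            return self.name
        return f"{self.parent.full_name()}.{self.name}"
```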

◆ getName()

[inherited]

Get the name of the task.

Returns
-------
taskName : `str`
Name of the task.

See also
--------
getFullName

Definition at line 274 of file task.py.

◆ getSchemaCatalogs()

[inherited]

Get the schemas generated by this task.

Returns
-------
schemaCatalogs : `dict`
Keys are butler dataset type, values are an empty catalog (an instance of the appropriate `lsst.afw.table` Catalog type) for this task.

Notes
-----
.. warning::

   Subclasses that use schemas must override this method. The default implementation returns an empty dict.

This method may be called at any time after the Task is constructed, which means that all task schemas should be computed at construction time, *not* when data is actually processed. This reflects the philosophy that the schema should not depend on the data.

Returning catalogs rather than just schemas allows us to save, e.g., slots for SourceCatalog as well.

See also
--------
Task.getAllSchemaCatalogs

Definition at line 172 of file task.py.

◆ getTaskDict()

[inherited]

Get a dictionary of all tasks as a shallow copy.

Returns
-------
taskDict : `dict`
Dictionary mapping full task name to task object for the top-level task and all subtasks, sub-subtasks, etc.

Definition at line 288 of file task.py.

◆ makeField()

[inherited]

Make a `lsst.pex.config.ConfigurableField` for this task.

Parameters
----------
doc : `str`
Help text for the field.

Returns
-------
configurableField : `lsst.pex.config.ConfigurableField`
A `~ConfigurableField` for this task.

Examples
--------
Provides a convenient way to specify that this task is a subtask of another task.

Here is an example of use:

.. code-block:: python

    import lsst.pex.config

    class OtherTaskConfig(lsst.pex.config.Config):
        # ATaskClass is a placeholder for the Task subclass used as the subtask
        aSubtask = ATaskClass.makeField("brief description of task")

Definition at line 359 of file task.py.

◆ makeSubtask()

[inherited]

Create a subtask as a new instance and store it as the ``name`` attribute of this task.

Parameters
----------
name : `str`
Brief name of the subtask.
keyArgs
Extra keyword arguments used to construct the task. The following arguments are automatically provided and cannot be overridden:

- "config"
- "parentTask"

Notes
-----
The subtask must be defined by ``Task.config.name``, an instance of `~lsst.pex.config.ConfigurableField` or `~lsst.pex.config.RegistryField`.

Definition at line 299 of file task.py.
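The mechanism described above (the subtask comes from a field in the parent's config, and `config` and `parentTask` are always supplied) can be sketched in plain Python. Everything here is hypothetical: `FieldStub` is a stand-in for a `ConfigurableField`, `MiniTask` for a `Task`, and the sketch does not reproduce the real `lsst.pex.config` or `lsst.pipe.base` code.

```python
from types import SimpleNamespace
from typing import Any, Optional


class FieldStub:
    """Hypothetical stand-in for a ConfigurableField: a target class plus its config."""

    def __init__(self, target: type, config: Any):
        self.target = target
        self.value = config

    def apply(self, **kwargs: Any) -> Any:
        # Construct the target with this field's config plus the caller's kwargs.
        return self.target(config=self.value, **kwargs)


class MiniTask:
    """Hypothetical task that can attach subtasks declared in its config."""

    def __init__(self, config: Any = None, name: str = "top",
                 parentTask: Optional["MiniTask"] = None, **extra: Any):
        self.config = config
        self.name = name
        self.parentTask = parentTask
        self.extra = extra

    def makeSubtask(self, name: str, **keyArgs: Any) -> None:
        field = getattr(self.config, name)
        # parentTask is supplied here and config inside apply(), so passing
        # either one in keyArgs raises TypeError (duplicate keyword argument).
        subtask = field.apply(name=name, parentTask=self, **keyArgs)
        setattr(self, name, subtask)


# Usage: the parent config declares a hypothetical "detection" subtask field.
parent_config = SimpleNamespace(
    detection=FieldStub(MiniTask, SimpleNamespace(threshold=5.0)))
top = MiniTask(config=parent_config)
top.makeSubtask("detection", verbose=True)
```

After the call, `top.detection` is a new `MiniTask` whose `parentTask` is `top` and whose `config` is the object held by the field.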

◆ run()

def lsst.meas.algorithms.measureApCorr.MeasureApCorrTask.run(self, exposure, catalog)

Measure aperture correction.

Parameters
----------
exposure : `lsst.afw.image.Exposure`
Exposure on which aperture corrections are being measured. The bounding box is retrieved from it, and it is passed to the sourceSelector. The output aperture correction map is *not* added to the exposure; this is left to the caller.
catalog : `lsst.afw.table.SourceCatalog`
SourceCatalog containing measurements to be used to compute aperture corrections.

Returns
-------
Struct : `lsst.pipe.base.Struct`
Contains the following:

``apCorrMap``
Aperture correction map (`lsst.afw.image.ApCorrMap`) that contains two entries for each flux field:

- flux field (e.g. base_PsfFlux_instFlux): 2d model
- flux error field (e.g. base_PsfFlux_instFluxErr): 2d model of the error

Definition at line 136 of file measureApCorr.py.
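As a rough illustration of what "a 2d model" of a correction means, the sketch below computes a per-source correction as the ratio of a reference flux to the measured flux and fits a plane in pixel coordinates by linear least squares with NumPy. Both the ratio definition and the plane model are assumptions chosen for the example: this is not the fitting method `MeasureApCorrTask` uses, it works on plain arrays instead of `lsst.afw` objects, and it does not build the error model that the real `apCorrMap` also contains. The function names are hypothetical.

```python
import numpy as np


def fit_plane(x: np.ndarray, y: np.ndarray, values: np.ndarray) -> np.ndarray:
    """Least-squares fit of values ~ c0 + c1*x + c2*y; returns [c0, c1, c2]."""
    design = np.column_stack([np.ones_like(x), x, y])
    coeffs, *_ = np.linalg.lstsq(design, values, rcond=None)
    return coeffs


def measure_ap_corr_plane(x, y, ref_flux, inst_flux) -> np.ndarray:
    """Hypothetical: per-source correction = ref_flux / inst_flux, fit as a plane."""
    ap_corr = np.asarray(ref_flux, dtype=float) / np.asarray(inst_flux, dtype=float)
    return fit_plane(np.asarray(x, dtype=float), np.asarray(y, dtype=float), ap_corr)


def evaluate_plane(coeffs: np.ndarray, x, y) -> np.ndarray:
    """Evaluate the fitted correction model at pixel positions (x, y)."""
    return coeffs[0] + coeffs[1] * np.asarray(x) + coeffs[2] * np.asarray(y)
```

A plane keeps the example short; a real correction model would normally need more spatial freedom and some rejection of outlying sources.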

◆ timer()

[inherited]

Context manager to log performance data for an arbitrary block of code.

Parameters
----------
name : `str`
Name of code being timed; data will be logged using item name: ``Start`` and ``End``.
logLevel
A `lsst.log` level constant.

Examples
--------
Creating a timer context:

.. code-block:: python

    with self.timer("someCodeToTime"):
        pass  # code to time

See also
--------
timer.logInfo

Definition at line 327 of file task.py.
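The general shape of such a context manager can be sketched with `contextlib.contextmanager`. This is a hypothetical stand-in, not `Task.timer`: it writes wall-clock times from `time.perf_counter()` into a plain dict under the keys `{name}Start` and `{name}End`, and that key format, the dict, and the absence of logging are all assumptions of the sketch.

```python
import time
from contextlib import contextmanager
from typing import Dict, Iterator


@contextmanager
def timer(metadata: Dict[str, float], name: str) -> Iterator[None]:
    """Hypothetical sketch: record start/end times for a block of code.

    Items are stored as ``{name}Start`` and ``{name}End`` in a plain dict;
    this key format is an assumption, not the real Task.timer behaviour.
    """
    metadata[f"{name}Start"] = time.perf_counter()
    try:
        yield
    finally:
        # Record the end time even if the timed block raises.
        metadata[f"{name}End"] = time.perf_counter()
```

The `try`/`finally` keeps the end entry consistent with the start entry when the timed code fails, which is one reason to prefer a context manager over paired start/stop calls.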

Member Data Documentation

◆ config

◆ ConfigClass

[static]

Definition at line 113 of file measureApCorr.py.

◆ log

◆ metadata

◆ refFluxKeys

lsst.meas.algorithms.measureApCorr.MeasureApCorrTask.refFluxKeys

Definition at line 126 of file measureApCorr.py.

◆ toCorrect

lsst.meas.algorithms.measureApCorr.MeasureApCorrTask.toCorrect

Definition at line 127 of file measureApCorr.py.

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/0.4.3/Linux64/meas_algorithms/21.0.0-19-g4cded4ca+71a93a33c0/python/lsst/meas/algorithms/measureApCorr.py