Public Member Functions

| __init__ (self, ApdbCassandraConfig config) | |

| None | __del__ (self) |

| VersionTuple | apdbImplementationVersion (cls) |

| VersionTuple | apdbSchemaVersion (self) |

| Table|None | tableDef (self, ApdbTables table) |

| ApdbCassandraConfig | init_database (cls, list[str] hosts, str keyspace, *, str|None schema_file=None, str|None schema_name=None, int|None read_sources_months=None, int|None read_forced_sources_months=None, bool use_insert_id=False, bool use_insert_id_skips_diaobjects=False, int|None port=None, str|None username=None, str|None prefix=None, str|None part_pixelization=None, int|None part_pix_level=None, bool time_partition_tables=True, str|None time_partition_start=None, str|None time_partition_end=None, str|None read_consistency=None, str|None write_consistency=None, int|None read_timeout=None, int|None write_timeout=None, list[str]|None ra_dec_columns=None, int|None replication_factor=None, bool drop=False) |

| ApdbCassandraReplica | get_replica (self) |

| pandas.DataFrame | getDiaObjects (self, sphgeom.Region region) |

| pandas.DataFrame|None | getDiaSources (self, sphgeom.Region region, Iterable[int]|None object_ids, astropy.time.Time visit_time) |

| pandas.DataFrame|None | getDiaForcedSources (self, sphgeom.Region region, Iterable[int]|None object_ids, astropy.time.Time visit_time) |

| bool | containsVisitDetector (self, int visit, int detector) |

| pandas.DataFrame | getSSObjects (self) |

| None | store (self, astropy.time.Time visit_time, pandas.DataFrame objects, pandas.DataFrame|None sources=None, pandas.DataFrame|None forced_sources=None) |

| None | storeSSObjects (self, pandas.DataFrame objects) |

| None | reassignDiaSources (self, Mapping[int, int] idMap) |

| None | dailyJob (self) |

| int | countUnassociatedObjects (self) |

| ApdbMetadata | metadata (self) |

Public Attributes

| config | |

| metadataSchemaVersionKey | |

| metadataCodeVersionKey | |

| metadataReplicaVersionKey | |

| metadataConfigKey | |

Static Public Attributes

| str | metadataSchemaVersionKey = "version:schema" |

| str | metadataCodeVersionKey = "version:ApdbCassandra" |

| str | metadataReplicaVersionKey = "version:ApdbCassandraReplica" |

| str | metadataConfigKey = "config:apdb-cassandra.json" |

| partition_zero_epoch = astropy.time.Time(0, format="unix_tai") | |

Protected Member Functions

| Timer | _timer (self, str name, *, Mapping[str, str|int]|None tags=None) |

| tuple[Cluster, Session] | _make_session (cls, ApdbCassandraConfig config) |

| AuthProvider|None | _make_auth_provider (cls, ApdbCassandraConfig config) |

| None | _versionCheck (self, ApdbMetadataCassandra metadata) |

| None | _makeSchema (cls, ApdbConfig config, *, bool drop=False, int|None replication_factor=None) |

| Mapping[Any, ExecutionProfile] | _makeProfiles (cls, ApdbCassandraConfig config) |

| pandas.DataFrame | _getSources (self, sphgeom.Region region, Iterable[int]|None object_ids, float mjd_start, float mjd_end, ApdbTables table_name) |

| None | _storeReplicaChunk (self, ReplicaChunk replica_chunk, astropy.time.Time visit_time) |

| None | _storeDiaObjects (self, pandas.DataFrame objs, astropy.time.Time visit_time, ReplicaChunk|None replica_chunk) |

| None | _storeDiaSources (self, ApdbTables table_name, pandas.DataFrame sources, astropy.time.Time visit_time, ReplicaChunk|None replica_chunk) |

| None | _storeDiaSourcesPartitions (self, pandas.DataFrame sources, astropy.time.Time visit_time, ReplicaChunk|None replica_chunk) |

| None | _storeObjectsPandas (self, pandas.DataFrame records, ApdbTables|ExtraTables table_name, Mapping|None extra_columns=None, int|None time_part=None) |

| pandas.DataFrame | _add_obj_part (self, pandas.DataFrame df) |

| pandas.DataFrame | _add_src_part (self, pandas.DataFrame sources, pandas.DataFrame objs) |

| pandas.DataFrame | _add_fsrc_part (self, pandas.DataFrame sources, pandas.DataFrame objs) |

| int | _time_partition_cls (cls, float|astropy.time.Time time, float epoch_mjd, int part_days) |

| int | _time_partition (self, float|astropy.time.Time time) |

| pandas.DataFrame | _make_empty_catalog (self, ApdbTables table_name) |

| Iterator[tuple[cassandra.query.Statement, tuple]] | _combine_where (self, str prefix, list[tuple[str, tuple]] where1, list[tuple[str, tuple]] where2, str|None suffix=None) |

| list[tuple[str, tuple]] | _spatial_where (self, sphgeom.Region|None region, bool use_ranges=False) |

| tuple[list[str], list[tuple[str, tuple]]] | _temporal_where (self, ApdbTables table, float|astropy.time.Time start_time, float|astropy.time.Time end_time, bool|None query_per_time_part=None) |

Protected Attributes

| _keyspace | |

| _cluster | |

| _session | |

| _metadata | |

| _pixelization | |

| _schema | |

| _partition_zero_epoch_mjd | |

| _preparer | |

| _timer_args | |

Static Protected Attributes

| tuple | _frozen_parameters |

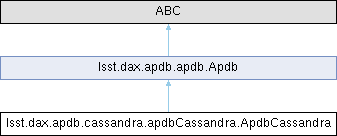

Detailed Description

Implementation of APDB database on top of Apache Cassandra.

The implementation is configured via the standard ``pex_config`` mechanism
using the `ApdbCassandraConfig` configuration class. For examples of
different configurations, see the config/ folder.

Parameters
----------
config : `ApdbCassandraConfig`
    Configuration object.

Definition at line 253 of file apdbCassandra.py.

Constructor & Destructor Documentation

◆ __init__()

lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.__init__(self, ApdbCassandraConfig config)

Definition at line 292 of file apdbCassandra.py.

◆ __del__()

None lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.__del__(self)

Definition at line 346 of file apdbCassandra.py.

Member Function Documentation

◆ _add_fsrc_part()

protected

Add apdb_part column to DiaForcedSource catalog.

Notes
-----
This method copies the apdb_part value from a matching DiaObject record.
The DiaObject catalog needs to have an apdb_part column filled by the
``_add_obj_part`` method, and DiaForcedSource records need to be
associated to DiaObjects via the ``diaObjectId`` column.

This overrides any existing column in a DataFrame with the same name
(apdb_part). The original DataFrame is not changed; a copy of the
DataFrame is returned.

Definition at line 1270 of file apdbCassandra.py.

◆ _add_obj_part()

protected

Calculate the spatial partition for each record and add it to a DataFrame.

Notes
-----
This overrides any existing column in a DataFrame with the same name
(apdb_part). The original DataFrame is not changed; a copy of the
DataFrame is returned.

Definition at line 1214 of file apdbCassandra.py.
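The copy-and-annotate behavior described for ``_add_obj_part`` can be sketched in plain Python. This is a minimal illustration, not the class's code: lists of dicts stand in for a `pandas.DataFrame`, the ``ra``/``dec`` key names are assumptions (the real names come from ``ra_dec_columns``), and `toy_pixel_index` is a hypothetical equal-angle RA/Dec grid used in place of the configured MOC pixelization (``part_pixelization`` at ``part_pix_level``).

```python
import copy


def toy_pixel_index(ra: float, dec: float, level: int) -> int:
    """Hypothetical stand-in for a MOC pixelization: a 2**level x 2**level RA/Dec grid."""
    n = 2**level
    ra_bin = min(int((ra % 360.0) / 360.0 * n), n - 1)
    dec_bin = min(int((dec + 90.0) / 180.0 * n), n - 1)
    return dec_bin * n + ra_bin


def add_obj_part(records: list[dict], level: int = 4) -> list[dict]:
    """Return a copy of ``records`` with an ``apdb_part`` value in every row."""
    result = copy.deepcopy(records)  # the caller's records are left unchanged
    for row in result:
        # Any existing apdb_part value is overwritten, as documented above.
        row["apdb_part"] = toy_pixel_index(row["ra"], row["dec"], level)
    return result
```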

◆ _add_src_part()

protected

Add apdb_part column to DiaSource catalog.

Notes
-----
This method copies the apdb_part value from a matching DiaObject record.
The DiaObject catalog needs to have an apdb_part column filled by the
``_add_obj_part`` method, and DiaSource records need to be associated to
DiaObjects via the ``diaObjectId`` column.

This overrides any existing column in a DataFrame with the same name
(apdb_part). The original DataFrame is not changed; a copy of the
DataFrame is returned.

Definition at line 1235 of file apdbCassandra.py.
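The join that ``_add_src_part`` and ``_add_fsrc_part`` describe, where each source row takes ``apdb_part`` from the DiaObject with the same ``diaObjectId``, can be sketched as follows. Plain dicts stand in for DataFrames (with pandas this would typically be a merge on ``diaObjectId``); `add_src_part` is a hypothetical name, and raising `KeyError` for a source with no matching object is an assumption of this sketch.

```python
def add_src_part(sources: list[dict], objs: list[dict]) -> list[dict]:
    """Return copies of ``sources`` with ``apdb_part`` taken from matching objects."""
    # diaObjectId -> apdb_part; objs must already carry apdb_part
    # (filled by _add_obj_part in the real class).
    part_by_id = {obj["diaObjectId"]: obj["apdb_part"] for obj in objs}
    result = []
    for src in sources:
        row = dict(src)  # shallow copy so the caller's rows are not modified
        # Raises KeyError if the source's diaObjectId is not among objs.
        row["apdb_part"] = part_by_id[src["diaObjectId"]]
        result.append(row)
    return result
```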

◆ _combine_where()

protected

Make a cartesian product of two parts of a WHERE clause into a series
of statements to execute.

Parameters
----------
prefix : `str`
    Initial statement prefix that comes before the WHERE clause, e.g.
    "SELECT * from Table".

Definition at line 1364 of file apdbCassandra.py.
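A string-based sketch of the cartesian product described for ``_combine_where``. The real method yields `cassandra.query.Statement` objects; this hypothetical `combine_where` yields plain query strings with their parameter tuples instead. Treating an empty ``where1`` or ``where2`` list as "no condition" (rather than "no queries") is an assumption of the sketch, and the table and column names in the tests are illustrative.

```python
from __future__ import annotations

import itertools
from collections.abc import Iterator

Where = list[tuple[str, tuple]]


def combine_where(
    prefix: str, where1: Where, where2: Where, suffix: str | None = None
) -> Iterator[tuple[str, tuple]]:
    """Yield (query, params) for every pairing of a where1 term with a where2 term."""
    # An empty list contributes no condition (assumption of this sketch).
    terms1 = where1 or [("", ())]
    terms2 = where2 or [("", ())]
    for (expr1, params1), (expr2, params2) in itertools.product(terms1, terms2):
        conditions = [expr for expr in (expr1, expr2) if expr]
        query = prefix
        if conditions:
            query += " WHERE " + " AND ".join(conditions)
        if suffix:
            query += " " + suffix
        # Parameters are concatenated in the same order as the conditions.
        yield query, params1 + params2
```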

◆ _getSources()

protected

Return a catalog of DiaSource instances given a set of DiaObject IDs.

Parameters
----------
region : `lsst.sphgeom.Region`
    Spherical region.
object_ids : iterable [ `int` ] or `None`
    Collection of DiaObject IDs.
mjd_start : `float`
    Lower bound of the time interval.
mjd_end : `float`
    Upper bound of the time interval.
table_name : `ApdbTables`
    Name of the table.

Returns
-------
catalog : `pandas.DataFrame`
    Catalog containing DiaSource records. An empty catalog is returned if
    ``object_ids`` is empty.

Definition at line 938 of file apdbCassandra.py.

◆ _make_auth_provider()

protected

Make Cassandra authentication provider instance.

Definition at line 376 of file apdbCassandra.py.

◆ _make_empty_catalog()

protected

Make an empty catalog for a table with a given name.

Parameters
----------
table_name : `ApdbTables`
    Name of the table.

Returns
-------
catalog : `pandas.DataFrame`
    An empty catalog.

Definition at line 1343 of file apdbCassandra.py.

◆ _make_session()

protected

Make Cassandra session.

Definition at line 355 of file apdbCassandra.py.

◆ _makeProfiles()

protected

Make all execution profiles used in the code.

Definition at line 879 of file apdbCassandra.py.

◆ _makeSchema()

protected

Definition at line 604 of file apdbCassandra.py.

◆ _spatial_where()

protected

Generate expressions for the spatial part of a WHERE clause.

Parameters
----------
region : `sphgeom.Region` or `None`
    Spatial region for query results.
use_ranges : `bool`
    If `True`, use pixel ranges ("apdb_part >= p1 AND apdb_part <= p2")
    instead of an exact list of pixels. Should be set to `True` for
    large regions covering very many pixels.

Returns
-------
expressions : `list` [ `tuple` ]
    An empty list is returned if ``region`` is `None`; otherwise a list
    of one or more (expression, parameters) tuples.

Definition at line 1408 of file apdbCassandra.py.
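A minimal sketch of the two expression styles described for ``_spatial_where``. The hypothetical ``pixel_ranges`` argument stands in for the pixel ranges a pixelization returns for a region and is assumed to hold half-open ``[begin, end)`` ranges, as `lsst.sphgeom.RangeSet` does; passing `None` plays the role of ``region=None``. Emitting one equality per pixel in the exact-list case is a choice of this sketch (a single ``IN`` list would be an alternative).

```python
from __future__ import annotations


def spatial_where(
    pixel_ranges: list[tuple[int, int]] | None, use_ranges: bool = False
) -> list[tuple[str, tuple]]:
    """Build (expression, parameters) pairs constraining the apdb_part column."""
    if pixel_ranges is None:
        return []  # no region: no spatial constraint
    if use_ranges:
        expressions = []
        for begin, end in pixel_ranges:
            last = end - 1  # half-open [begin, end) -> inclusive [begin, last]
            if begin == last:
                expressions.append(('"apdb_part" = ?', (begin,)))
            else:
                expressions.append(('"apdb_part" >= ? AND "apdb_part" <= ?', (begin, last)))
        return expressions
    # Exact pixel list: expand every range into individual pixel indices.
    pixels = [pix for begin, end in pixel_ranges for pix in range(begin, end)]
    return [('"apdb_part" = ?', (pix,)) for pix in pixels]
```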

◆ _storeDiaObjects()

protected

Store catalog of DiaObjects from current visit.

Parameters
----------
objs : `pandas.DataFrame`
    Catalog with DiaObject records.
visit_time : `astropy.time.Time`
    Time of the current visit.
replica_chunk : `ReplicaChunk` or `None`
    Replica chunk identifier if replication is configured.

Definition at line 1030 of file apdbCassandra.py.

◆ _storeDiaSources()

protected

Store catalog of DiaSources or DiaForcedSources from current visit.

Parameters
----------
table_name : `ApdbTables`
    Table in which to store the data.
sources : `pandas.DataFrame`
    Catalog containing DiaSource or DiaForcedSource records.
visit_time : `astropy.time.Time`
    Time of the current visit.
replica_chunk : `ReplicaChunk` or `None`
    Replica chunk identifier if replication is configured.

Definition at line 1069 of file apdbCassandra.py.

◆ _storeDiaSourcesPartitions()

protected

Store mapping of diaSourceId to its partitioning values.

Parameters
----------
sources : `pandas.DataFrame`
    Catalog containing DiaSource records.
visit_time : `astropy.time.Time`
    Time of the current visit.

Definition at line 1105 of file apdbCassandra.py.

◆ _storeObjectsPandas()

protected

Store generic objects.

Takes a Pandas catalog and stores its records in a table.

Parameters
----------
records : `pandas.DataFrame`
    Catalog containing object records.
table_name : `ApdbTables` or `ExtraTables`
    Name of the table as defined in APDB schema.
extra_columns : `dict`, optional
    Mapping (column_name, column_value) which gives fixed values for
    columns in each row; overrides values in ``records`` if matching
    columns exist there.
time_part : `int`, optional
    If not `None`, insert into a per-partition table.

Notes
-----
If the Pandas catalog contains additional columns not defined in the
table schema, they are ignored. The catalog does not have to contain all
columns defined in a table, but partition and clustering keys must be
present in the catalog or in ``extra_columns``.

Definition at line 1127 of file apdbCassandra.py.
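The column rules in the Notes above can be sketched as a function that builds one INSERT statement plus a parameter tuple per record. Everything here is hypothetical: `build_insert` is not part of the class, ``table_columns`` and ``key_columns`` stand in for the table schema, plain dicts stand in for a DataFrame, and sending `None` for a column absent from a given record is an assumption. The ``?`` placeholders follow the prepared-statement syntax used by the Cassandra Python driver.

```python
from __future__ import annotations

from collections.abc import Mapping


def build_insert(
    records: list[dict],
    table: str,
    table_columns: list[str],
    key_columns: list[str],
    extra_columns: Mapping | None = None,
) -> tuple[str, list[tuple]]:
    """Return an INSERT statement and one parameter tuple per record."""
    extra = dict(extra_columns or {})
    present = set().union(*(row.keys() for row in records))
    # Keep only columns the table defines; other record columns are ignored.
    columns = [c for c in table_columns if c in present or c in extra]
    missing = [k for k in key_columns if k not in columns]
    if missing:
        raise ValueError(f"missing partition/clustering key columns: {missing}")
    names = ", ".join(f'"{c}"' for c in columns)
    placeholders = ", ".join("?" for _ in columns)
    query = f'INSERT INTO "{table}" ({names}) VALUES ({placeholders})'
    # extra_columns values override same-named values in the records.
    rows = [tuple(extra[c] if c in extra else row.get(c) for c in columns) for row in records]
    return query, rows
```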

◆ _storeReplicaChunk()

protected

Definition at line 1009 of file apdbCassandra.py.

◆ _temporal_where()

protected

Generate table names and expressions for the temporal part of WHERE
clauses.

Parameters
----------
table : `ApdbTables`
    Table to select from.
start_time : `astropy.time.Time` or `float`
    Start of the time range, as a time or an MJD value.
end_time : `astropy.time.Time` or `float`
    End of the time range, as a time or an MJD value.
query_per_time_part : `bool`, optional
    If `None`, use ``query_per_time_part`` from the configuration.

Returns
-------
tables : `list` [ `str` ]
    List of the table names to query.
expressions : `list` [ `tuple` ]
    A list of zero or more (expression, parameters) tuples.

Definition at line 1448 of file apdbCassandra.py.
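A sketch of how a time range could become table names and expressions. In this sketch, with per-partition tables the time constraint is expressed purely by choosing which tables to query, so no expression is returned; with a single table, a time-partition column is filtered either with one expression per partition (mirroring ``query_per_time_part``) or with one ``IN`` list. The function name, the ``<table>_<partition>`` naming, the ``apdb_time_part`` column name, and the floor-based partition arithmetic are all assumptions, not the class's confirmed behavior.

```python
from __future__ import annotations

import math


def temporal_where(
    table: str,
    start_mjd: float,
    end_mjd: float,
    epoch_mjd: float,
    part_days: int,
    time_partition_tables: bool,
    query_per_time_part: bool = False,
) -> tuple[list[str], list[tuple[str, tuple]]]:
    """Return table names and time-partition expressions covering [start, end]."""
    first = math.floor((start_mjd - epoch_mjd) / part_days)
    last = math.floor((end_mjd - epoch_mjd) / part_days)
    parts = list(range(first, last + 1))
    if time_partition_tables:
        # One physical table per partition (hypothetical naming convention).
        return [f"{table}_{p}" for p in parts], []
    if query_per_time_part:
        # One expression, hence one query, per time partition.
        return [table], [('"apdb_time_part" = ?', (p,)) for p in parts]
    placeholders = ", ".join("?" for _ in parts)
    return [table], [(f'"apdb_time_part" IN ({placeholders})', tuple(parts))]
```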

◆ _time_partition()

protected

Calculate time partition number for a given time.

Parameters
----------
time : `float` or `astropy.time.Time`
    Time for which to calculate the partition number, either as a `float`
    MJD value or as `astropy.time.Time`.

Returns
-------
partition : `int`
    Partition number for a given time.

Definition at line 1321 of file apdbCassandra.py.

◆ _time_partition_cls()

protected

Calculate time partition number for a given time.

Parameters
----------
time : `float` or `astropy.time.Time`
    Time for which to calculate the partition number, either as a `float`
    MJD value or as `astropy.time.Time`.
epoch_mjd : `float`
    Epoch time (MJD) for partition 0.
part_days : `int`
    Number of days per partition.

Returns
-------
partition : `int`
    Partition number for a given time.

Definition at line 1295 of file apdbCassandra.py.
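The partition number is the count of whole ``part_days`` periods elapsed since ``epoch_mjd``. A minimal sketch, assuming floor rounding: for the class, the epoch comes from ``partition_zero_epoch`` (Unix TAI time 0, approximately MJD 40587), so with 30-day partitions MJD 60000 would fall in partition floor(19413 / 30) = 647. The function name is hypothetical, and duck typing on an ``mjd`` attribute replaces an explicit `astropy.time.Time` check so the sketch needs no astropy.

```python
import math


def time_partition(time, epoch_mjd: float, part_days: int) -> int:
    """Return the number of whole ``part_days`` periods between the epoch and ``time``."""
    # Accept a float MJD or any object with an ``mjd`` attribute,
    # such as astropy.time.Time.
    mjd = float(time.mjd) if hasattr(time, "mjd") else float(time)
    return math.floor((mjd - epoch_mjd) / part_days)
```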

◆ _timer()

protected

Create `Timer` instance given its name.

Definition at line 350 of file apdbCassandra.py.

◆ _versionCheck()

protected

Check schema version compatibility.

Definition at line 408 of file apdbCassandra.py.

◆ apdbImplementationVersion()

VersionTuple lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.apdbImplementationVersion(cls)

Return version number for current APDB implementation.

Returns
-------
version : `VersionTuple`
    Version of the code defined in implementation class.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 451 of file apdbCassandra.py.

◆ apdbSchemaVersion()

VersionTuple lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.apdbSchemaVersion(self)

Return schema version number as defined in config file.

Returns
-------
version : `VersionTuple`
    Version of the schema defined in schema config file.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 455 of file apdbCassandra.py.

◆ containsVisitDetector()

bool lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.containsVisitDetector(self, int visit, int detector)

Test whether data for a given visit-detector is present in the APDB.

Parameters
----------
visit, detector : `int`
    The ID of the visit-detector to search for.

Returns
-------
present : `bool`
    `True` if some DiaObject, DiaSource, or DiaForcedSource records
    exist for the specified observation, `False` otherwise.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 724 of file apdbCassandra.py.

◆ countUnassociatedObjects()

int lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.countUnassociatedObjects(self)

Return the number of DiaObjects that have only one DiaSource
associated with them.

Used as part of ap_verify metrics.

Returns
-------
count : `int`
    Number of DiaObjects with exactly one associated DiaSource.

Notes
-----
This method can be very inefficient or slow in some implementations.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 865 of file apdbCassandra.py.
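The quantity being counted can be stated precisely with a few lines of Python. This sketch illustrates the definition only and says nothing about how (or whether) the Cassandra backend computes it; it needs the ``diaObjectId`` of every DiaObject and of every DiaSource, which suggests why the method can be slow. The function name is hypothetical.

```python
from collections import Counter
from collections.abc import Iterable


def count_unassociated_objects(object_ids: Iterable[int], source_object_ids: Iterable[int]) -> int:
    """Count DiaObjects that have exactly one associated DiaSource."""
    per_object = Counter(source_object_ids)  # diaObjectId -> number of DiaSources
    return sum(1 for oid in set(object_ids) if per_object[oid] == 1)
```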

◆ dailyJob()

None lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.dailyJob(self)

Implement daily activities like cleanup/vacuum. What should be done
during daily activities is determined by the specific implementation.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 861 of file apdbCassandra.py.

◆ get_replica()

ApdbCassandraReplica lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.get_replica(self)

Return `ApdbReplica` instance for this database.

Definition at line 597 of file apdbCassandra.py.

◆ getDiaForcedSources()

pandas.DataFrame | None lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.getDiaForcedSources(
    self,
    sphgeom.Region region,
    Iterable[int] | None object_ids,
    astropy.time.Time visit_time)

Return catalog of DiaForcedSource instances from a given region.

Parameters
----------
region : `lsst.sphgeom.Region`
    Region to search for DiaForcedSources.
object_ids : iterable [ `int` ], optional
    List of DiaObject IDs to further constrain the set of returned
    sources. If the list is empty, an empty catalog is returned with the
    correct schema. If `None`, returned sources are not constrained.
    Some implementations may not support the latter case.
visit_time : `astropy.time.Time`
    Time of the current visit.

Returns
-------
catalog : `pandas.DataFrame`, or `None`
    Catalog containing DiaForcedSource records. `None` is returned if
    the ``read_forced_sources_months`` configuration parameter is set to 0.

Raises
------
NotImplementedError
    May be raised by some implementations if ``object_ids`` is `None`.

Notes
-----
This method returns a DiaForcedSource catalog for a region with
additional filtering based on DiaObject IDs. Only a subset of the
DiaForcedSource history is returned, limited by the
``read_forced_sources_months`` config parameter, w.r.t. ``visit_time``.
If ``object_ids`` is empty, an empty catalog is always returned with the
correct schema (columns/types). If ``object_ids`` is `None`, no filtering
is performed and some of the returned records may be outside the
specified region.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 712 of file apdbCassandra.py.

◆ getDiaObjects()

pandas.DataFrame lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.getDiaObjects(self, sphgeom.Region region)

Return catalog of DiaObject instances from a given region.

This method returns only the last version of each DiaObject. Some
records in a returned catalog may be outside the specified region; it
is up to the client to ignore those records or clean up the catalog
before further use.

Parameters
----------
region : `lsst.sphgeom.Region`
    Region to search for DiaObjects.

Returns
-------
catalog : `pandas.DataFrame`
    Catalog containing DiaObject records for a region that may be a
    superset of the specified region.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 660 of file apdbCassandra.py.

◆ getDiaSources()

pandas.DataFrame | None lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.getDiaSources(
    self,
    sphgeom.Region region,
    Iterable[int] | None object_ids,
    astropy.time.Time visit_time)

Return catalog of DiaSource instances from a given region.

Parameters
----------
region : `lsst.sphgeom.Region`
    Region to search for DiaSources.
object_ids : iterable [ `int` ], optional
    List of DiaObject IDs to further constrain the set of returned
    sources. If `None`, returned sources are not constrained. If the
    list is empty, an empty catalog is returned with the correct schema.
visit_time : `astropy.time.Time`
    Time of the current visit.

Returns
-------
catalog : `pandas.DataFrame`, or `None`
    Catalog containing DiaSource records. `None` is returned if the
    ``read_sources_months`` configuration parameter is set to 0.

Notes
-----
This method returns a DiaSource catalog for a region with additional
filtering based on DiaObject IDs. Only a subset of the DiaSource history
is returned, limited by the ``read_sources_months`` config parameter,
w.r.t. ``visit_time``. If ``object_ids`` is empty, an empty catalog is
always returned with the correct schema (columns/types). If
``object_ids`` is `None`, no filtering is performed and some of the
returned records may be outside the specified region.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 700 of file apdbCassandra.py.
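The history window and the ``object_ids`` rules from the Notes above can be sketched as two small helpers. Both names are hypothetical, lists of dicts stand in for the returned DataFrame, and approximating a month as 30 days when converting ``read_sources_months`` to an MJD range is an assumption of this sketch.

```python
from __future__ import annotations

from collections.abc import Iterable


def source_time_window(visit_mjd: float, months: int) -> tuple[float, float] | None:
    """Return (mjd_start, mjd_end) of the history to read, or None if disabled."""
    if months == 0:
        return None  # mirrors "None is returned if read_sources_months is 0"
    # Assumption: one month is approximated as 30 days.
    return visit_mjd - months * 30, visit_mjd


def filter_by_objects(rows: list[dict], object_ids: Iterable[int] | None) -> list[dict]:
    """None means no filtering; an empty collection yields no rows."""
    if object_ids is None:
        return rows
    wanted = set(object_ids)
    return [row for row in rows if row["diaObjectId"] in wanted]
```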

◆ getSSObjects()

pandas.DataFrame lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.getSSObjects(self)

Return catalog of SSObject instances.

Returns
-------
catalog : `pandas.DataFrame`
    Catalog containing SSObject records, all existing records are
    returned.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 728 of file apdbCassandra.py.

◆ init_database()

ApdbCassandraConfig lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.init_database(
    cls,
    list[str] hosts,
    str keyspace,
    *,
    str | None schema_file = None,
    str | None schema_name = None,
    int | None read_sources_months = None,
    int | None read_forced_sources_months = None,
    bool use_insert_id = False,
    bool use_insert_id_skips_diaobjects = False,
    int | None port = None,
    str | None username = None,
    str | None prefix = None,
    str | None part_pixelization = None,
    int | None part_pix_level = None,
    bool time_partition_tables = True,
    str | None time_partition_start = None,
    str | None time_partition_end = None,
    str | None read_consistency = None,
    str | None write_consistency = None,
    int | None read_timeout = None,
    int | None write_timeout = None,
    list[str] | None ra_dec_columns = None,
    int | None replication_factor = None,
    bool drop = False)

Initialize a new APDB instance and make a configuration object for it.

Parameters
----------
hosts : `list` [`str`]
    List of host names or IP addresses for the Cassandra cluster.
keyspace : `str`
    Name of the keyspace for APDB tables.
schema_file : `str`, optional
    Location of (YAML) configuration file with APDB schema. If not
    specified, the default location will be used.
schema_name : `str`, optional
    Name of the schema in the YAML configuration file. If not specified,
    the default name will be used.
read_sources_months : `int`, optional
    Number of months of history to read from DiaSource.
read_forced_sources_months : `int`, optional
    Number of months of history to read from DiaForcedSource.
use_insert_id : `bool`, optional
    If `True`, make additional tables used for replication to PPDB.
use_insert_id_skips_diaobjects : `bool`, optional
    If `True`, do not fill the regular ``DiaObject`` table when
    ``use_insert_id`` is `True`.
port : `int`, optional
    Port number to use for Cassandra connections.
username : `str`, optional
    User name for Cassandra connections.
prefix : `str`, optional
    Optional prefix for all table names.
part_pixelization : `str`, optional
    Name of the MOC pixelization used for partitioning.
part_pix_level : `int`, optional
    Pixelization level.
time_partition_tables : `bool`, optional
    Create per-partition tables.
time_partition_start : `str`, optional
    Starting time for per-partition tables, in yyyy-mm-ddThh:mm:ss
    format, in TAI.
time_partition_end : `str`, optional
    Ending time for per-partition tables, in yyyy-mm-ddThh:mm:ss
    format, in TAI.
read_consistency : `str`, optional
    Name of the consistency level for read operations.
write_consistency : `str`, optional
    Name of the consistency level for write operations.
read_timeout : `int`, optional
    Read timeout in seconds.
write_timeout : `int`, optional
    Write timeout in seconds.
ra_dec_columns : `list` [`str`], optional
    Names of ra/dec columns in DiaObject table.
replication_factor : `int`, optional
    Replication factor used when creating a new keyspace; if the keyspace
    already exists, its replication factor is not changed.
drop : `bool`, optional
    If `True`, drop existing tables before re-creating the schema.

Returns
-------
config : `ApdbCassandraConfig`
    Resulting configuration object for a created APDB instance.

Definition at line 464 of file apdbCassandra.py.
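To see what ``time_partition_start`` and ``time_partition_end`` control, the sketch below converts two strings in the documented yyyy-mm-ddThh:mm:ss format to MJD and lists the partition numbers whose per-partition tables would cover that span. The helper names, the 30-day partition length, the round epoch of MJD 40587.0, and the floor rounding are assumptions for illustration. Both the strings and the MJD origin are treated in one scale (TAI), so no leap-second correction is applied; this is not a description of how `init_database` parses the values.

```python
import math
from datetime import datetime

# MJD 0 is 1858-11-17T00:00:00; inputs and origin share one (TAI) scale.
MJD_ORIGIN = datetime(1858, 11, 17)


def iso_to_mjd(value: str) -> float:
    """Convert a 'yyyy-mm-ddThh:mm:ss' string to MJD in the same time scale."""
    dt = datetime.strptime(value, "%Y-%m-%dT%H:%M:%S")
    return (dt - MJD_ORIGIN).total_seconds() / 86400.0


def partition_tables_range(start: str, end: str, epoch_mjd: float, part_days: int) -> range:
    """Partition numbers whose per-partition tables would cover [start, end]."""
    first = math.floor((iso_to_mjd(start) - epoch_mjd) / part_days)
    last = math.floor((iso_to_mjd(end) - epoch_mjd) / part_days)
    return range(first, last + 1)
```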

◆ metadata()

ApdbMetadata lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.metadata(self)

Object controlling access to APDB metadata (`ApdbMetadata`).

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 872 of file apdbCassandra.py.

◆ reassignDiaSources()

None lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.reassignDiaSources(self, Mapping[int, int] idMap)

Associate DiaSources with SSObjects, dis-associating them
from DiaObjects.

Parameters
----------
idMap : `Mapping`
    Maps DiaSource IDs to their new SSObject IDs.

Raises
------
ValueError
    Raised if DiaSource ID does not exist in the database.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 773 of file apdbCassandra.py.
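An in-memory model of the contract documented for ``reassignDiaSources``: each listed DiaSource gets its new SSObject ID and loses its DiaObject association, and an unknown DiaSource ID raises `ValueError`. The dict-of-records table, the ``ssObjectId`` key, marking dis-association by setting ``diaObjectId`` to `None`, and validating every ID before changing anything are all choices of this hypothetical sketch; the Cassandra implementation may behave differently.

```python
from collections.abc import Mapping


def reassign_dia_sources(sources: dict, id_map: Mapping[int, int]) -> None:
    """Move DiaSources from DiaObjects to SSObjects, modifying ``sources`` in place.

    ``sources`` maps diaSourceId -> record and stands in for the DiaSource table.
    """
    # Validate all IDs first so that a bad ID leaves the data untouched.
    unknown = [src_id for src_id in id_map if src_id not in sources]
    if unknown:
        raise ValueError(f"DiaSource IDs not found: {unknown}")
    for src_id, ss_id in id_map.items():
        record = sources[src_id]
        record["ssObjectId"] = ss_id
        record["diaObjectId"] = None  # dis-associate from the DiaObject
```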

◆ store()

None lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.store(
    self,
    astropy.time.Time visit_time,
    pandas.DataFrame objects,
    pandas.DataFrame | None sources = None,
    pandas.DataFrame | None forced_sources = None)

Store all three types of catalogs in the database.

Parameters
----------
visit_time : `astropy.time.Time`
    Time of the visit.
objects : `pandas.DataFrame`
    Catalog with DiaObject records.
sources : `pandas.DataFrame`, optional
    Catalog with DiaSource records.
forced_sources : `pandas.DataFrame`, optional
    Catalog with DiaForcedSource records.

Notes
-----
This method takes DataFrame catalogs; their schema must be compatible
with the schema of the APDB table:

- column names must correspond to database table columns
- types and units of the columns must match database definitions;
  no unit conversion is performed presently
- columns that have default values in the database schema can be
  omitted from the catalog
- this method knows how to fill interval-related columns of DiaObject
  (validityStart, validityEnd); they do not need to appear in a
  catalog
- source catalogs have a ``diaObjectId`` column associating sources
  with objects

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 741 of file apdbCassandra.py.
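Because the source catalogs are tied to objects through ``diaObjectId`` (and, per the ``_add_src_part``/``_add_fsrc_part`` notes, take their partition from the matching DiaObject), a caller might check inputs before calling ``store``. The pre-flight check below is hypothetical and not part of the API; lists of dicts stand in for DataFrames, and requiring every source to reference an object in the same call is an assumption drawn from those notes.

```python
from __future__ import annotations


def check_store_inputs(
    objects: list[dict],
    sources: list[dict] | None = None,
    forced_sources: list[dict] | None = None,
) -> None:
    """Raise ValueError if a source row lacks, or has an unknown, diaObjectId."""
    known = {obj["diaObjectId"] for obj in objects}
    for name, catalog in (("sources", sources), ("forced_sources", forced_sources)):
        for row in catalog or []:  # a None catalog is simply skipped
            if "diaObjectId" not in row:
                raise ValueError(f"{name}: row without diaObjectId column")
            if row["diaObjectId"] not in known:
                raise ValueError(f"{name}: unknown diaObjectId {row['diaObjectId']}")
```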

◆ storeSSObjects()

None lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.storeSSObjects(self, pandas.DataFrame objects)

Store or update SSObject catalog.

Parameters
----------
objects : `pandas.DataFrame`
    Catalog with SSObject records.

Notes
-----
If SSObjects with matching IDs already exist in the database, their
records will be updated with the information from provided records.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 769 of file apdbCassandra.py.
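The update-or-insert semantics in the Notes resemble how Cassandra treats `INSERT`: writing a row whose primary key already exists overwrites the columns supplied and leaves other columns in place. Whether ``storeSSObjects`` relies on exactly that is not stated here; this hypothetical sketch only models the documented behavior with a dict keyed by an assumed ``ssObjectId`` column.

```python
def upsert_ssobjects(table: dict, objects: list[dict]) -> None:
    """Insert new SSObject records or update existing ones, keyed by ssObjectId."""
    for obj in objects:
        stored = table.setdefault(obj["ssObjectId"], {})
        stored.update(obj)  # supplied columns replace stored values; others are kept
```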

◆ tableDef()

Table | None lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.tableDef(self, ApdbTables table)

Return table schema definition for a given table.

Parameters
----------
table : `ApdbTables`
    One of the known APDB tables.

Returns
-------
tableSchema : `.schema_model.Table` or `None`
    Table schema description; `None` is returned if the table is not
    defined by this implementation.

Reimplemented from lsst.dax.apdb.apdb.Apdb.

Definition at line 459 of file apdbCassandra.py.

Member Data Documentation

◆ _cluster

protected

Definition at line 298 of file apdbCassandra.py.

◆ _frozen_parameters

static, protected

Definition at line 278 of file apdbCassandra.py.

◆ _keyspace

protected

Definition at line 296 of file apdbCassandra.py.

◆ _metadata

protected

Definition at line 301 of file apdbCassandra.py.

◆ _partition_zero_epoch_mjd

protected

Definition at line 330 of file apdbCassandra.py.

◆ _pixelization

protected

Definition at line 315 of file apdbCassandra.py.

◆ _preparer

protected

Definition at line 336 of file apdbCassandra.py.

◆ _schema

protected

Definition at line 321 of file apdbCassandra.py.

◆ _session

protected

Definition at line 298 of file apdbCassandra.py.

◆ _timer_args

protected

Definition at line 352 of file apdbCassandra.py.

◆ config

lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.config

Definition at line 310 of file apdbCassandra.py.

◆ metadataCodeVersionKey [1/2]

static

Definition at line 269 of file apdbCassandra.py.

◆ metadataCodeVersionKey [2/2]

lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.metadataCodeVersionKey

Definition at line 644 of file apdbCassandra.py.

◆ metadataConfigKey [1/2]

static

Definition at line 275 of file apdbCassandra.py.

◆ metadataConfigKey [2/2]

lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.metadataConfigKey

Definition at line 656 of file apdbCassandra.py.

◆ metadataReplicaVersionKey [1/2]

static

Definition at line 272 of file apdbCassandra.py.

◆ metadataReplicaVersionKey [2/2]

lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.metadataReplicaVersionKey

Definition at line 649 of file apdbCassandra.py.

◆ metadataSchemaVersionKey [1/2]

static

Definition at line 266 of file apdbCassandra.py.

◆ metadataSchemaVersionKey [2/2]

lsst.dax.apdb.cassandra.apdbCassandra.ApdbCassandra.metadataSchemaVersionKey

Definition at line 643 of file apdbCassandra.py.

◆ partition_zero_epoch

static

Definition at line 289 of file apdbCassandra.py.

The documentation for this class was generated from the following file:

- /j/snowflake/release/lsstsw/stack/lsst-scipipe-8.0.0/Linux64/dax_apdb/g864b0138d7+aa38e45daa/python/lsst/dax/apdb/cassandra/apdbCassandra.py